20240604

This commit is contained in:

parent

6061f636f8

commit

64b78bed3b

192

README.md

192

README.md

@ -14,28 +14,35 @@

|

|||||||

|

|

||||||

[](https://discord.gg/HcsmBBGyVk)

|

[](https://discord.gg/HcsmBBGyVk)

|

||||||

|

|

||||||

[**更新日志**](https://github.com/RVC-Project/Retrieval-based-Voice-Conversion-WebUI/blob/main/docs/cn/Changelog_CN.md) | [**常见问题解答**](https://github.com/RVC-Project/Retrieval-based-Voice-Conversion-WebUI/wiki/%E5%B8%B8%E8%A7%81%E9%97%AE%E9%A2%98%E8%A7%A3%E7%AD%94) | [**AutoDL·5毛钱训练AI歌手**](https://github.com/RVC-Project/Retrieval-based-Voice-Conversion-WebUI/wiki/Autodl%E8%AE%AD%E7%BB%83RVC%C2%B7AI%E6%AD%8C%E6%89%8B%E6%95%99%E7%A8%8B) | [**对照实验记录**](https://github.com/RVC-Project/Retrieval-based-Voice-Conversion-WebUI/wiki/Autodl%E8%AE%AD%E7%BB%83RVC%C2%B7AI%E6%AD%8C%E6%89%8B%E6%95%99%E7%A8%8B](https://github.com/RVC-Project/Retrieval-based-Voice-Conversion-WebUI/wiki/%E5%AF%B9%E7%85%A7%E5%AE%9E%E9%AA%8C%C2%B7%E5%AE%9E%E9%AA%8C%E8%AE%B0%E5%BD%95)) | [**在线演示**](https://modelscope.cn/studios/FlowerCry/RVCv2demo)

|

[**更新日志**](https://github.com/RVC-Project/Retrieval-based-Voice-Conversion-WebUI/blob/main/docs/Changelog_CN.md) | [**常见问题解答**](https://github.com/RVC-Project/Retrieval-based-Voice-Conversion-WebUI/wiki/%E5%B8%B8%E8%A7%81%E9%97%AE%E9%A2%98%E8%A7%A3%E7%AD%94) | [**AutoDL·5毛钱训练AI歌手**](https://github.com/RVC-Project/Retrieval-based-Voice-Conversion-WebUI/wiki/Autodl%E8%AE%AD%E7%BB%83RVC%C2%B7AI%E6%AD%8C%E6%89%8B%E6%95%99%E7%A8%8B) | [**对照实验记录**](https://github.com/RVC-Project/Retrieval-based-Voice-Conversion-WebUI/wiki/Autodl%E8%AE%AD%E7%BB%83RVC%C2%B7AI%E6%AD%8C%E6%89%8B%E6%95%99%E7%A8%8B](https://github.com/RVC-Project/Retrieval-based-Voice-Conversion-WebUI/wiki/%E5%AF%B9%E7%85%A7%E5%AE%9E%E9%AA%8C%C2%B7%E5%AE%9E%E9%AA%8C%E8%AE%B0%E5%BD%95)) | [**在线演示**](https://modelscope.cn/studios/FlowerCry/RVCv2demo)

|

||||||

|

|

||||||

|

[**English**](./docs/en/README.en.md) | [**中文简体**](./README.md) | [**日本語**](./docs/jp/README.ja.md) | [**한국어**](./docs/kr/README.ko.md) ([**韓國語**](./docs/kr/README.ko.han.md)) | [**Français**](./docs/fr/README.fr.md) | [**Türkçe**](./docs/tr/README.tr.md) | [**Português**](./docs/pt/README.pt.md)

|

||||||

|

|

||||||

</div>

|

</div>

|

||||||

|

|

||||||

------

|

|

||||||

|

|

||||||

[**English**](./docs/en/README.en.md) | [**中文简体**](./README.md) | [**日本語**](./docs/jp/README.ja.md) | [**한국어**](./docs/kr/README.ko.md) ([**韓國語**](./docs/kr/README.ko.han.md)) | [**Français**](./docs/fr/README.fr.md)| [**Türkçe**](./docs/tr/README.tr.md)

|

|

||||||

|

|

||||||

点此查看我们的[演示视频](https://www.bilibili.com/video/BV1pm4y1z7Gm/) !

|

|

||||||

|

|

||||||

训练推理界面:go-web.bat

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

实时变声界面:go-realtime-gui.bat

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

> 底模使用接近50小时的开源高质量VCTK训练集训练,无版权方面的顾虑,请大家放心使用

|

> 底模使用接近50小时的开源高质量VCTK训练集训练,无版权方面的顾虑,请大家放心使用

|

||||||

|

|

||||||

> 请期待RVCv3的底模,参数更大,数据更大,效果更好,基本持平的推理速度,需要训练数据量更少。

|

> 请期待RVCv3的底模,参数更大,数据更大,效果更好,基本持平的推理速度,需要训练数据量更少。

|

||||||

|

|

||||||

|

<table>

|

||||||

|

<tr>

|

||||||

|

<td align="center">训练推理界面</td>

|

||||||

|

<td align="center">实时变声界面</td>

|

||||||

|

</tr>

|

||||||

|

<tr>

|

||||||

|

<td align="center"><img src="https://github.com/RVC-Project/Retrieval-based-Voice-Conversion-WebUI/assets/129054828/092e5c12-0d49-4168-a590-0b0ef6a4f630"></td>

|

||||||

|

<td align="center"><img src="https://github.com/RVC-Project/Retrieval-based-Voice-Conversion-WebUI/assets/129054828/730b4114-8805-44a1-ab1a-04668f3c30a6"></td>

|

||||||

|

</tr>

|

||||||

|

<tr>

|

||||||

|

<td align="center">go-web.bat</td>

|

||||||

|

<td align="center">go-realtime-gui.bat</td>

|

||||||

|

</tr>

|

||||||

|

<tr>

|

||||||

|

<td align="center">可以自由选择想要执行的操作。</td>

|

||||||

|

<td align="center">我们已经实现端到端170ms延迟。如使用ASIO输入输出设备,已能实现端到端90ms延迟,但非常依赖硬件驱动支持。</td>

|

||||||

|

</tr>

|

||||||

|

</table>

|

||||||

|

|

||||||

## 简介

|

## 简介

|

||||||

本仓库具有以下特点

|

本仓库具有以下特点

|

||||||

+ 使用top1检索替换输入源特征为训练集特征来杜绝音色泄漏

|

+ 使用top1检索替换输入源特征为训练集特征来杜绝音色泄漏

|

||||||

@ -47,47 +54,55 @@

|

|||||||

+ 使用最先进的[人声音高提取算法InterSpeech2023-RMVPE](#参考项目)根绝哑音问题。效果最好(显著地)但比crepe_full更快、资源占用更小

|

+ 使用最先进的[人声音高提取算法InterSpeech2023-RMVPE](#参考项目)根绝哑音问题。效果最好(显著地)但比crepe_full更快、资源占用更小

|

||||||

+ A卡I卡加速支持

|

+ A卡I卡加速支持

|

||||||

|

|

||||||

|

点此查看我们的[演示视频](https://www.bilibili.com/video/BV1pm4y1z7Gm/) !

|

||||||

|

|

||||||

## 环境配置

|

## 环境配置

|

||||||

以下指令需在 Python 版本大于3.8的环境中执行。

|

以下指令需在 Python 版本大于3.8的环境中执行。

|

||||||

|

|

||||||

(Windows/Linux)

|

### Windows/Linux/MacOS等平台通用方法

|

||||||

首先通过 pip 安装主要依赖:

|

下列方法任选其一。

|

||||||

|

#### 1. 通过 pip 安装依赖

|

||||||

|

1. 安装Pytorch及其核心依赖,若已安装则跳过。参考自: https://pytorch.org/get-started/locally/

|

||||||

```bash

|

```bash

|

||||||

# 安装Pytorch及其核心依赖,若已安装则跳过

|

|

||||||

# 参考自: https://pytorch.org/get-started/locally/

|

|

||||||

pip install torch torchvision torchaudio

|

pip install torch torchvision torchaudio

|

||||||

|

```

|

||||||

#如果是win系统+Nvidia Ampere架构(RTX30xx),根据 #21 的经验,需要指定pytorch对应的cuda版本

|

2. 如果是 win 系统 + Nvidia Ampere 架构(RTX30xx),根据 #21 的经验,需要指定 pytorch 对应的 cuda 版本

|

||||||

#pip install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu117

|

```bash

|

||||||

|

pip install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu117

|

||||||

|

```

|

||||||

|

3. 根据自己的显卡安装对应依赖

|

||||||

|

- N卡

|

||||||

|

```bash

|

||||||

|

pip install -r requirements.txt

|

||||||

|

```

|

||||||

|

- A卡/I卡

|

||||||

|

```bash

|

||||||

|

pip install -r requirements-dml.txt

|

||||||

|

```

|

||||||

|

- A卡ROCM(Linux)

|

||||||

|

```bash

|

||||||

|

pip install -r requirements-amd.txt

|

||||||

|

```

|

||||||

|

- I卡IPEX(Linux)

|

||||||

|

```bash

|

||||||

|

pip install -r requirements-ipex.txt

|

||||||

```

|

```

|

||||||

|

|

||||||

可以使用 poetry 来安装依赖:

|

#### 2. 通过 poetry 来安装依赖

|

||||||

|

安装 Poetry 依赖管理工具,若已安装则跳过。参考自: https://python-poetry.org/docs/#installation

|

||||||

```bash

|

```bash

|

||||||

# 安装 Poetry 依赖管理工具, 若已安装则跳过

|

|

||||||

# 参考自: https://python-poetry.org/docs/#installation

|

|

||||||

curl -sSL https://install.python-poetry.org | python3 -

|

curl -sSL https://install.python-poetry.org | python3 -

|

||||||

|

|

||||||

# 通过poetry安装依赖

|

|

||||||

poetry install

|

|

||||||

```

|

```

|

||||||

|

|

||||||

你也可以通过 pip 来安装依赖:

|

通过 Poetry 安装依赖时,python 建议使用 3.7-3.10 版本,其余版本在安装 llvmlite==0.39.0 时会出现冲突

|

||||||

```bash

|

```bash

|

||||||

N卡:

|

poetry init -n

|

||||||

pip install -r requirements.txt

|

poetry env use "path to your python.exe"

|

||||||

|

poetry run pip install -r requirments.txt

|

||||||

A卡/I卡:

|

|

||||||

pip install -r requirements-dml.txt

|

|

||||||

|

|

||||||

A卡Rocm(Linux):

|

|

||||||

pip install -r requirements-amd.txt

|

|

||||||

|

|

||||||

I卡IPEX(Linux):

|

|

||||||

pip install -r requirements-ipex.txt

|

|

||||||

```

|

```

|

||||||

|

|

||||||

------

|

### MacOS

|

||||||

Mac 用户可以通过 `run.sh` 来安装依赖:

|

可以通过 `run.sh` 来安装依赖

|

||||||

```bash

|

```bash

|

||||||

sh ./run.sh

|

sh ./run.sh

|

||||||

```

|

```

|

||||||

@ -97,48 +112,48 @@ RVC需要其他一些预模型来推理和训练。

|

|||||||

|

|

||||||

你可以从我们的[Hugging Face space](https://huggingface.co/lj1995/VoiceConversionWebUI/tree/main/)下载到这些模型。

|

你可以从我们的[Hugging Face space](https://huggingface.co/lj1995/VoiceConversionWebUI/tree/main/)下载到这些模型。

|

||||||

|

|

||||||

以下是一份清单,包括了所有RVC所需的预模型和其他文件的名称:

|

### 1. 下载 assets

|

||||||

|

以下是一份清单,包括了所有RVC所需的预模型和其他文件的名称。你可以在`tools`文件夹找到下载它们的脚本。

|

||||||

|

|

||||||

|

- ./assets/hubert/hubert_base.pt

|

||||||

|

|

||||||

|

- ./assets/pretrained

|

||||||

|

|

||||||

|

- ./assets/uvr5_weights

|

||||||

|

|

||||||

|

想使用v2版本模型的话,需要额外下载

|

||||||

|

|

||||||

|

- ./assets/pretrained_v2

|

||||||

|

|

||||||

|

### 2. 安装 ffmpeg

|

||||||

|

若ffmpeg和ffprobe已安装则跳过。

|

||||||

|

|

||||||

|

#### Ubuntu/Debian 用户

|

||||||

```bash

|

```bash

|

||||||

./assets/hubert/hubert_base.pt

|

sudo apt install ffmpeg

|

||||||

|

|

||||||

./assets/pretrained

|

|

||||||

|

|

||||||

./assets/uvr5_weights

|

|

||||||

|

|

||||||

想测试v2版本模型的话,需要额外下载

|

|

||||||

|

|

||||||

./assets/pretrained_v2

|

|

||||||

|

|

||||||

如果你正在使用Windows,则你可能需要这个文件,若ffmpeg和ffprobe已安装则跳过; ubuntu/debian 用户可以通过apt install ffmpeg来安装这2个库, Mac 用户则可以通过brew install ffmpeg来安装 (需要预先安装brew)

|

|

||||||

|

|

||||||

./ffmpeg

|

|

||||||

|

|

||||||

https://huggingface.co/lj1995/VoiceConversionWebUI/blob/main/ffmpeg.exe

|

|

||||||

|

|

||||||

./ffprobe

|

|

||||||

|

|

||||||

https://huggingface.co/lj1995/VoiceConversionWebUI/blob/main/ffprobe.exe

|

|

||||||

|

|

||||||

如果你想使用最新的RMVPE人声音高提取算法,则你需要下载音高提取模型参数并放置于RVC根目录

|

|

||||||

|

|

||||||

https://huggingface.co/lj1995/VoiceConversionWebUI/blob/main/rmvpe.pt

|

|

||||||

|

|

||||||

A卡I卡用户需要的dml环境要请下载

|

|

||||||

|

|

||||||

https://huggingface.co/lj1995/VoiceConversionWebUI/blob/main/rmvpe.onnx

|

|

||||||

|

|

||||||

```

|

```

|

||||||

之后使用以下指令来启动WebUI:

|

#### MacOS 用户

|

||||||

```bash

|

```bash

|

||||||

python infer-web.py

|

brew install ffmpeg

|

||||||

```

|

```

|

||||||

如果你正在使用Windows 或 macOS,你可以直接下载并解压`RVC-beta.7z`,前者可以运行`go-web.bat`以启动WebUI,后者则运行命令`sh ./run.sh`以启动WebUI。

|

#### Windows 用户

|

||||||

|

下载后放置在根目录。

|

||||||

|

- 下载[ffmpeg.exe](https://huggingface.co/lj1995/VoiceConversionWebUI/blob/main/ffmpeg.exe)

|

||||||

|

|

||||||

对于需要使用IPEX技术的I卡用户,请先在终端执行`source /opt/intel/oneapi/setvars.sh`(仅Linux)。

|

- 下载[ffprobe.exe](https://huggingface.co/lj1995/VoiceConversionWebUI/blob/main/ffprobe.exe)

|

||||||

|

|

||||||

仓库内还有一份`小白简易教程.doc`以供参考。

|

### 3. 下载 rmvpe 人声音高提取算法所需文件

|

||||||

|

|

||||||

|

如果你想使用最新的RMVPE人声音高提取算法,则你需要下载音高提取模型参数并放置于RVC根目录。

|

||||||

|

|

||||||

|

- 下载[rmvpe.pt](https://huggingface.co/lj1995/VoiceConversionWebUI/blob/main/rmvpe.pt)

|

||||||

|

|

||||||

|

#### 下载 rmvpe 的 dml 环境(可选, A卡/I卡用户)

|

||||||

|

|

||||||

|

- 下载[rmvpe.onnx](https://huggingface.co/lj1995/VoiceConversionWebUI/blob/main/rmvpe.onnx)

|

||||||

|

|

||||||

|

### 4. AMD显卡Rocm(可选, 仅Linux)

|

||||||

|

|

||||||

## AMD显卡Rocm相关(仅Linux)

|

|

||||||

如果你想基于AMD的Rocm技术在Linux系统上运行RVC,请先在[这里](https://rocm.docs.amd.com/en/latest/deploy/linux/os-native/install.html)安装所需的驱动。

|

如果你想基于AMD的Rocm技术在Linux系统上运行RVC,请先在[这里](https://rocm.docs.amd.com/en/latest/deploy/linux/os-native/install.html)安装所需的驱动。

|

||||||

|

|

||||||

若你使用的是Arch Linux,可以使用pacman来安装所需驱动:

|

若你使用的是Arch Linux,可以使用pacman来安装所需驱动:

|

||||||

@ -155,11 +170,32 @@ export HSA_OVERRIDE_GFX_VERSION=10.3.0

|

|||||||

sudo usermod -aG render $USERNAME

|

sudo usermod -aG render $USERNAME

|

||||||

sudo usermod -aG video $USERNAME

|

sudo usermod -aG video $USERNAME

|

||||||

````

|

````

|

||||||

之后运行WebUI:

|

|

||||||

|

## 开始使用

|

||||||

|

### 直接启动

|

||||||

|

使用以下指令来启动 WebUI

|

||||||

```bash

|

```bash

|

||||||

python infer-web.py

|

python infer-web.py

|

||||||

```

|

```

|

||||||

|

|

||||||

|

若先前使用 Poetry 安装依赖,则可以通过以下方式启动WebUI

|

||||||

|

```bash

|

||||||

|

poetry run python infer-web.py

|

||||||

|

```

|

||||||

|

|

||||||

|

### 使用整合包

|

||||||

|

下载并解压`RVC-beta.7z`

|

||||||

|

#### Windows 用户

|

||||||

|

双击`go-web.bat`

|

||||||

|

#### MacOS 用户

|

||||||

|

```bash

|

||||||

|

sh ./run.sh

|

||||||

|

```

|

||||||

|

### 对于需要使用IPEX技术的I卡用户(仅Linux)

|

||||||

|

```bash

|

||||||

|

source /opt/intel/oneapi/setvars.sh

|

||||||

|

```

|

||||||

|

|

||||||

## 参考项目

|

## 参考项目

|

||||||

+ [ContentVec](https://github.com/auspicious3000/contentvec/)

|

+ [ContentVec](https://github.com/auspicious3000/contentvec/)

|

||||||

+ [VITS](https://github.com/jaywalnut310/vits)

|

+ [VITS](https://github.com/jaywalnut310/vits)

|

||||||

|

|||||||

@ -1 +1 @@

|

|||||||

{"pth_path": "assets/weights/kikiV1.pth", "index_path": "logs/kikiV1.index", "sg_hostapi": "MME", "sg_wasapi_exclusive": false, "sg_input_device": "VoiceMeeter Output (VB-Audio Vo", "sg_output_device": "VoiceMeeter Input (VB-Audio Voi", "sr_type": "sr_device", "threhold": -60.0, "pitch": 12.0, "rms_mix_rate": 0.5, "index_rate": 0.0, "block_time": 0.15, "crossfade_length": 0.08, "extra_time": 2.0, "n_cpu": 4.0, "use_jit": false, "use_pv": false, "f0method": "fcpe"}

|

{"pth_path": "assets/weights/kikiV1.pth", "index_path": "logs/kikiV1.index", "sg_hostapi": "MME", "sg_wasapi_exclusive": false, "sg_input_device": "VoiceMeeter Output (VB-Audio Vo", "sg_output_device": "VoiceMeeter Input (VB-Audio Voi", "sr_type": "sr_device", "threhold": -60.0, "pitch": 12.0, "formant": 0.0, "rms_mix_rate": 0.5, "index_rate": 0.0, "block_time": 0.15, "crossfade_length": 0.08, "extra_time": 2.0, "n_cpu": 4.0, "use_jit": false, "use_pv": false, "f0method": "fcpe"}

|

||||||

@ -14,44 +14,52 @@ An easy-to-use Voice Conversion framework based on VITS.<br><br>

|

|||||||

|

|

||||||

[](https://discord.gg/HcsmBBGyVk)

|

[](https://discord.gg/HcsmBBGyVk)

|

||||||

|

|

||||||

|

[**Changelog**](https://github.com/RVC-Project/Retrieval-based-Voice-Conversion-WebUI/blob/main/docs/Changelog_EN.md) | [**FAQ (Frequently Asked Questions)**](https://github.com/RVC-Project/Retrieval-based-Voice-Conversion-WebUI/wiki/FAQ-(Frequently-Asked-Questions))

|

||||||

|

|

||||||

|

[**English**](../en/README.en.md) | [**中文简体**](../../README.md) | [**日本語**](../jp/README.ja.md) | [**한국어**](../kr/README.ko.md) ([**韓國語**](../kr/README.ko.han.md)) | [**Français**](../fr/README.fr.md) | [**Türkçe**](../tr/README.tr.md) | [**Português**](../pt/README.pt.md)

|

||||||

|

|

||||||

</div>

|

</div>

|

||||||

|

|

||||||

------

|

> Check out our [Demo Video](https://www.bilibili.com/video/BV1pm4y1z7Gm/) here!

|

||||||

[**Changelog**](https://github.com/RVC-Project/Retrieval-based-Voice-Conversion-WebUI/blob/main/docs/en/Changelog_EN.md) | [**FAQ (Frequently Asked Questions)**](https://github.com/RVC-Project/Retrieval-based-Voice-Conversion-WebUI/wiki/FAQ-(Frequently-Asked-Questions))

|

|

||||||

|

|

||||||

[**English**](../en/README.en.md) | [**中文简体**](../../README.md) | [**日本語**](../jp/README.ja.md) | [**한국어**](../kr/README.ko.md) ([**韓國語**](../kr/README.ko.han.md)) | [**Türkçe**](../tr/README.tr.md)

|

<table>

|

||||||

|

<tr>

|

||||||

|

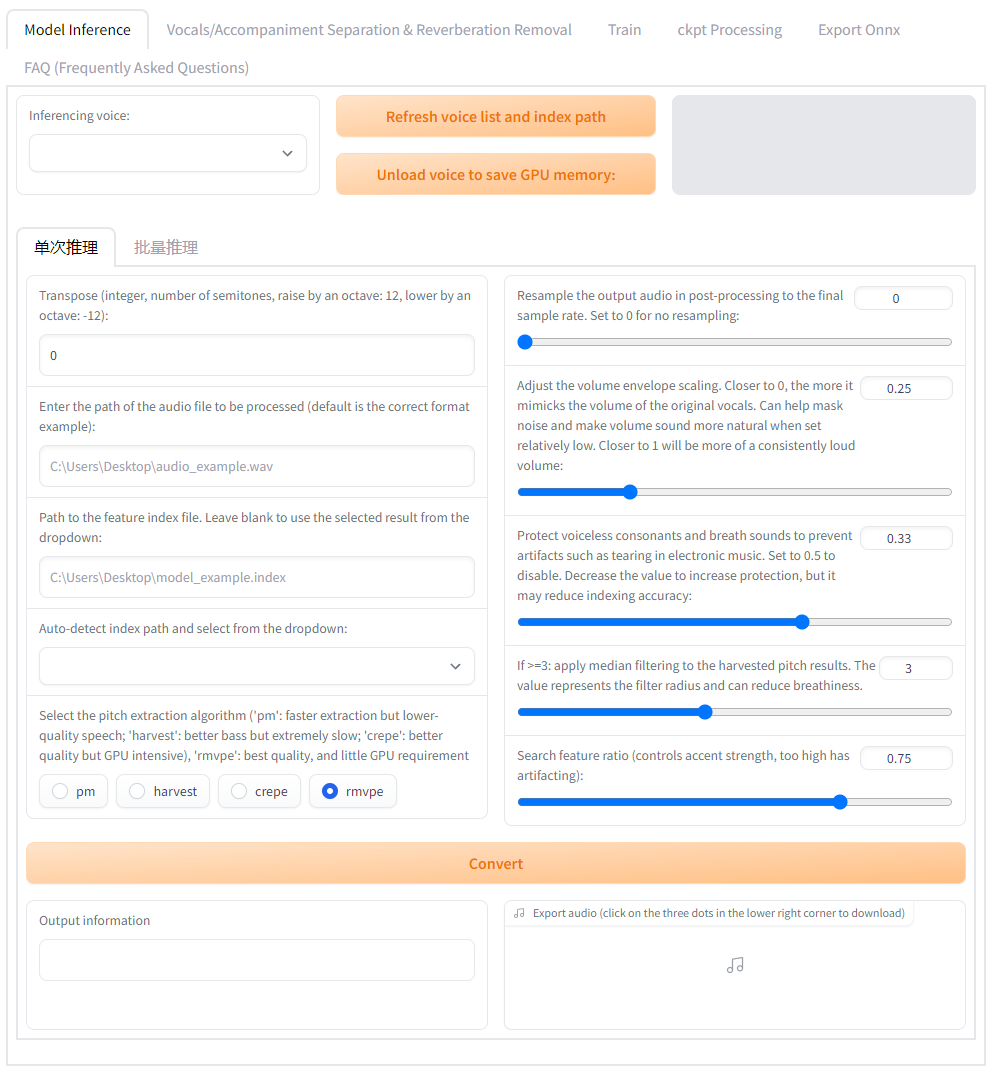

<td align="center">Training and inference Webui</td>

|

||||||

|

<td align="center">Real-time voice changing GUI</td>

|

||||||

|

</tr>

|

||||||

|

<tr>

|

||||||

|

<td align="center"><img src="https://github.com/RVC-Project/Retrieval-based-Voice-Conversion-WebUI/assets/129054828/092e5c12-0d49-4168-a590-0b0ef6a4f630"></td>

|

||||||

|

<td align="center"><img src="https://github.com/RVC-Project/Retrieval-based-Voice-Conversion-WebUI/assets/129054828/730b4114-8805-44a1-ab1a-04668f3c30a6"></td>

|

||||||

|

</tr>

|

||||||

|

<tr>

|

||||||

|

<td align="center">go-web.bat</td>

|

||||||

|

<td align="center">go-realtime-gui.bat</td>

|

||||||

|

</tr>

|

||||||

|

<tr>

|

||||||

|

<td align="center">You can freely choose the action you want to perform.</td>

|

||||||

|

<td align="center">We have achieved an end-to-end latency of 170ms. With the use of ASIO input and output devices, we have managed to achieve an end-to-end latency of 90ms, but it is highly dependent on hardware driver support.</td>

|

||||||

|

</tr>

|

||||||

|

</table>

|

||||||

|

|

||||||

|

> The dataset for the pre-training model uses nearly 50 hours of high quality audio from the VCTK open source dataset.

|

||||||

|

|

||||||

Check our [Demo Video](https://www.bilibili.com/video/BV1pm4y1z7Gm/) here!

|

> High quality licensed song datasets will be added to the training-set often for your use, without having to worry about copyright infringement.

|

||||||

|

|

||||||

Training/Inference WebUI:go-web.bat

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

Realtime Voice Conversion GUI:go-realtime-gui.bat

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

> The dataset for the pre-training model uses nearly 50 hours of high quality VCTK open source dataset.

|

|

||||||

|

|

||||||

> High quality licensed song datasets will be added to training-set one after another for your use, without worrying about copyright infringement.

|

|

||||||

|

|

||||||

> Please look forward to the pretrained base model of RVCv3, which has larger parameters, more training data, better results, unchanged inference speed, and requires less training data for training.

|

> Please look forward to the pretrained base model of RVCv3, which has larger parameters, more training data, better results, unchanged inference speed, and requires less training data for training.

|

||||||

|

|

||||||

## Summary

|

## Features:

|

||||||

This repository has the following features:

|

|

||||||

+ Reduce tone leakage by replacing the source feature to training-set feature using top1 retrieval;

|

+ Reduce tone leakage by replacing the source feature to training-set feature using top1 retrieval;

|

||||||

+ Easy and fast training, even on relatively poor graphics cards;

|

+ Easy + fast training, even on poor graphics cards;

|

||||||

+ Training with a small amount of data also obtains relatively good results (>=10min low noise speech recommended);

|

+ Training with a small amounts of data (>=10min low noise speech recommended);

|

||||||

+ Supporting model fusion to change timbres (using ckpt processing tab->ckpt merge);

|

+ Model fusion to change timbres (using ckpt processing tab->ckpt merge);

|

||||||

+ Easy-to-use Webui interface;

|

+ Easy-to-use WebUI;

|

||||||

+ Use the UVR5 model to quickly separate vocals and instruments.

|

+ UVR5 model to quickly separate vocals and instruments;

|

||||||

+ Use the most powerful High-pitch Voice Extraction Algorithm [InterSpeech2023-RMVPE](#Credits) to prevent the muted sound problem. Provides the best results (significantly) and is faster, with even lower resource consumption than Crepe_full.

|

+ High-pitch Voice Extraction Algorithm [InterSpeech2023-RMVPE](#Credits) to prevent a muted sound problem. Provides the best results (significantly) and is faster with lower resource consumption than Crepe_full;

|

||||||

+ AMD/Intel graphics cards acceleration supported.

|

+ AMD/Intel graphics cards acceleration supported;

|

||||||

+ Intel ARC graphics cards acceleration with IPEX supported.

|

+ Intel ARC graphics cards acceleration with IPEX supported.

|

||||||

|

|

||||||

## Preparing the environment

|

## Preparing the environment

|

||||||

The following commands need to be executed in the environment of Python version 3.8 or higher.

|

The following commands need to be executed with Python 3.8 or higher.

|

||||||

|

|

||||||

(Windows/Linux)

|

(Windows/Linux)

|

||||||

First install the main dependencies through pip:

|

First install the main dependencies through pip:

|

||||||

@ -125,15 +133,6 @@ If you want to test the v2 version model (the v2 version model has changed the i

|

|||||||

|

|

||||||

./assets/pretrained_v2

|

./assets/pretrained_v2

|

||||||

|

|

||||||

#If you are using Windows, you may also need these two files, skip if FFmpeg and FFprobe are installed

|

|

||||||

ffmpeg.exe

|

|

||||||

|

|

||||||

https://huggingface.co/lj1995/VoiceConversionWebUI/blob/main/ffmpeg.exe

|

|

||||||

|

|

||||||

ffprobe.exe

|

|

||||||

|

|

||||||

https://huggingface.co/lj1995/VoiceConversionWebUI/blob/main/ffprobe.exe

|

|

||||||

|

|

||||||

If you want to use the latest SOTA RMVPE vocal pitch extraction algorithm, you need to download the RMVPE weights and place them in the RVC root directory

|

If you want to use the latest SOTA RMVPE vocal pitch extraction algorithm, you need to download the RMVPE weights and place them in the RVC root directory

|

||||||

|

|

||||||

https://huggingface.co/lj1995/VoiceConversionWebUI/blob/main/rmvpe.pt

|

https://huggingface.co/lj1995/VoiceConversionWebUI/blob/main/rmvpe.pt

|

||||||

@ -144,14 +143,22 @@ https://huggingface.co/lj1995/VoiceConversionWebUI/blob/main/rmvpe.pt

|

|||||||

|

|

||||||

```

|

```

|

||||||

|

|

||||||

Intel ARC graphics cards users needs to run `source /opt/intel/oneapi/setvars.sh` command before starting Webui.

|

### 2. Install FFmpeg

|

||||||

|

If you have FFmpeg and FFprobe installed on your computer, you can skip this step.

|

||||||

|

|

||||||

Then use this command to start Webui:

|

#### For Ubuntu/Debian users

|

||||||

```bash

|

```bash

|

||||||

python infer-web.py

|

sudo apt install ffmpeg

|

||||||

```

|

```

|

||||||

|

#### For MacOS users

|

||||||

|

```bash

|

||||||

|

brew install ffmpeg

|

||||||

|

```

|

||||||

|

#### For Windwos users

|

||||||

|

Download these files and place them in the root folder:

|

||||||

|

- [ffmpeg.exe](https://huggingface.co/lj1995/VoiceConversionWebUI/blob/main/ffmpeg.exe)

|

||||||

|

|

||||||

If you are using Windows or macOS, you can download and extract `RVC-beta.7z` to use RVC directly by using `go-web.bat` on windows or `sh ./run.sh` on macOS to start Webui.

|

- [ffprobe.exe](https://huggingface.co/lj1995/VoiceConversionWebUI/blob/main/ffprobe.exe)

|

||||||

|

|

||||||

## ROCm Support for AMD graphic cards (Linux only)

|

## ROCm Support for AMD graphic cards (Linux only)

|

||||||

To use ROCm on Linux install all required drivers as described [here](https://rocm.docs.amd.com/en/latest/deploy/linux/os-native/install.html).

|

To use ROCm on Linux install all required drivers as described [here](https://rocm.docs.amd.com/en/latest/deploy/linux/os-native/install.html).

|

||||||

@ -166,16 +173,30 @@ You might also need to set these environment variables (e.g. on a RX6700XT):

|

|||||||

export ROCM_PATH=/opt/rocm

|

export ROCM_PATH=/opt/rocm

|

||||||

export HSA_OVERRIDE_GFX_VERSION=10.3.0

|

export HSA_OVERRIDE_GFX_VERSION=10.3.0

|

||||||

````

|

````

|

||||||

Also make sure your user is part of the `render` and `video` group:

|

Make sure your user is part of the `render` and `video` group:

|

||||||

````

|

````

|

||||||

sudo usermod -aG render $USERNAME

|

sudo usermod -aG render $USERNAME

|

||||||

sudo usermod -aG video $USERNAME

|

sudo usermod -aG video $USERNAME

|

||||||

````

|

````

|

||||||

After that you can run the WebUI:

|

|

||||||

|

## Get started

|

||||||

|

### start up directly

|

||||||

|

Use the following command to start WebUI:

|

||||||

```bash

|

```bash

|

||||||

python infer-web.py

|

python infer-web.py

|

||||||

```

|

```

|

||||||

|

### Use the integration package

|

||||||

|

Download and extract file `RVC-beta.7z`, then follow the steps below according to your system:

|

||||||

|

#### For Windows users

|

||||||

|

双击`go-web.bat`

|

||||||

|

#### For MacOS users

|

||||||

|

```bash

|

||||||

|

sh ./run.sh

|

||||||

|

```

|

||||||

|

### For Intel IPEX users (Linux Only)

|

||||||

|

```bash

|

||||||

|

source /opt/intel/oneapi/setvars.sh

|

||||||

|

```

|

||||||

## Credits

|

## Credits

|

||||||

+ [ContentVec](https://github.com/auspicious3000/contentvec/)

|

+ [ContentVec](https://github.com/auspicious3000/contentvec/)

|

||||||

+ [VITS](https://github.com/jaywalnut310/vits)

|

+ [VITS](https://github.com/jaywalnut310/vits)

|

||||||

|

|||||||

@ -14,13 +14,13 @@ Un framework simple et facile à utiliser pour la conversion vocale (modificateu

|

|||||||

|

|

||||||

[](https://discord.gg/HcsmBBGyVk)

|

[](https://discord.gg/HcsmBBGyVk)

|

||||||

|

|

||||||

[**Journal de mise à jour**](https://github.com/RVC-Project/Retrieval-based-Voice-Conversion-WebUI/blob/main/docs/cn/Changelog_CN.md) | [**FAQ**](https://github.com/RVC-Project/Retrieval-based-Voice-Conversion-WebUI/wiki/%E5%B8%B8%E8%A7%81%E9%97%AE%E9%A2%98%E8%A7%A3%E7%AD%94) | [**AutoDL·Formation d'un chanteur AI pour 5 centimes**](https://github.com/RVC-Project/Retrieval-based-Voice-Conversion-WebUI/wiki/Autodl%E8%AE%AD%E7%BB%83RVC%C2%B7AI%E6%AD%8C%E6%89%8B%E6%95%99%E7%A8%8B) | [**Enregistrement des expériences comparatives**](https://github.com/RVC-Project/Retrieval-based-Voice-Conversion-WebUI/wiki/%E5%AF%B9%E7%85%A7%E5%AE%9E%E9%AA%8C%C2%B7%E5%AE%9E%E9%AA%8C%E8%AE%B0%E5%BD%95)) | [**Démonstration en ligne**](https://huggingface.co/spaces/Ricecake123/RVC-demo)

|

[**Journal de mise à jour**](https://github.com/RVC-Project/Retrieval-based-Voice-Conversion-WebUI/blob/main/docs/Changelog_CN.md) | [**FAQ**](https://github.com/RVC-Project/Retrieval-based-Voice-Conversion-WebUI/wiki/%E5%B8%B8%E8%A7%81%E9%97%AE%E9%A2%98%E8%A7%A3%E7%AD%94) | [**AutoDL·Formation d'un chanteur AI pour 5 centimes**](https://github.com/RVC-Project/Retrieval-based-Voice-Conversion-WebUI/wiki/Autodl%E8%AE%AD%E7%BB%83RVC%C2%B7AI%E6%AD%8C%E6%89%8B%E6%95%99%E7%A8%8B) | [**Enregistrement des expériences comparatives**](https://github.com/RVC-Project/Retrieval-based-Voice-Conversion-WebUI/wiki/%E5%AF%B9%E7%85%A7%E5%AE%9E%E9%AA%8C%C2%B7%E5%AE%9E%E9%AA%8C%E8%AE%B0%E5%BD%95) | [**Démonstration en ligne**](https://huggingface.co/spaces/Ricecake123/RVC-demo)

|

||||||

|

|

||||||

</div>

|

</div>

|

||||||

|

|

||||||

------

|

------

|

||||||

|

|

||||||

[**English**](./docs/en/README.en.md) |[ **中文简体**](./docs/cn/README.md) | [**日本語**](./docs/jp/README.ja.md) | [**한국어**](./docs/kr/README.ko.md) ([**韓國語**](./docs/kr/README.ko.han.md)) | [**Turc**](./docs/tr/README.tr.md)

|

[**English**](../en/README.en.md) | [ **中文简体**](../../README.md) | [**日本語**](../jp/README.ja.md) | [**한국어**](../kr/README.ko.md) ([**韓國語**](../kr/README.ko.han.md)) | [**Français**](../fr/README.fr.md) | [**Turc**](../tr/README.tr.md) | [**Português**](../pt/README.pt.md)

|

||||||

|

|

||||||

Cliquez ici pour voir notre [vidéo de démonstration](https://www.bilibili.com/video/BV1pm4y1z7Gm/) !

|

Cliquez ici pour voir notre [vidéo de démonstration](https://www.bilibili.com/video/BV1pm4y1z7Gm/) !

|

||||||

|

|

||||||

@ -39,10 +39,10 @@ Ce dépôt a les caractéristiques suivantes :

|

|||||||

+ Interface web simple et facile à utiliser.

|

+ Interface web simple et facile à utiliser.

|

||||||

+ Peut appeler le modèle UVR5 pour séparer rapidement la voix et l'accompagnement.

|

+ Peut appeler le modèle UVR5 pour séparer rapidement la voix et l'accompagnement.

|

||||||

+ Utilise l'algorithme de pitch vocal le plus avancé [InterSpeech2023-RMVPE](#projets-référencés) pour éliminer les problèmes de voix muette. Meilleurs résultats, plus rapide que crepe_full, et moins gourmand en ressources.

|

+ Utilise l'algorithme de pitch vocal le plus avancé [InterSpeech2023-RMVPE](#projets-référencés) pour éliminer les problèmes de voix muette. Meilleurs résultats, plus rapide que crepe_full, et moins gourmand en ressources.

|

||||||

+ Support d'accélération pour les cartes A et I.

|

+ Support d'accélération pour les cartes AMD et Intel.

|

||||||

|

|

||||||

## Configuration de l'environnement

|

## Configuration de l'environnement

|

||||||

Exécutez les commandes suivantes dans un environnement Python de version supérieure à 3.8.

|

Exécutez les commandes suivantes dans un environnement Python de version 3.8 ou supérieure.

|

||||||

|

|

||||||

(Windows/Linux)

|

(Windows/Linux)

|

||||||

Installez d'abord les dépendances principales via pip :

|

Installez d'abord les dépendances principales via pip :

|

||||||

@ -52,7 +52,10 @@ Installez d'abord les dépendances principales via pip :

|

|||||||

pip install torch torchvision torchaudio

|

pip install torch torchvision torchaudio

|

||||||

|

|

||||||

# Pour les utilisateurs de Windows avec une architecture Nvidia Ampere (RTX30xx), en se basant sur l'expérience #21, spécifiez la version CUDA correspondante pour Pytorch.

|

# Pour les utilisateurs de Windows avec une architecture Nvidia Ampere (RTX30xx), en se basant sur l'expérience #21, spécifiez la version CUDA correspondante pour Pytorch.

|

||||||

# pip install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu117

|

pip install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu117

|

||||||

|

|

||||||

|

# Pour Linux + carte AMD, utilisez cette version de Pytorch:

|

||||||

|

pip install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/rocm5.4.2

|

||||||

```

|

```

|

||||||

|

|

||||||

Vous pouvez utiliser poetry pour installer les dépendances :

|

Vous pouvez utiliser poetry pour installer les dépendances :

|

||||||

@ -67,15 +70,17 @@ poetry install

|

|||||||

|

|

||||||

Ou vous pouvez utiliser pip pour installer les dépendances :

|

Ou vous pouvez utiliser pip pour installer les dépendances :

|

||||||

```bash

|

```bash

|

||||||

Cartes Nvidia :

|

# Cartes Nvidia :

|

||||||

|

|

||||||

pip install -r requirements.txt

|

pip install -r requirements.txt

|

||||||

|

|

||||||

Cartes AMD/Intel :

|

# Cartes AMD/Intel :

|

||||||

pip install -

|

pip install -r requirements-dml.txt

|

||||||

|

|

||||||

r requirements-dml.txt

|

# Cartes Intel avec IPEX

|

||||||

|

pip install -r requirements-ipex.txt

|

||||||

|

|

||||||

|

# Cartes AMD sur Linux (ROCm)

|

||||||

|

pip install -r requirements-amd.txt

|

||||||

```

|

```

|

||||||

|

|

||||||

------

|

------

|

||||||

@ -87,7 +92,12 @@ sh ./run.sh

|

|||||||

## Préparation d'autres modèles pré-entraînés

|

## Préparation d'autres modèles pré-entraînés

|

||||||

RVC nécessite d'autres modèles pré-entraînés pour l'inférence et la formation.

|

RVC nécessite d'autres modèles pré-entraînés pour l'inférence et la formation.

|

||||||

|

|

||||||

Vous pouvez télécharger ces modèles depuis notre [espace Hugging Face](https://huggingface.co/lj1995/VoiceConversionWebUI/tree/main/).

|

```bash

|

||||||

|

#Télécharger tous les modèles depuis https://huggingface.co/lj1995/VoiceConversionWebUI/tree/main/

|

||||||

|

python tools/download_models.py

|

||||||

|

```

|

||||||

|

|

||||||

|

Ou vous pouvez télécharger ces modèles depuis notre [espace Hugging Face](https://huggingface.co/lj1995/VoiceConversionWebUI/tree/main/).

|

||||||

|

|

||||||

Voici une liste des modèles et autres fichiers requis par RVC :

|

Voici une liste des modèles et autres fichiers requis par RVC :

|

||||||

```bash

|

```bash

|

||||||

@ -97,29 +107,30 @@ Voici une liste des modèles et autres fichiers requis par RVC :

|

|||||||

|

|

||||||

./assets/uvr5_weights

|

./assets/uvr5_weights

|

||||||

|

|

||||||

Pour tester la version v2 du modèle, téléchargez également :

|

# Pour tester la version v2 du modèle, téléchargez également :

|

||||||

|

|

||||||

./assets/pretrained_v2

|

./assets/pretrained_v2

|

||||||

|

|

||||||

Si vous utilisez Windows, vous pourriez avoir besoin de ces fichiers pour ffmpeg et ffprobe, sautez cette étape si vous avez déjà installé ffmpeg et ffprobe. Les utilisateurs d'ubuntu/debian peuvent installer ces deux bibliothèques avec apt install ffmpeg. Les utilisateurs de Mac peuvent les installer avec brew install ffmpeg (prérequis : avoir installé brew).

|

# Si vous utilisez Windows, vous pourriez avoir besoin de ces fichiers pour ffmpeg et ffprobe, sautez cette étape si vous avez déjà installé ffmpeg et ffprobe. Les utilisateurs d'ubuntu/debian peuvent installer ces deux bibliothèques avec apt install ffmpeg. Les utilisateurs de Mac peuvent les installer avec brew install ffmpeg (prérequis : avoir installé brew).

|

||||||

|

|

||||||

./ffmpeg

|

# ./ffmpeg

|

||||||

|

|

||||||

https://huggingface.co/lj1995/VoiceConversionWebUI/blob/main/ffmpeg.exe

|

https://huggingface.co/lj1995/VoiceConversionWebUI/blob/main/ffmpeg.exe

|

||||||

|

|

||||||

./ffprobe

|

# ./ffprobe

|

||||||

|

|

||||||

https://huggingface.co/lj1995/VoiceConversionWebUI/blob/main/ffprobe.exe

|

https://huggingface.co/lj1995/VoiceConversionWebUI/blob/main/ffprobe.exe

|

||||||

|

|

||||||

Si vous souhaitez utiliser le dernier algorithme RMVPE de pitch vocal, téléchargez les paramètres du modèle de pitch et placez-les dans le répertoire racine de RVC.

|

# Si vous souhaitez utiliser le dernier algorithme RMVPE de pitch vocal, téléchargez les paramètres du modèle de pitch et placez-les dans le répertoire racine de RVC.

|

||||||

|

|

||||||

https://huggingface.co/lj1995/VoiceConversionWebUI/blob/main/rmvpe.pt

|

https://huggingface.co/lj1995/VoiceConversionWebUI/blob/main/rmvpe.pt

|

||||||

|

|

||||||

Les utilisateurs de cartes AMD/Intel nécessitant l'environnement DML doivent télécharger :

|

# Les utilisateurs de cartes AMD/Intel nécessitant l'environnement DML doivent télécharger :

|

||||||

|

|

||||||

https://huggingface.co/lj1995/VoiceConversionWebUI/blob/main/rmvpe.onnx

|

https://huggingface.co/lj1995/VoiceConversionWebUI/blob/main/rmvpe.onnx

|

||||||

|

|

||||||

```

|

```

|

||||||

|

Pour les utilisateurs d'Intel ARC avec IPEX, exécutez d'abord `source /opt/intel/oneapi/setvars.sh`.

|

||||||

Ensuite, exécutez la commande suivante pour démarrer WebUI :

|

Ensuite, exécutez la commande suivante pour démarrer WebUI :

|

||||||

```bash

|

```bash

|

||||||

python infer-web.py

|

python infer-web.py

|

||||||

@ -127,7 +138,28 @@ python infer-web.py

|

|||||||

|

|

||||||

Si vous utilisez Windows ou macOS, vous pouvez télécharger et extraire `RVC-beta.7z`. Les utilisateurs de Windows peuvent exécuter `go-web.bat` pour démarrer WebUI, tandis que les utilisateurs de macOS peuvent exécuter `sh ./run.sh`.

|

Si vous utilisez Windows ou macOS, vous pouvez télécharger et extraire `RVC-beta.7z`. Les utilisateurs de Windows peuvent exécuter `go-web.bat` pour démarrer WebUI, tandis que les utilisateurs de macOS peuvent exécuter `sh ./run.sh`.

|

||||||

|

|

||||||

Il y a également un `Guide facile pour les débutants.doc` inclus pour référence.

|

## Compatibilité ROCm pour les cartes AMD (seulement Linux)

|

||||||

|

Installez tous les pilotes décrits [ici](https://rocm.docs.amd.com/en/latest/deploy/linux/os-native/install.html).

|

||||||

|

|

||||||

|

Sur Arch utilisez pacman pour installer le pilote:

|

||||||

|

````

|

||||||

|

pacman -S rocm-hip-sdk rocm-opencl-sdk

|

||||||

|

````

|

||||||

|

|

||||||

|

Vous devrez peut-être créer ces variables d'environnement (par exemple avec RX6700XT):

|

||||||

|

````

|

||||||

|

export ROCM_PATH=/opt/rocm

|

||||||

|

export HSA_OVERRIDE_GFX_VERSION=10.3.0

|

||||||

|

````

|

||||||

|

Assurez-vous que votre utilisateur est dans les groupes `render` et `video`:

|

||||||

|

````

|

||||||

|

sudo usermod -aG render $USERNAME

|

||||||

|

sudo usermod -aG video $USERNAME

|

||||||

|

````

|

||||||

|

Enfin vous pouvez exécuter WebUI:

|

||||||

|

```bash

|

||||||

|

python infer-web.py

|

||||||

|

```

|

||||||

|

|

||||||

## Crédits

|

## Crédits

|

||||||

+ [ContentVec](https://github.com/auspicious3000/contentvec/)

|

+ [ContentVec](https://github.com/auspicious3000/contentvec/)

|

||||||

|

|||||||

@ -3,107 +3,255 @@

|

|||||||

<h1>Retrieval-based-Voice-Conversion-WebUI</h1>

|

<h1>Retrieval-based-Voice-Conversion-WebUI</h1>

|

||||||

VITSに基づく使いやすい音声変換(voice changer)framework<br><br>

|

VITSに基づく使いやすい音声変換(voice changer)framework<br><br>

|

||||||

|

|

||||||

[](https://github.com/RVC-Project/Retrieval-based-Voice-Conversion-WebUI)

|

||||||

)](https://github.com/RVC-Project/Retrieval-based-Voice-Conversion-WebUI)

|

|

||||||

|

|

||||||

<img src="https://counter.seku.su/cmoe?name=rvc&theme=r34" /><br>

|

<img src="https://counter.seku.su/cmoe?name=rvc&theme=r34" /><br>

|

||||||

|

|

||||||

[](https://colab.research.google.com/github/RVC-Project/Retrieval-based-Voice-Conversion-WebUI/blob/main/Retrieval_based_Voice_Conversion_WebUI.ipynb)

|

[](https://colab.research.google.com/github/RVC-Project/Retrieval-based-Voice-Conversion-WebUI/blob/main/Retrieval_based_Voice_Conversion_WebUI.ipynb)

|

||||||

[](https://github.com/RVC-Project/Retrieval-based-Voice-Conversion-WebUI/blob/main/LICENSE)

|

[](https://github.com/RVC-Project/Retrieval-based-Voice-Conversion-WebUI/blob/main/LICENSE)

|

||||||

[](https://huggingface.co/lj1995/VoiceConversionWebUI/tree/main/)

|

[](https://huggingface.co/lj1995/VoiceConversionWebUI/tree/main/)

|

||||||

|

|

||||||

[](https://discord.gg/HcsmBBGyVk)

|

[](https://discord.gg/HcsmBBGyVk)

|

||||||

|

|

||||||

|

[**更新日誌**](./Changelog_JA.md) | [**よくある質問**](./faq_ja.md) | [**AutoDL·5 円で AI 歌手をトレーニング**](https://github.com/RVC-Project/Retrieval-based-Voice-Conversion-WebUI/wiki/Autodl%E8%AE%AD%E7%BB%83RVC%C2%B7AI%E6%AD%8C%E6%89%8B%E6%95%99%E7%A8%8B) | [**対照実験記録**](https://github.com/RVC-Project/Retrieval-based-Voice-Conversion-WebUI/wiki/%E5%AF%B9%E7%85%A7%E5%AE%9E%E9%AA%8C%C2%B7%E5%AE%9E%E9%AA%8C%E8%AE%B0%E5%BD%95) | [**オンラインデモ**](https://modelscope.cn/studios/FlowerCry/RVCv2demo)

|

||||||

|

|

||||||

|

[**English**](../en/README.en.md) | [**中文简体**](../../README.md) | [**日本語**](../jp/README.ja.md) | [**한국어**](../kr/README.ko.md) ([**韓國語**](../kr/README.ko.han.md)) | [**Français**](../fr/README.fr.md) | [**Türkçe**](../tr/README.tr.md) | [**Português**](../pt/README.pt.md)

|

||||||

|

|

||||||

</div>

|

</div>

|

||||||

|

|

||||||

------

|

|

||||||

|

|

||||||

[**更新日誌**](https://github.com/RVC-Project/Retrieval-based-Voice-Conversion-WebUI/blob/main/docs/cn/Changelog_CN.md)

|

|

||||||

|

|

||||||

[**English**](../en/README.en.md) | [**中文简体**](../../README.md) | [**日本語**](../jp/README.ja.md) | [**한국어**](../kr/README.ko.md) ([**韓國語**](../kr/README.ko.han.md)) | [**Türkçe**](../tr/README.tr.md)

|

|

||||||

|

|

||||||

> デモ動画は[こちら](https://www.bilibili.com/video/BV1pm4y1z7Gm/)でご覧ください。

|

> デモ動画は[こちら](https://www.bilibili.com/video/BV1pm4y1z7Gm/)でご覧ください。

|

||||||

|

|

||||||

> RVCによるリアルタイム音声変換: [w-okada/voice-changer](https://github.com/w-okada/voice-changer)

|

> RVC によるリアルタイム音声変換: [w-okada/voice-changer](https://github.com/w-okada/voice-changer)

|

||||||

|

|

||||||

> 著作権侵害を心配することなく使用できるように、基底モデルは約50時間の高品質なオープンソースデータセットで訓練されています。

|

> 著作権侵害を心配することなく使用できるように、基底モデルは約 50 時間の高品質なオープンソースデータセットで訓練されています。

|

||||||

|

|

||||||

> 今後も、次々と使用許可のある高品質な歌声の資料集を追加し、基底モデルを訓練する予定です。

|

> RVCv3 の基底モデルルをご期待ください。より大きなパラメータ、より大きなデータ、より良い効果を提供し、基本的に同様の推論速度を維持しながら、トレーニングに必要なデータ量はより少なくなります。

|

||||||

|

|

||||||

|

<table>

|

||||||

|

<tr>

|

||||||

|

<td align="center">トレーニングと推論インターフェース</td>

|

||||||

|

<td align="center">リアルタイム音声変換インターフェース</td>

|

||||||

|

</tr>

|

||||||

|

<tr>

|

||||||

|

<td align="center"><img src="https://github.com/RVC-Project/Retrieval-based-Voice-Conversion-WebUI/assets/129054828/092e5c12-0d49-4168-a590-0b0ef6a4f630"></td>

|

||||||

|

<td align="center"><img src="https://github.com/RVC-Project/Retrieval-based-Voice-Conversion-WebUI/assets/129054828/730b4114-8805-44a1-ab1a-04668f3c30a6"></td>

|

||||||

|

</tr>

|

||||||

|

<tr>

|

||||||

|

<td align="center">go-web.bat</td>

|

||||||

|

<td align="center">go-realtime-gui.bat</td>

|

||||||

|

</tr>

|

||||||

|

<tr>

|

||||||

|

<td align="center">実行したい操作を自由に選択できます。</td>

|

||||||

|

<td align="center">既に端から端までの170msの遅延を実現しました。ASIO入出力デバイスを使用すれば、端から端までの90msの遅延を達成できますが、ハードウェアドライバーのサポートに非常に依存しています。</td>

|

||||||

|

</tr>

|

||||||

|

</table>

|

||||||

|

|

||||||

## はじめに

|

## はじめに

|

||||||

|

|

||||||

本リポジトリには下記の特徴があります。

|

本リポジトリには下記の特徴があります。

|

||||||

|

|

||||||

+ Top1検索を用いることで、生の特徴量を訓練用データセット特徴量に変換し、トーンリーケージを削減します。

|

- Top1 検索を用いることで、生の特徴量を訓練用データセット特徴量に変換し、トーンリーケージを削減します。

|

||||||

+ 比較的貧弱なGPUでも、高速かつ簡単に訓練できます。

|

- 比較的貧弱な GPU でも、高速かつ簡単に訓練できます。

|

||||||

+ 少量のデータセットからでも、比較的良い結果を得ることができます。(10分以上のノイズの少ない音声を推奨します。)

|

- 少量のデータセットからでも、比較的良い結果を得ることができます。(10 分以上のノイズの少ない音声を推奨します。)

|

||||||

+ モデルを融合することで、音声を混ぜることができます。(ckpt processingタブの、ckpt mergeを使用します。)

|

- モデルを融合することで、音声を混ぜることができます。(ckpt processing タブの、ckpt merge を使用します。)

|

||||||

+ 使いやすいWebUI。

|

- 使いやすい WebUI。

|

||||||

+ UVR5 Modelも含んでいるため、人の声とBGMを素早く分離できます。

|

- UVR5 Model も含んでいるため、人の声と BGM を素早く分離できます。

|

||||||

|

- 最先端の[人間の声のピッチ抽出アルゴリズム InterSpeech2023-RMVPE](#参照プロジェクト)を使用して無声音問題を解決します。効果は最高(著しく)で、crepe_full よりも速く、リソース使用が少ないです。

|

||||||

|

- A カードと I カードの加速サポート

|

||||||

|

|

||||||

|

私たちの[デモビデオ](https://www.bilibili.com/video/BV1pm4y1z7Gm/)をチェックしてください!

|

||||||

|

|

||||||

## 環境構築

|

## 環境構築

|

||||||

Poetryで依存関係をインストールすることをお勧めします。

|

|

||||||

|

|

||||||

下記のコマンドは、Python3.8以上の環境で実行する必要があります:

|

下記のコマンドは、Python3.8 以上の環境で実行する必要があります:

|

||||||

|

|

||||||

|

### Windows/Linux/MacOS などのプラットフォーム共通方法

|

||||||

|

|

||||||

|

以下の方法のいずれかを選択してください。

|

||||||

|

|

||||||

|

#### 1. pip を通じた依存関係のインストール

|

||||||

|

|

||||||

|

1. Pytorch 及びその主要な依存関係のインストール、すでにインストールされている場合はスキップ。参照:https://pytorch.org/get-started/locally/

|

||||||

|

|

||||||

```bash

|

```bash

|

||||||

# PyTorch関連の依存関係をインストール。インストール済の場合は省略。

|

|

||||||

# 参照先: https://pytorch.org/get-started/locally/

|

|

||||||

pip install torch torchvision torchaudio

|

pip install torch torchvision torchaudio

|

||||||

|

|

||||||

#Windows+ Nvidia Ampere Architecture(RTX30xx)の場合、 #21 に従い、pytorchに対応するcuda versionを指定する必要があります。

|

|

||||||

#pip install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu117

|

|

||||||

|

|

||||||

# PyTorch関連の依存関係をインストール。インストール済の場合は省略。

|

|

||||||

# 参照先: https://python-poetry.org/docs/#installation

|

|

||||||

curl -sSL https://install.python-poetry.org | python3 -

|

|

||||||

|

|

||||||

# Poetry経由で依存関係をインストール

|

|

||||||

poetry install

|

|

||||||

```

|

```

|

||||||

|

|

||||||

pipでも依存関係のインストールが可能です:

|

2. win システム + Nvidia Ampere アーキテクチャ(RTX30xx)の場合、#21 の経験に基づいて pytorch に対応する cuda バージョンを指定

|

||||||

|

|

||||||

|

```bash

|

||||||

|

pip install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu117

|

||||||

|

```

|

||||||

|

|

||||||

|

3. 自分のグラフィックカードに合わせた依存関係のインストール

|

||||||

|

|

||||||

|

- N カード

|

||||||

|

|

||||||

```bash

|

```bash

|

||||||

pip install -r requirements.txt

|

pip install -r requirements.txt

|

||||||

```

|

```

|

||||||

|

|

||||||

## 基底modelsを準備

|

- A カード/I カード

|

||||||

RVCは推論/訓練のために、様々な事前訓練を行った基底モデルを必要とします。

|

|

||||||

|

|

||||||

modelsは[Hugging Face space](https://huggingface.co/lj1995/VoiceConversionWebUI/tree/main/)からダウンロードできます。

|

|

||||||

|

|

||||||

以下は、RVCに必要な基底モデルやその他のファイルの一覧です。

|

|

||||||

```bash

|

```bash

|

||||||

./assets/hubert/hubert_base.pt

|

pip install -r requirements-dml.txt

|

||||||

|

|

||||||

./assets/pretrained

|

|

||||||

|

|

||||||

./assets/uvr5_weights

|

|

||||||

|

|

||||||

V2のモデルを使用するには、追加でファイルをダウンロードする必要があります

|

|

||||||

|

|

||||||

./assets/pretrained_v2

|

|

||||||

|

|

||||||

# ffmpegがすでにinstallされている場合は省略

|

|

||||||

./ffmpeg

|

|

||||||

```

|

```

|

||||||

その後、下記のコマンドでWebUIを起動します。

|

|

||||||

|

- A カード ROCM(Linux)

|

||||||

|

|

||||||

```bash

|

```bash

|

||||||

|

pip install -r requirements-amd.txt

|

||||||

|

```

|

||||||

|

|

||||||

|

- I カード IPEX(Linux)

|

||||||

|

|

||||||

|

```bash

|

||||||

|

pip install -r requirements-ipex.txt

|

||||||

|

```

|

||||||

|

|

||||||

|

#### 2. poetry を通じた依存関係のインストール

|

||||||

|

|

||||||

|

Poetry 依存関係管理ツールのインストール、すでにインストールされている場合はスキップ。参照:https://python-poetry.org/docs/#installation

|

||||||

|

|

||||||

|

```bash

|

||||||

|

curl -sSL https://install.python-poetry.org | python3 -

|

||||||

|

```

|

||||||

|

|

||||||

|

poetry を使って依存関係をインストール

|

||||||

|

|

||||||

|

```bash

|

||||||

|

poetry install

|

||||||

|

```

|

||||||

|

|

||||||

|

### MacOS

|

||||||

|

|

||||||

|

`run.sh`を使って依存関係をインストールできます

|

||||||

|

|

||||||

|

```bash

|

||||||

|

sh ./run.sh

|

||||||

|

```

|

||||||

|

|

||||||

|

## その他の事前訓練されたモデルの準備

|

||||||

|

|

||||||

|

RVC は推論とトレーニングのために他のいくつかの事前訓練されたモデルが必要です。

|

||||||

|

|

||||||

|

これらのモデルは私たちの[Hugging Face space](https://huggingface.co/lj1995/VoiceConversionWebUI/tree/main/)でダウンロードできます。

|

||||||

|

|

||||||

|

### 1. assets のダウンロード

|

||||||

|

|

||||||

|

以下は、RVC に必要なすべての事前学習モデルとその他のファイルのリストです。`tools`フォルダーにこれらをダウンロードするスクリプトがあります。

|

||||||

|

|

||||||

|

- ./assets/hubert/hubert_base.pt

|

||||||

|

|

||||||

|

- ./assets/pretrained

|

||||||

|

|

||||||

|

- ./assets/uvr5_weights

|

||||||

|

|

||||||

|

v2 バージョンのモデルを使用する場合、追加で以下をダウンロードする必要があります。

|

||||||

|

|

||||||

|

- ./assets/pretrained_v2

|

||||||

|

|

||||||

|

### 2. ffmpeg のインストール

|

||||||

|

|

||||||

|

ffmpeg と ffprobe が既にインストールされている場合はスキップします。

|

||||||

|

|

||||||

|

#### Ubuntu/Debian ユーザー

|

||||||

|

|

||||||

|

```bash

|

||||||

|

sudo apt install ffmpeg

|

||||||

|

```

|

||||||

|

|

||||||

|

#### MacOS ユーザー

|

||||||

|

|

||||||

|

```bash

|

||||||

|

brew install ffmpeg

|

||||||

|

```

|

||||||

|

|

||||||

|

#### Windows ユーザー

|

||||||

|

|

||||||

|

ダウンロード後、ルートディレクトリに配置してください。

|

||||||

|

|

||||||

|

- [ffmpeg.exe をダウンロード](https://huggingface.co/lj1995/VoiceConversionWebUI/blob/main/ffmpeg.exe)

|

||||||

|

|

||||||

|

- [ffprobe.exe をダウンロード](https://huggingface.co/lj1995/VoiceConversionWebUI/blob/main/ffprobe.exe)

|

||||||

|

|

||||||

|

### 3. RMVPE 人間の声のピッチ抽出アルゴリズムに必要なファイルのダウンロード

|

||||||

|

|

||||||

|

最新の RMVPE 人間の声のピッチ抽出アルゴリズムを使用する場合、ピッチ抽出モデルのパラメータをダウンロードして RVC のルートディレクトリに配置する必要があります。

|

||||||

|

|

||||||

|

- [rmvpe.pt をダウンロード](https://huggingface.co/lj1995/VoiceConversionWebUI/blob/main/rmvpe.pt)

|

||||||

|

|

||||||

|

#### dml 環境の RMVPE をダウンロード(オプション、A カード/I カードユーザー)

|

||||||

|

|

||||||

|

- [rmvpe.onnx をダウンロード](https://huggingface.co/lj1995/VoiceConversionWebUI/blob/main/rmvpe.onnx)

|

||||||

|

|

||||||

|

### 4. AMD グラフィックカード Rocm(オプション、Linux のみ)

|

||||||

|

|

||||||

|

Linux システムで AMD の Rocm 技術をベースに RVC を実行したい場合、[こちら](https://rocm.docs.amd.com/en/latest/deploy/linux/os-native/install.html)で必要なドライバーを先にインストールしてください。

|

||||||

|

|

||||||

|

Arch Linux を使用している場合、pacman を使用して必要なドライバーをインストールできます。

|

||||||

|

|

||||||

|

```

|

||||||

|

pacman -S rocm-hip-sdk rocm-opencl-sdk

|

||||||

|

```

|

||||||

|

|

||||||

|

一部のモデルのグラフィックカード(例:RX6700XT)の場合、以下のような環境変数を追加で設定する必要があるかもしれません。

|

||||||

|

|

||||||

|

```

|

||||||

|

export ROCM_PATH=/opt/rocm

|

||||||

|

export HSA_OVERRIDE_GFX_VERSION=10.3.0

|

||||||

|

```

|

||||||

|

|

||||||

|

同時に、現在のユーザーが`render`および`video`ユーザーグループに属していることを確認してください。

|

||||||

|

|

||||||

|

```

|

||||||

|

sudo usermod -aG render $USERNAME

|

||||||

|

sudo usermod -aG video $USERNAME

|

||||||

|

```

|

||||||

|

|

||||||

|

## 使用開始

|

||||||

|

|

||||||

|

### 直接起動

|

||||||

|

|

||||||

|

以下のコマンドで WebUI を起動します

|

||||||

|

'''bash

|

||||||

python infer-web.py

|

python infer-web.py

|

||||||

```

|

'''

|

||||||

Windowsをお使いの方は、直接`RVC-beta.7z`をダウンロード後に展開し、`go-web.bat`をクリックすることで、WebUIを起動することができます。(7zipが必要です。)

|

|

||||||

|

|

||||||

また、リポジトリに[小白简易教程.doc](./小白简易教程.doc)がありますので、参考にしてください(中国語版のみ)。

|

### 統合パッケージの使用

|

||||||

|

|

||||||

|

`RVC-beta.7z`をダウンロードして解凍

|

||||||

|

|

||||||

|

#### Windows ユーザー

|

||||||

|

|

||||||

|

`go-web.bat`をダブルクリック

|

||||||

|

|

||||||

|

#### MacOS ユーザー

|

||||||

|

|

||||||

|

'''bash

|

||||||

|

sh ./run.sh

|

||||||

|

'''

|

||||||

|

|

||||||

|

### IPEX 技術が必要な I カードユーザー向け(Linux のみ)

|

||||||

|

|

||||||

|

'''bash

|

||||||

|

source /opt/intel/oneapi/setvars.sh

|

||||||

|

'''

|

||||||

|

|

||||||

## 参考プロジェクト

|

## 参考プロジェクト

|

||||||

+ [ContentVec](https://github.com/auspicious3000/contentvec/)

|

|

||||||

+ [VITS](https://github.com/jaywalnut310/vits)

|

|

||||||

+ [HIFIGAN](https://github.com/jik876/hifi-gan)

|

|

||||||

+ [Gradio](https://github.com/gradio-app/gradio)

|

|

||||||

+ [FFmpeg](https://github.com/FFmpeg/FFmpeg)

|

|

||||||

+ [Ultimate Vocal Remover](https://github.com/Anjok07/ultimatevocalremovergui)

|

|

||||||

+ [audio-slicer](https://github.com/openvpi/audio-slicer)

|

|

||||||

|

|

||||||

## 貢献者(contributor)の皆様の尽力に感謝します

|

- [ContentVec](https://github.com/auspicious3000/contentvec/)

|

||||||

|

- [VITS](https://github.com/jaywalnut310/vits)

|

||||||

|

- [HIFIGAN](https://github.com/jik876/hifi-gan)

|

||||||

|

- [Gradio](https://github.com/gradio-app/gradio)

|

||||||

|

- [FFmpeg](https://github.com/FFmpeg/FFmpeg)

|

||||||

|

- [Ultimate Vocal Remover](https://github.com/Anjok07/ultimatevocalremovergui)

|

||||||

|

- [audio-slicer](https://github.com/openvpi/audio-slicer)

|

||||||

|

- [Vocal pitch extraction:RMVPE](https://github.com/Dream-High/RMVPE)

|

||||||

|

- 事前訓練されたモデルは[yxlllc](https://github.com/yxlllc/RMVPE)と[RVC-Boss](https://github.com/RVC-Boss)によって訓練され、テストされました。

|

||||||

|

|

||||||

|

## すべての貢献者の努力に感謝します

|

||||||

|

|

||||||

<a href="https://github.com/RVC-Project/Retrieval-based-Voice-Conversion-WebUI/graphs/contributors" target="_blank">

|

<a href="https://github.com/RVC-Project/Retrieval-based-Voice-Conversion-WebUI/graphs/contributors" target="_blank">

|

||||||

<img src="https://contrib.rocks/image?repo=RVC-Project/Retrieval-based-Voice-Conversion-WebUI" />

|

<img src="https://contrib.rocks/image?repo=RVC-Project/Retrieval-based-Voice-Conversion-WebUI" />

|

||||||

</a>

|

</a>

|

||||||

|

|||||||

@ -1,5 +1,22 @@

|

|||||||

### 2023-08-13

|

### 2023년 10월 6일 업데이트

|

||||||

|

|

||||||

|

실시간 음성 변환을 위한 인터페이스인 go-realtime-gui.bat/gui_v1.py를 제작했습니다(사실 이는 이미 존재했었습니다). 이번 업데이트는 주로 실시간 음성 변환 성능을 최적화하는 데 중점을 두었습니다. 0813 버전과 비교하여:

|

||||||

|

|

||||||

|

- 1. 인터페이스 조작 최적화: 매개변수 핫 업데이트(매개변수 조정 시 중단 후 재시작 필요 없음), 모델 지연 로딩(이미 로드된 모델은 재로드 필요 없음), 음량 인자 매개변수 추가(음량을 입력 오디오에 가깝게 조정)

|

||||||

|

- 2. 내장된 노이즈 감소 효과 및 속도 최적화

|

||||||

|

- 3. 추론 속도 크게 향상

|

||||||

|

|

||||||

|

입력 및 출력 장치는 동일한 유형을 선택해야 합니다. 예를 들어, 모두 MME 유형을 선택해야 합니다.

|

||||||

|

|

||||||

|

1006 버전의 전체 업데이트는 다음과 같습니다:

|

||||||

|

|

||||||

|

- 1. rmvpe 음성 피치 추출 알고리즘의 효과를 계속해서 향상, 특히 남성 저음역에 대한 개선이 큼

|

||||||

|

- 2. 추론 인터페이스 레이아웃 최적화

|

||||||

|

|

||||||

|

### 2023년 08월 13일 업데이트

|

||||||

|

|

||||||

1-정기적인 버그 수정

|

1-정기적인 버그 수정

|

||||||

|

|

||||||

- 최소 총 에포크 수를 1로 변경하고, 최소 총 에포크 수를 2로 변경합니다.

|

- 최소 총 에포크 수를 1로 변경하고, 최소 총 에포크 수를 2로 변경합니다.

|

||||||

- 사전 훈련(pre-train) 모델을 사용하지 않는 훈련 오류 수정

|

- 사전 훈련(pre-train) 모델을 사용하지 않는 훈련 오류 수정

|

||||||

- 반주 보컬 분리 후 그래픽 메모리 지우기

|

- 반주 보컬 분리 후 그래픽 메모리 지우기

|

||||||

@ -9,9 +26,10 @@

|

|||||||

- 실시간 음성 변경 중 faiss 검색으로 인한 CPU 소모 문제 해결

|

- 실시간 음성 변경 중 faiss 검색으로 인한 CPU 소모 문제 해결

|

||||||

|

|

||||||

2-키 업데이트

|

2-키 업데이트

|

||||||

|

|

||||||

- 현재 가장 강력한 오픈 소스 보컬 피치 추출 모델 RMVPE를 훈련하고, 이를 RVC 훈련, 오프라인/실시간 추론에 사용하며, PyTorch/Onx/DirectML을 지원합니다.

|

- 현재 가장 강력한 오픈 소스 보컬 피치 추출 모델 RMVPE를 훈련하고, 이를 RVC 훈련, 오프라인/실시간 추론에 사용하며, PyTorch/Onx/DirectML을 지원합니다.

|

||||||

- 파이토치_DML을 통한 AMD 및 인텔 그래픽 카드 지원

|

- 파이토치\_DML을 통한 AMD 및 인텔 그래픽 카드 지원

|

||||||

(1) 실시간 음성 변화 (2) 추론 (3) 보컬 반주 분리 (4) 현재 지원되지 않는 훈련은 CPU 훈련으로 전환, Onnx_Dml을 통한 gpu의 RMVPE 추론 지원

|

(1) 실시간 음성 변화 (2) 추론 (3) 보컬 반주 분리 (4) 현재 지원되지 않는 훈련은 CPU 훈련으로 전환, Onnx_Dml을 통한 gpu의 RMVPE 추론 지원

|

||||||

|

|

||||||

### 2023년 6월 18일 업데이트

|

### 2023년 6월 18일 업데이트

|

||||||

|

|

||||||

|

|||||||

@ -19,7 +19,7 @@ VITS基盤의 簡單하고使用하기 쉬운音聲變換틀<br><br>

|

|||||||

------

|

------

|

||||||

[**更新日誌**](https://github.com/RVC-Project/Retrieval-based-Voice-Conversion-WebUI/blob/main/docs/Changelog_KO.md)

|

[**更新日誌**](https://github.com/RVC-Project/Retrieval-based-Voice-Conversion-WebUI/blob/main/docs/Changelog_KO.md)

|

||||||

|

|

||||||

[**English**](../en/README.en.md) | [**中文简体**](../../README.md) | [**日本語**](../jp/README.ja.md) | [**한국어**](../kr/README.ko.md) ([**韓國語**](../kr/README.ko.han.md)) | [**Türkçe**](../tr/README.tr.md)

|

[**English**](../en/README.en.md) | [**中文简体**](../../README.md) | [**日本語**](../jp/README.ja.md) | [**한국어**](../kr/README.ko.md) ([**韓國語**](../kr/README.ko.han.md)) | [**Français**](../fr/README.fr.md) | [**Türkçe**](../tr/README.tr.md) | [**Português**](../pt/README.pt.md)

|

||||||

|

|

||||||

> [示範映像](https://www.bilibili.com/video/BV1pm4y1z7Gm/)을 確認해 보세요!

|

> [示範映像](https://www.bilibili.com/video/BV1pm4y1z7Gm/)을 確認해 보세요!

|

||||||

|

|

||||||

|

|||||||

@ -3,104 +3,243 @@

|

|||||||

<h1>Retrieval-based-Voice-Conversion-WebUI</h1>

|

<h1>Retrieval-based-Voice-Conversion-WebUI</h1>

|

||||||

VITS 기반의 간단하고 사용하기 쉬운 음성 변환 프레임워크.<br><br>

|

VITS 기반의 간단하고 사용하기 쉬운 음성 변환 프레임워크.<br><br>

|

||||||

|

|

||||||

[](https://github.com/RVC-Project/Retrieval-based-Voice-Conversion-WebUI)

|

||||||

)](https://github.com/RVC-Project/Retrieval-based-Voice-Conversion-WebUI)

|

|

||||||

|

|

||||||

<img src="https://counter.seku.su/cmoe?name=rvc&theme=r34" /><br>

|

<img src="https://counter.seku.su/cmoe?name=rvc&theme=r34" /><br>

|

||||||

|

|

||||||

[](https://colab.research.google.com/github/RVC-Project/Retrieval-based-Voice-Conversion-WebUI/blob/main/Retrieval_based_Voice_Conversion_WebUI.ipynb)

|

[](https://colab.research.google.com/github/RVC-Project/Retrieval-based-Voice-Conversion-WebUI/blob/main/Retrieval_based_Voice_Conversion_WebUI.ipynb)

|

||||||

[](https://github.com/RVC-Project/Retrieval-based-Voice-Conversion-WebUI/blob/main/LICENSE)

|

[](https://github.com/RVC-Project/Retrieval-based-Voice-Conversion-WebUI/blob/main/LICENSE)

|

||||||

[](https://huggingface.co/lj1995/VoiceConversionWebUI/tree/main/)

|

[](https://huggingface.co/lj1995/VoiceConversionWebUI/tree/main/)

|

||||||

|

|

||||||

[](https://discord.gg/HcsmBBGyVk)

|

[](https://discord.gg/HcsmBBGyVk)

|

||||||

|

|

||||||

|

[**업데이트 로그**](./Changelog_KO.md) | [**자주 묻는 질문**](./faq_ko.md) | [**AutoDL·5원으로 AI 가수 훈련**](https://github.com/RVC-Project/Retrieval-based-Voice-Conversion-WebUI/wiki/Autodl%E8%AE%AD%E7%BB%83RVC%C2%B7AI%E6%AD%8C%E6%89%8B%E6%95%99%E7%A8%8B) | [**대조 실험 기록**](https://github.com/RVC-Project/Retrieval-based-Voice-Conversion-WebUI/wiki/%E5%AF%B9%E7%85%A7%E5%AE%9E%E9%AA%8C%C2%B7%E5%AE%9E%E9%AA%8C%E8%AE%B0%E5%BD%95) | [**온라인 데모**](https://modelscope.cn/studios/FlowerCry/RVCv2demo)

|

||||||

|

|

||||||

|

[**English**](../en/README.en.md) | [**中文简体**](../../README.md) | [**日本語**](../jp/README.ja.md) | [**한국어**](../kr/README.ko.md) ([**韓國語**](../kr/README.ko.han.md)) | [**Français**](../fr/README.fr.md) | [**Türkçe**](../tr/README.tr.md) | [**Português**](../pt/README.pt.md)

|

||||||

|

|

||||||

</div>

|

</div>

|

||||||

|

|

||||||

---

|

|

||||||

|

|

||||||

[**업데이트 로그**](https://github.com/RVC-Project/Retrieval-based-Voice-Conversion-WebUI/blob/main/docs/kr/Changelog_KO.md)

|

|

||||||

|

|

||||||

[**English**](../en/README.en.md) | [**中文简体**](../../README.md) | [**日本語**](../jp/README.ja.md) | [**한국어**](../kr/README.ko.md) ([**韓國語**](../kr/README.ko.han.md)) | [**Türkçe**](../tr/README.tr.md)

|

|

||||||

|

|

||||||

> [데모 영상](https://www.bilibili.com/video/BV1pm4y1z7Gm/)을 확인해 보세요!

|

> [데모 영상](https://www.bilibili.com/video/BV1pm4y1z7Gm/)을 확인해 보세요!

|

||||||

|

|

||||||

> RVC를 활용한 실시간 음성변환: [w-okada/voice-changer](https://github.com/w-okada/voice-changer)

|

> RVC를 활용한 실시간 음성변환: [w-okada/voice-changer](https://github.com/w-okada/voice-changer)

|

||||||

|

|

||||||

> 기본 모델은 50시간 가량의 고퀄리티 오픈 소스 VCTK 데이터셋을 사용하였으므로, 저작권상의 염려가 없으니 안심하고 사용하시기 바랍니다.

|

> 기본 모델은 50시간 가량의 고퀄리티 오픈 소스 VCTK 데이터셋을 사용하였으므로, 저작권상의 염려가 없으니 안심하고 사용하시기 바랍니다.

|

||||||

|

|

||||||

> 저작권 문제가 없는 고퀄리티의 노래를 이후에도 계속해서 훈련할 예정입니다.

|

> 더 큰 매개변수, 더 큰 데이터, 더 나은 효과, 기본적으로 동일한 추론 속도, 더 적은 양의 훈련 데이터가 필요한 RVCv3의 기본 모델을 기대해 주십시오.

|

||||||

|

|

||||||

|

<table>

|

||||||

|

<tr>

|

||||||

|

<td align="center">훈련 및 추론 인터페이스</td>

|

||||||

|

<td align="center">실시간 음성 변환 인터페이스</td>

|

||||||

|

</tr>

|

||||||

|

<tr>

|

||||||

|

<td align="center"><img src="https://github.com/RVC-Project/Retrieval-based-Voice-Conversion-WebUI/assets/129054828/092e5c12-0d49-4168-a590-0b0ef6a4f630"></td>

|

||||||

|

<td align="center"><img src="https://github.com/RVC-Project/Retrieval-based-Voice-Conversion-WebUI/assets/129054828/730b4114-8805-44a1-ab1a-04668f3c30a6"></td>

|

||||||

|

</tr>

|

||||||

|

<tr>

|

||||||

|

<td align="center">go-web.bat</td>

|

||||||

|

<td align="center">go-realtime-gui.bat</td>

|

||||||

|

</tr>

|

||||||

|

<tr>

|

||||||

|

<td align="center">원하는 작업을 자유롭게 선택할 수 있습니다.</td>

|

||||||

|

<td align="center">우리는 이미 끝에서 끝까지 170ms의 지연을 실현했습니다. ASIO 입력 및 출력 장치를 사용하면 끝에서 끝까지 90ms의 지연을 달성할 수 있지만, 이는 하드웨어 드라이버 지원에 매우 의존적입니다.</td>

|

||||||

|

</tr>

|

||||||

|

</table>

|

||||||

|

|

||||||

## 소개

|

## 소개

|

||||||

|

|

||||||

본 Repo는 다음과 같은 특징을 가지고 있습니다:

|

본 Repo는 다음과 같은 특징을 가지고 있습니다:

|

||||||

|

|

||||||

- top1 검색을 이용하여 입력 음색 특징을 훈련 세트 음색 특징으로 대체하여 음색의 누출을 방지;

|

- top1 검색을 이용하여 입력 음색 특징을 훈련 세트 음색 특징으로 대체하여 음색의 누출을 방지

|

||||||

- 상대적으로 낮은 성능의 GPU에서도 빠른 훈련 가능;

|

- 상대적으로 낮은 성능의 GPU에서도 빠른 훈련 가능

|

||||||

- 적은 양의 데이터로 훈련해도 좋은 결과를 얻을 수 있음 (최소 10분 이상의 저잡음 음성 데이터를 사용하는 것을 권장);

|

- 적은 양의 데이터로 훈련해도 좋은 결과를 얻을 수 있음 (최소 10분 이상의 저잡음 음성 데이터를 사용하는 것을 권장)

|

||||||

- 모델 융합을 통한 음색의 변조 가능 (ckpt 처리 탭->ckpt 병합 선택);

|

- 모델 융합을 통한 음색의 변조 가능 (ckpt 처리 탭->ckpt 병합 선택)

|

||||||

- 사용하기 쉬운 WebUI (웹 인터페이스);

|

- 사용하기 쉬운 WebUI (웹 인터페이스)

|

||||||

- UVR5 모델을 이용하여 목소리와 배경음악의 빠른 분리;

|

- UVR5 모델을 이용하여 목소리와 배경음악의 빠른 분리;

|

||||||

|

- 최첨단 [음성 피치 추출 알고리즘 InterSpeech2023-RMVPE](#参考项目)을 사용하여 무성음 문제를 해결합니다. 효과는 최고(압도적)이며 crepe_full보다 더 빠르고 리소스 사용이 적음

|

||||||

|

- A카드와 I카드 가속을 지원

|

||||||

|

|

||||||

## 환경의 준비

|

해당 프로젝트의 [데모 비디오](https://www.bilibili.com/video/BV1pm4y1z7Gm/)를 확인해보세요!

|

||||||

|

|

||||||

poetry를 통해 dependecies를 설치하는 것을 권장합니다.

|

## 환경 설정

|

||||||

|

|

||||||

다음 명령은 Python 버전 3.8 이상의 환경에서 실행되어야 합니다:

|

다음 명령은 Python 버전이 3.8 이상인 환경에서 실행해야 합니다.

|

||||||

|

|

||||||

|

### Windows/Linux/MacOS 등 플랫폼 공통 방법

|

||||||

|

|

||||||

|

아래 방법 중 하나를 선택하세요.

|

||||||

|

|

||||||

|

#### 1. pip를 통한 의존성 설치

|

||||||

|

|

||||||

|

1. Pytorch 및 의존성 모듈 설치, 이미 설치되어 있으면 생략. 참조: https://pytorch.org/get-started/locally/

|

||||||

|

|

||||||

```bash

|

```bash

|

||||||