diff --git a/.github/ISSUE_TEMPLATE/bug_report.md b/.github/ISSUE_TEMPLATE/bug_report.md

deleted file mode 100644

index 5e395ff..0000000

--- a/.github/ISSUE_TEMPLATE/bug_report.md

+++ /dev/null

@@ -1,28 +0,0 @@

----

-name: Bug report

-about: Report a bug or a feature that is not working how you expect it to

-title: ''

-labels: 'Type: Bug'

-assignees: ''

-

----

-

-**Describe the bug**

-A clear and concise description of what the bug is.

-

-**To Reproduce**

-Steps to reproduce the behavior:

-1. Selected settings: '...'

-2. See error

-

-**Expected behavior**

-A clear and concise description of what you expected to happen.

-

-**Screenshots**

-If applicable, add screenshots to help explain your problem and annotate the images.

-

-**Desktop (please complete the following information):**

- - OS: [e.g. iOS]

-

-**Additional context**

-Add any other context about the problem here.

diff --git a/.github/ISSUE_TEMPLATE/design-improvement.md b/.github/ISSUE_TEMPLATE/design-improvement.md

deleted file mode 100644

index ac6f27d..0000000

--- a/.github/ISSUE_TEMPLATE/design-improvement.md

+++ /dev/null

@@ -1,20 +0,0 @@

----

-name: Design Improvement

-about: Create a report to help us improve the design of the application

-title: "[DESIGN IMPROVEMENT]"

-labels: 'Type: Enhancement'

-assignees: DilanBoskan

-

----

-

-**Describe the flaw**

-A clear and concise description of where the design flaw is to be found

-

-**Expected behavior**

-A desired solution to the flaw.

-

-**Screenshots**

-If applicable, add screenshots to help explain your problem and annotate the images.

-

-**Additional context**

-Add any other context about the problem here.

diff --git a/.github/ISSUE_TEMPLATE/installation-problem.md b/.github/ISSUE_TEMPLATE/installation-problem.md

deleted file mode 100644

index 5da502f..0000000

--- a/.github/ISSUE_TEMPLATE/installation-problem.md

+++ /dev/null

@@ -1,20 +0,0 @@

----

-name: Installation Problem

-about: Create a report to help us identify your problem with the installation

-title: "[INSTALLATION PROBLEM]"

-labels: ''

-assignees: ''

-

----

-

-**To Reproduce**

-On which installation step did you encounter the issue.

-

-**Screenshots**

-Add screenshots to help explain your problem.

-

-**Desktop (please complete the following information):**

- - OS: [e.g. iOS]

-

-**Additional context**

-Add any other context about the problem here.

diff --git a/README.md b/README.md

index 95146fa..897ce5f 100644

--- a/README.md

+++ b/README.md

@@ -1,46 +1,112 @@

-# Ultimate Vocal Remover GUI v5.1.0

- +# Ultimate Vocal Remover GUI v5.2.0

+

+# Ultimate Vocal Remover GUI v5.2.0

+ [](https://github.com/anjok07/ultimatevocalremovergui/releases/latest)

[](https://github.com/anjok07/ultimatevocalremovergui/releases)

## About

-This application is a GUI version of the vocal remover AI created and posted by GitHub user [tsurumeso](https://github.com/tsurumeso). This version also comes with eight high-performance models trained by us. You can find tsurumeso's original command-line version [here](https://github.com/tsurumeso/vocal-remover).

+This application uses state-of-the-art source searation models to remove vocals from audio files. UVR's core developers trained all of the models provided in this package.

-- **The Developers**

- - [Anjok07](https://github.com/anjok07)- Model collaborator & UVR developer.

- - [aufr33](https://github.com/aufr33) - Model collaborator & fellow UVR developer. This project wouldn't be what it is without your help. Thank you for your continued support!

- - [DilanBoskan](https://github.com/DilanBoskan) - Thank you for helping bring the GUI to life! Your contributions to this project are greatly appreciated.

- - [tsurumeso](https://github.com/tsurumeso) - The engineer who authored the original AI code. Thank you for the hard work and dedication you put into the AI code UVR is built on!

+- **Core Developers**

+ - [Anjok07](https://github.com/anjok07)

+ - [aufr33](https://github.com/aufr33)

+

+## Installation

+

+### Windows Installation

+

+This installation bundle contains the UVR interface, Python, PyTorch, and other dependencies needed to run the application effectively. No prerequisites required.

+

+- Please Note:

+ - This installer is intended for those running Windows 10 or higher.

+ - Application functionality for systems running Windows 7 or lower is not guaranteed.

+ - Application functionality for Intel Pentium & Celeron CPUs systems is not guaranteed.

+

+- Download the UVR installer via one of the following mirrors below:

+ - [pCloud Mirror](https://u.pcloud.link/publink/show?code=XZAX8HVZ03lxQbQtyqBLl07bTPaFPm1jUAbX)

+ - [Google Drive Mirror](https://drive.google.com/file/d/1ALH1WB3WjNnRQoPJFIiJHG9uVqH4U50Q/view?usp=sharing)

+

+- **Optional**

+ - The Model Expansion Pack can be downloaded [here]()

+ - Please navigate to the "Updates" tab within the Help Guide provided in the GUI for instructions on installing the Model Expansion pack.

+ - This version of the GUI is fully backward compatible with the v4 models.

+

+### Other Platforms

+

+This application can be run on Mac & Linux by performing a manual install (see the **Manual Developer Installation** section below for more information). Some features may not be available on non-Windows platforms.

+

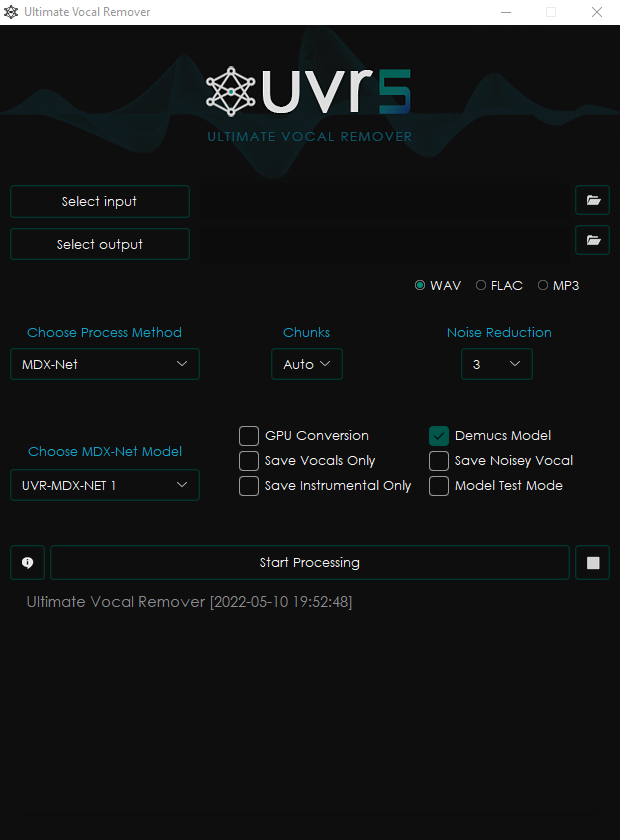

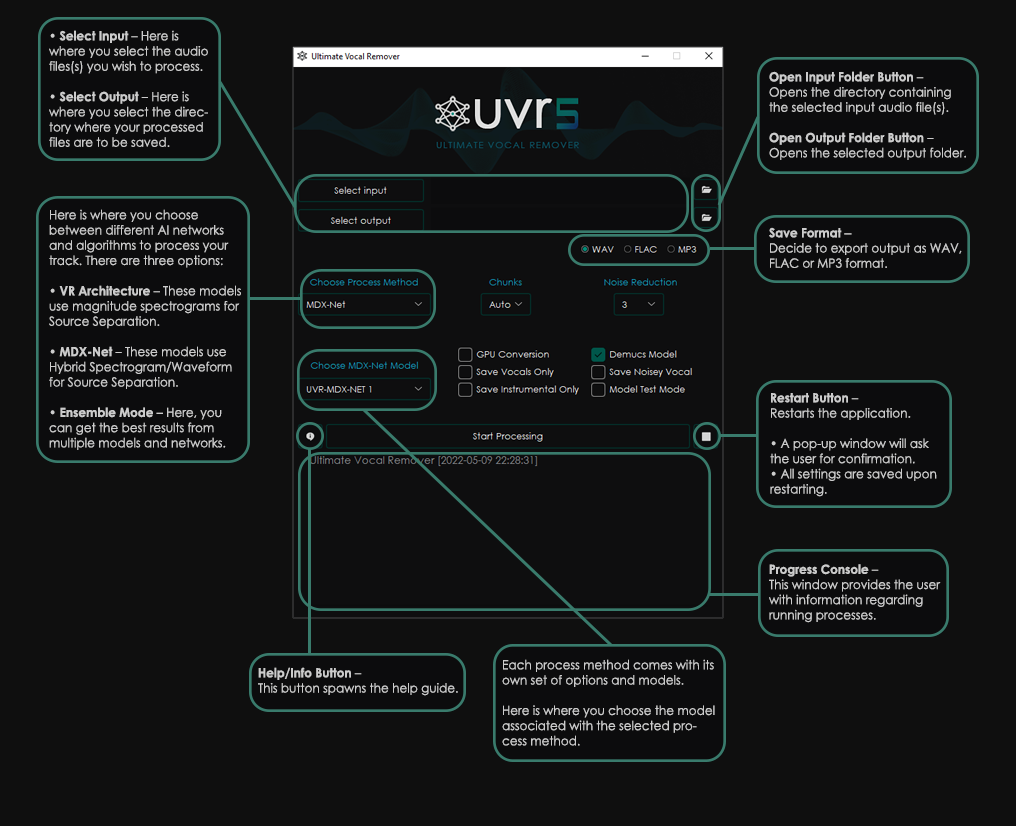

+## Application Manual

+

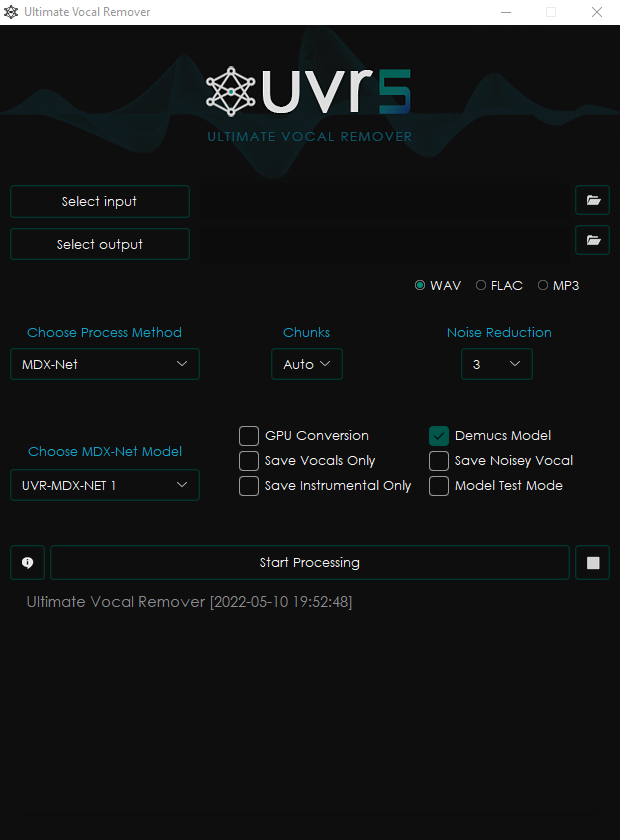

+**General Options**

+

+

[](https://github.com/anjok07/ultimatevocalremovergui/releases/latest)

[](https://github.com/anjok07/ultimatevocalremovergui/releases)

## About

-This application is a GUI version of the vocal remover AI created and posted by GitHub user [tsurumeso](https://github.com/tsurumeso). This version also comes with eight high-performance models trained by us. You can find tsurumeso's original command-line version [here](https://github.com/tsurumeso/vocal-remover).

+This application uses state-of-the-art source searation models to remove vocals from audio files. UVR's core developers trained all of the models provided in this package.

-- **The Developers**

- - [Anjok07](https://github.com/anjok07)- Model collaborator & UVR developer.

- - [aufr33](https://github.com/aufr33) - Model collaborator & fellow UVR developer. This project wouldn't be what it is without your help. Thank you for your continued support!

- - [DilanBoskan](https://github.com/DilanBoskan) - Thank you for helping bring the GUI to life! Your contributions to this project are greatly appreciated.

- - [tsurumeso](https://github.com/tsurumeso) - The engineer who authored the original AI code. Thank you for the hard work and dedication you put into the AI code UVR is built on!

+- **Core Developers**

+ - [Anjok07](https://github.com/anjok07)

+ - [aufr33](https://github.com/aufr33)

+

+## Installation

+

+### Windows Installation

+

+This installation bundle contains the UVR interface, Python, PyTorch, and other dependencies needed to run the application effectively. No prerequisites required.

+

+- Please Note:

+ - This installer is intended for those running Windows 10 or higher.

+ - Application functionality for systems running Windows 7 or lower is not guaranteed.

+ - Application functionality for Intel Pentium & Celeron CPUs systems is not guaranteed.

+

+- Download the UVR installer via one of the following mirrors below:

+ - [pCloud Mirror](https://u.pcloud.link/publink/show?code=XZAX8HVZ03lxQbQtyqBLl07bTPaFPm1jUAbX)

+ - [Google Drive Mirror](https://drive.google.com/file/d/1ALH1WB3WjNnRQoPJFIiJHG9uVqH4U50Q/view?usp=sharing)

+

+- **Optional**

+ - The Model Expansion Pack can be downloaded [here]()

+ - Please navigate to the "Updates" tab within the Help Guide provided in the GUI for instructions on installing the Model Expansion pack.

+ - This version of the GUI is fully backward compatible with the v4 models.

+

+### Other Platforms

+

+This application can be run on Mac & Linux by performing a manual install (see the **Manual Developer Installation** section below for more information). Some features may not be available on non-Windows platforms.

+

+## Application Manual

+

+**General Options**

+

+ +

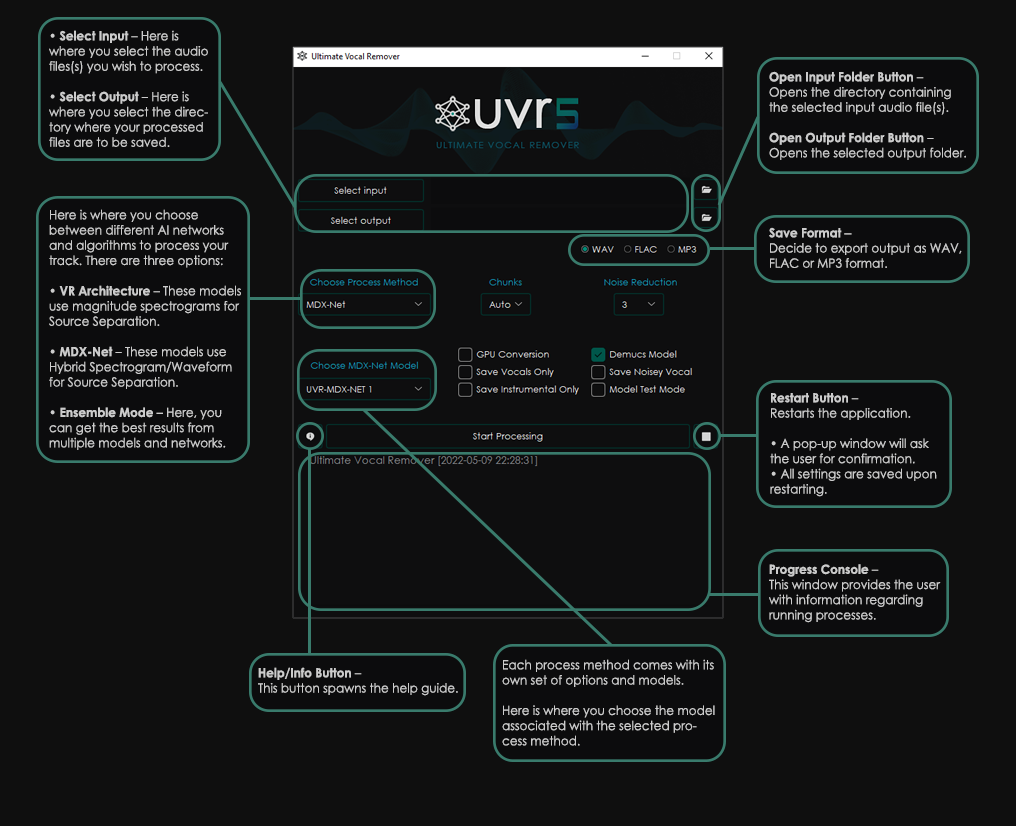

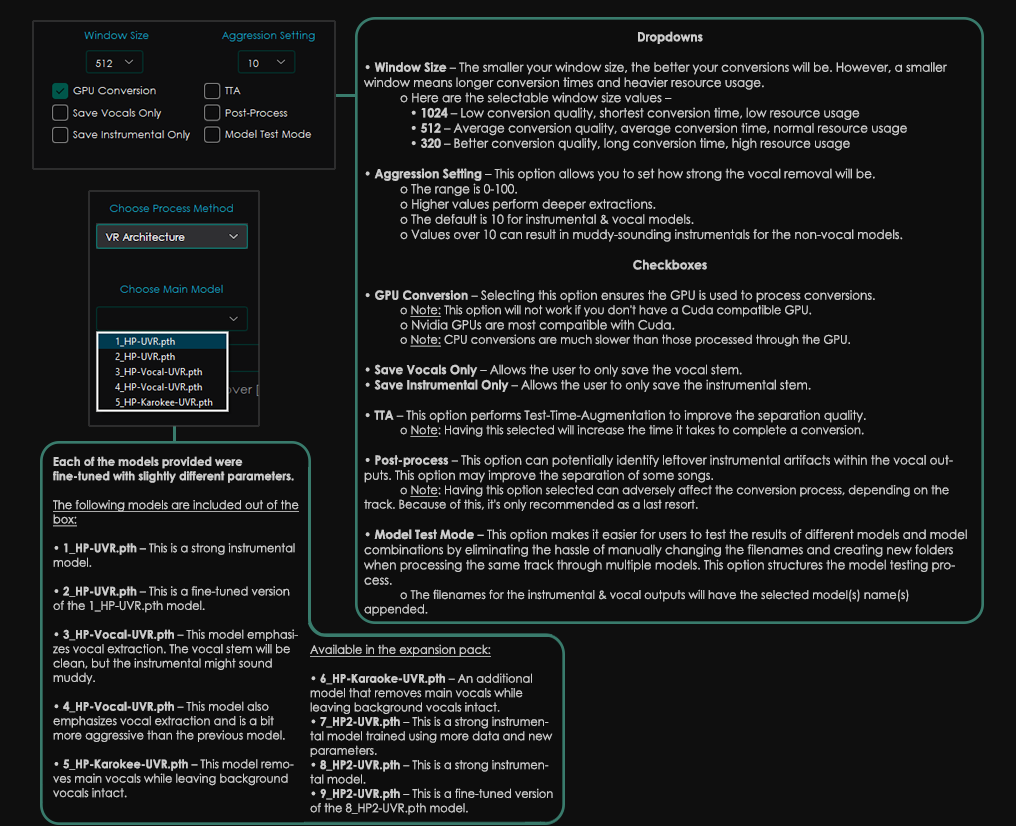

+**VR Architecture Options**

+

+

+

+**VR Architecture Options**

+

+ +

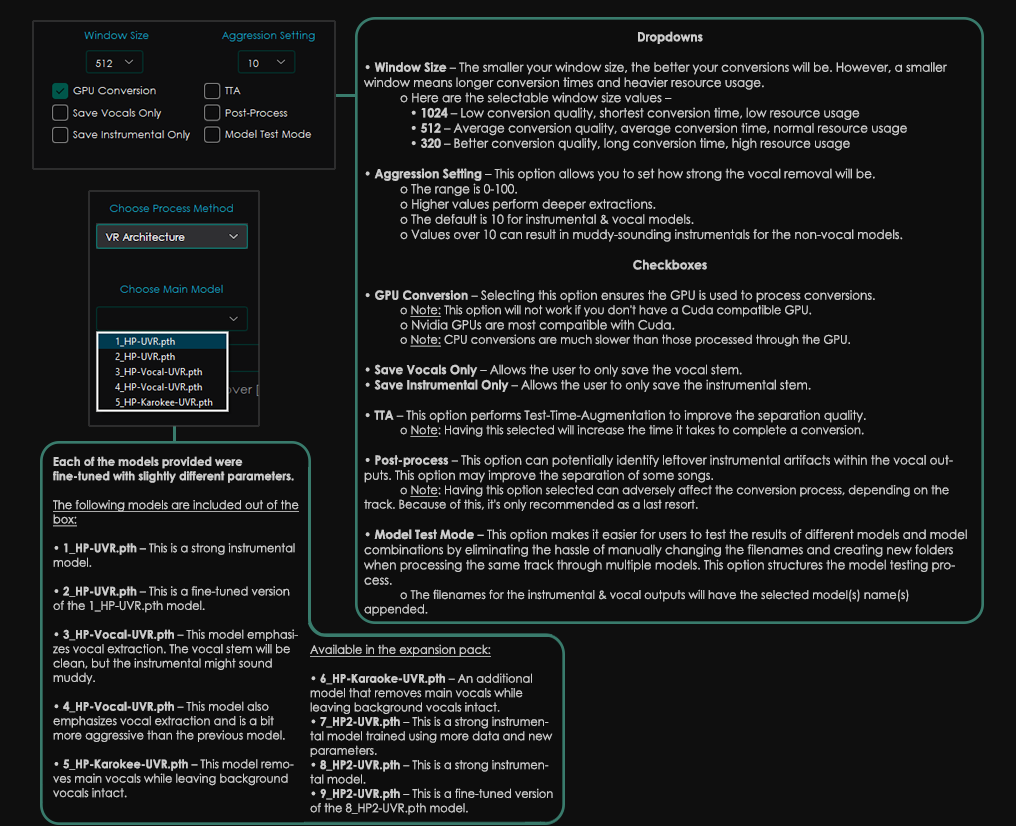

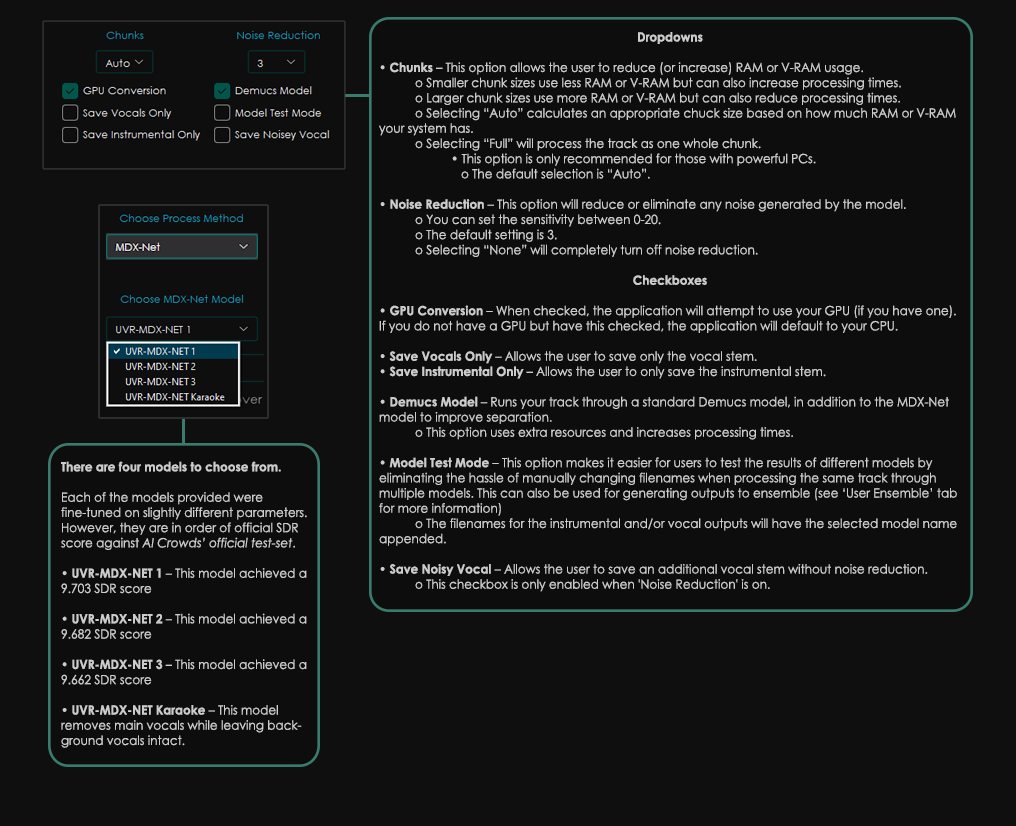

+**MDX-Net Options**

+

+

+

+**MDX-Net Options**

+

+ +

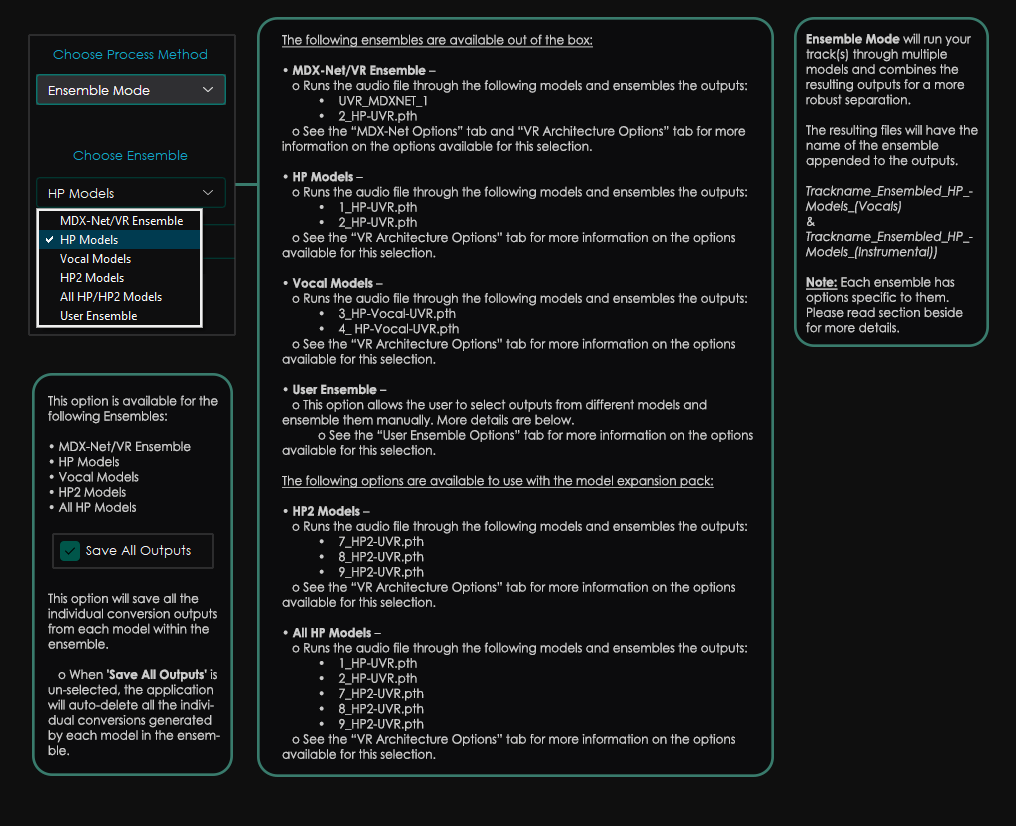

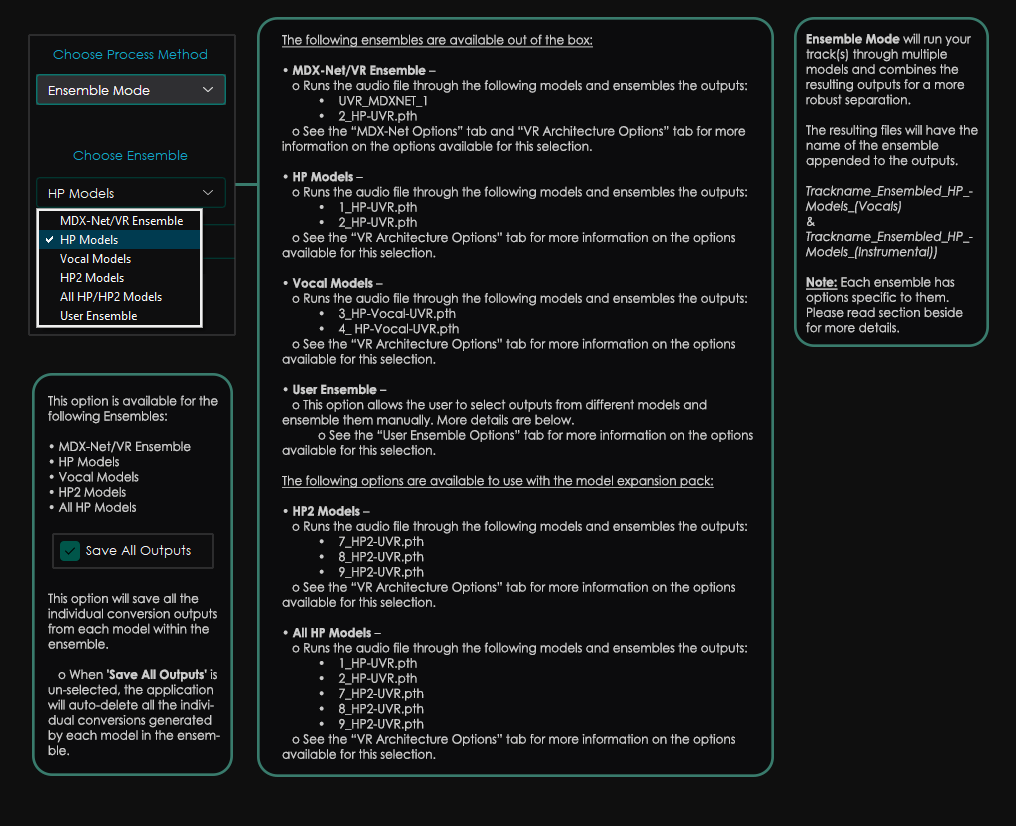

+**Ensemble Options**

+

+

+

+**Ensemble Options**

+

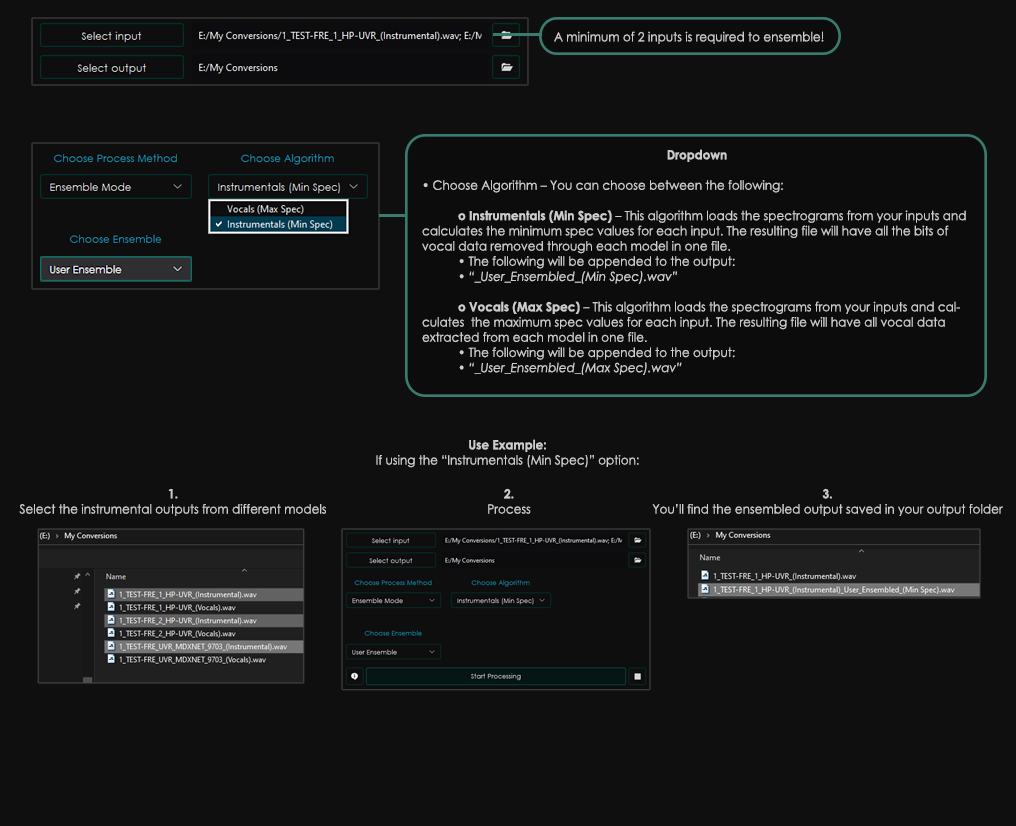

+ +

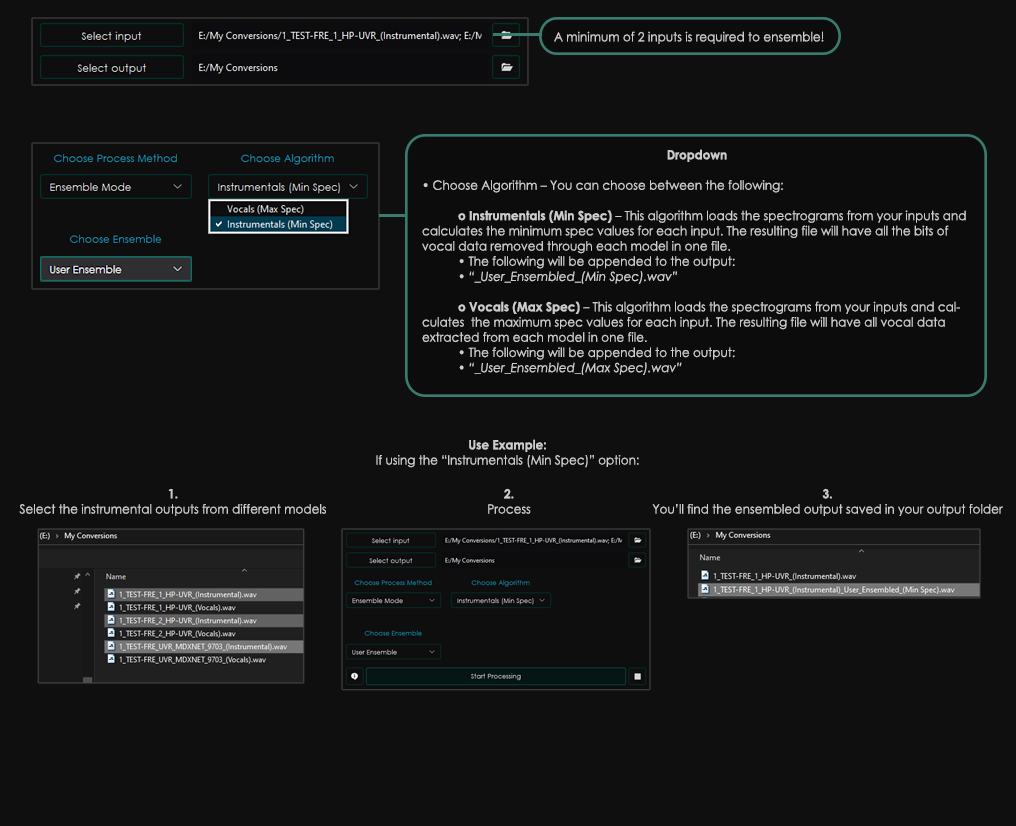

+**User Ensemble**

+

+

+

+**User Ensemble**

+

+ +

+### Other Application Notes

+

+- Nvidia GPUs with at least 8GBs of V-RAM are recommended.

+- This application is only compatible with 64-bit platforms.

+- This application relies on Sox - Sound Exchange for Noise Reduction.

+- This application relies on FFmpeg to process non-wav audio files.

+- The application will automatically remember your settings when closed.

+- Conversion times will significantly depend on your hardware.

+- These models are computationally intensive. Processing times might be slow on older or budget hardware. Please proceed with caution and pay attention to your PC to ensure it doesn't overheat. ***We are not responsible for any hardware damage.***

## Change Log

- **v4 vs. v5**

- The v5 models significantly outperform the v4 models.

- - The extraction's aggressiveness can be adjusted using the "Aggression Setting". The default value of 10 is optimal for most tracks.

+ - The extraction's aggressiveness can be adjusted using the "Aggression Setting." The default value of 10 is optimal for most tracks.

- All v2 and v4 models have been removed.

- - Ensemble Mode added - This allows the user to get the strongest result from each model.

+ - Ensemble Mode added - This allows the user to get the most robust result from each model.

- Stacked models have been entirely removed.

- - Stacked model feature has been replaced by the new aggression setting and model ensembling.

+ The new aggression setting and model ensembling have replaced the stacked model feature.

- The NFFT, HOP_SIZE, and SR values are now set internally.

+ - The MDX-NET AI engine and models have been added.

+ - This is a brand new feature added to the UVR GUI.

+ - 4 MDX-Net models trained by UVR developers are included in this package.

+ - The MDX-Net models provided were trained by the UVR core developers

+ - This network is less resource-intensive but incredibly powerful.

+ - MDX-Net is a Hybrid Waveform/Spectrogram network.

-- **Upcoming v5.2.0 Update**

- - MDX-NET AI engine and model support

+## Troubleshooting

-## Installation

+### Common Issues

-The application was made with Tkinter for cross-platform compatibility, so it should work with Windows, Mac, and Linux systems. However, this application has only been tested on Windows 10 & Linux Ubuntu.

+- If FFmpeg is not installed, the application will throw an error if the user attempts to convert a non-WAV file.

-### Install Required Applications & Packages

+### Issue Reporting

-1. Download & install Python 3.9.8 [here](https://www.python.org/ftp/python/3.9.8/python-3.9.8-amd64.exe) (Windows link)

- - **Note:** Ensure the *"Add Python 3.9 to PATH"* box is checked

-2. Download the Source code zip here - https://github.com/Anjok07/ultimatevocalremovergui/archive/refs/heads/master.zip

-3. Download the models.zip here - https://github.com/Anjok07/ultimatevocalremovergui/releases/download/v5.1.0/models.zip

+Please be as detailed as possible when posting a new issue.

+

+If possible, click the "Help Guide" button to the left of the "Start Processing" button and navigate to the "Error Log" tab for detailed error information that can be provided to us.

+

+## Manual Installation (For Developers)

+

+These instructions are for those installing UVR v5.2.0 **manually** only.

+

+1. Download & install Python 3.9 or lower (but no lower than 3.6) [here](https://www.python.org/downloads/)

+ - **Note:** Ensure the *"Add Python to PATH"* box is checked

+2. Download the Source code zip [here]()

+3. Download the models.zip [here]()

4. Extract the *ultimatevocalremovergui-master* folder within ultimatevocalremovergui-master.zip where ever you wish.

5. Extract the *models* folder within models.zip to the *ultimatevocalremovergui-master* directory.

- - **Note:** At this time, the GUI is hardcoded to run the models included in this package only.

6. Open the command prompt from the ultimatevocalremovergui-master directory and run the following commands, separately -

```

@@ -50,163 +116,36 @@ pip install --no-cache-dir -r requirements.txt

pip install torch==1.9.0+cu111 torchvision==0.10.0+cu111 torchaudio==0.9.0 -f https://download.pytorch.org/whl/torch_stable.html

```

-### FFmpeg

+- FFmpeg

-FFmpeg must be installed and configured for the application to process any track that isn't a *.wav* file. Instructions for installing FFmpeg can be found on YouTube, WikiHow, Reddit, GitHub, and many other sources around the web.

+ - FFmpeg must be installed and configured for the application to process any track that isn't a *.wav* file. Instructions for installing FFmpeg is provided in the "More Info" tab within the Help Guide.

-- **Note:** If you are experiencing any errors when attempting to process any media files, not in the *.wav* format, please ensure FFmpeg is installed & configured correctly.

+- Running the GUI & Models

-### Running the GUI & Models

-

-- Open the file labeled *'VocalRemover.py'*.

- - It's recommended that you create a shortcut for the file labeled *'VocalRemover.py'* to your desktop for easy access.

- - **Note:** If you are unable to open the *'VocalRemover.py'* file, please go to the [**troubleshooting**](https://github.com/Anjok07/ultimatevocalremovergui/tree/beta#troubleshooting) section below.

-- **Note:** All output audio files will be in the *'.wav'* format.

-

-## Option Guide

-

-### Main Checkboxes

-- **GPU Conversion** - Selecting this option ensures the GPU is used to process conversions.

- - **Note:** This option will not work if you don't have a Cuda compatible GPU.

- - Nvidia GPUs are most compatible with Cuda.

- - **Note:** CPU conversions are much slower than those processed through the GPU.

-- **Post-process** - This option can potentially identify leftover instrumental artifacts within the vocal outputs. This option may improve the separation of *some* songs.

- - **Note:** Having this option selected can adversely affect the conversion process, depending on the track. Because of this, it's only recommended as a last resort.

-- **TTA** - This option performs Test-Time-Augmentation to improve the separation quality.

- - **Note:** Having this selected will increase the time it takes to complete a conversion.

-- **Output Image** - Selecting this option will include the spectrograms in *.jpg* format for the instrumental & vocal audio outputs.

-

-### Special Checkboxes

-- **Model Test Mode** - Only selectable when using the "*Single Model*" conversion method. This option makes it easier for users to test the results of different models and model combinations by eliminating the hassle of manually changing the filenames and creating new folders when processing the same track through multiple models. This option structures the model testing process.

- - When *' Model Test Mode'* is selected, the application will auto-generate a new folder in the *' Save to'* path you have chosen.

- - The new auto-generated folder will be named after the model(s) selected.

- - The output audio files will be saved to the auto-generated directory.

- - The filenames for the instrumental & vocal outputs will have the selected model(s) name(s) appended.

-- **Save All Outputs** - Only selectable when using the "*Ensemble Mode*" conversion method. This option will save all of the individual conversion outputs from each model within the ensemble.

- - When *'Save All Outputs'* is un-selected, the application will auto-delete all of the individual conversions generated by each model in the ensemble.

-

-### Additional Options

-

-- **Window Size** - The smaller your window size, the better your conversions will be. However, a smaller window means longer conversion times and heavier resource usage.

- - Here are the selectable window size values -

- - **1024** - Low conversion quality, shortest conversion time, low resource usage

- - **512** - Average conversion quality, average conversion time, normal resource usage

- - **320** - Better conversion quality, long conversion time, high resource usage

-- **Aggression Setting** - This option allows you to set how strong the vocal removal will be.

- - The range is 0-100.

- - Higher values perform deeper extractions.

- - The default is 10 for instrumental & vocal models.

- - Values over 10 can result in muddy-sounding instrumentals for the non-vocal models.

-- **Default Values:**

- - **Window Size** - 512

- - **Aggression Setting** - 10 (optimal setting for all conversions)

-

-### Other Buttons

-

-- **Open Export Directory** - This button will open your 'save to' directory. You will find it to the right of the *'Start Conversion'* button.

-

-## Models Included

-

-All of the models included in the release were trained on large datasets containing a diverse range of music genres and different training parameters.

-

-**Please Note:** Do not change the name of the models provided! The required parameters are specified and appended to the end of the filenames.

-

-- **Model Network Types**

- - **HP2** - The model layers are much larger. However, this makes them resource-heavy.

- - **HP** - The model layers are the standard size for UVR v5.

-

-### Main Models

-

-- **HP2_3BAND_44100_MSB2.pth** - This is a strong instrumental model trained using more data and new parameters.

-- **HP2_4BAND_44100_1.pth** - This is a strong instrumental model.

-- **HP2_4BAND_44100_2.pth** - This is a fine tuned version of the HP2_4BAND_44100_1.pth model.

-- **HP_4BAND_44100_A.pth** - This is a strong instrumental model.

-- **HP_4BAND_44100_B.pth** - This is a fine tuned version of the HP_4BAND_44100_A.pth model.

-- **HP_KAROKEE_4BAND_44100_SN.pth** - This is a model that removes main vocals while leaving background vocals intact.

-- **HP_Vocal_4BAND_44100.pth** - This model emphasizes vocal extraction. The vocal stem will be clean, but the instrumental might sound muddy.

-- **HP_Vocal_AGG_4BAND_44100.pth** - This model also emphasizes vocal extraction and is a bit more aggressive than the previous model.

-

-## Choose Conversion Method

-

-### Single Model

-

-Run your tracks through a single model only. This is the default conversion method.

-

-- **Choose Main Model** - Here is where you choose the main model to perform a deep vocal removal.

- - The *'Model Test Mode'* option makes it easier for users to test different models on given tracks.

-

-### Ensemble Mode

-

-Ensemble Mode will run your track(s) through multiple models and combine the resulting outputs for a more robust separation. Higher level ensembles will have stronger separations, as they use more models.

-

-- **Choose Ensemble** - Here, choose the ensemble you wish to run your track through.

- - **There are 4 ensembles you can choose from:**

- - **HP1 Models** - Level 1 Ensemble

- - **HP2 Models** - Level 2 Ensemble

- - **All HP Models** - Level 3 Ensemble

- - **Vocal Models** - Level 1 Vocal Ensemble

- - A directory is auto-generated with the name of the ensemble. This directory will contain all of the individual outputs generated by the ensemble and auto-delete once the conversions are complete if the *'Save All Outputs'* option is unchecked.

- - When checked, the *'Save All Outputs'* option saves all of the outputs generated by each model in the ensemble.

-

-- **List of models included in each ensemble:**

- - **HP1 Models**

- - HP_4BAND_44100_A

- - HP_4BAND_44100_B

- - **HP2 Models**

- - HP2_4BAND_44100_1

- - HP2_4BAND_44100_2

- - HP2_3BAND_44100_MSB2

- - **All HP Models**

- - HP_4BAND_44100_A

- - HP_4BAND_44100_B

- - HP2_4BAND_44100_1

- - HP2_4BAND_44100_2

- - HP2_3BAND_44100_MSB2

- - **Vocal Models**

- - HP_Vocal_4BAND_44100

- - HP_Vocal_AGG_4BAND_44100

-

-- **Please Note:** Ensemble mode is very resource heavy!

-

-## Other GUI Notes

-

-- The application will automatically remember your *'save to'* path upon closing and reopening until it's changed.

- - **Note:** The last directory accessed within the application will also be remembered.

-- Multiple conversions are supported.

-- The ability to drag & drop audio files to convert has also been added.

-- Conversion times will significantly depend on your hardware.

- - **Note:** This application will *not* be friendly to older or budget hardware. Please proceed with caution! Please pay attention to your PC and make sure it doesn't overheat. ***We are not responsible for any hardware damage.***

-

-## Troubleshooting

-

-### Common Issues

-

-- This application is not compatible with 32-bit versions of Python. Please make sure your version of Python is 64-bit.

-- If FFmpeg is not installed, the application will throw an error if the user attempts to convert a non-WAV file.

-

-### Issue Reporting

-

-Please be as detailed as possible when posting a new issue. Make sure to provide any error outputs and/or screenshots/gif's to give us a clearer understanding of the issue you are experiencing.

-

-If the *'VocalRemover.py'* file won't open *under any circumstances* and all other resources have been exhausted, please do the following -

-

-1. Open the cmd prompt from the ultimatevocalremovergui-master directory

-2. Run the following command -

-```

-python VocalRemover.py

-```

-3. Copy and paste the error output shown in the cmd prompt to the issues center on the GitHub repository.

+ - Open the file labeled *'UVR.py'*.

+ - It's recommended that you create a shortcut for the file labeled *'UVR.py'* to your desktop for easy access.

+ - **Note:** If you are unable to open the *'UVR.py'* file, please go to the **troubleshooting** section below.

+ - **Note:** All output audio files will be in the *'.wav'* format.

## License

The **Ultimate Vocal Remover GUI** code is [MIT-licensed](LICENSE).

-- **Please Note:** For all third-party application developers who wish to use our models, please honor the MIT license by providing credit to UVR and its developers Anjok07, aufr33, & tsurumeso.

+- **Please Note:** For all third-party application developers who wish to use our models, please honor the MIT license by providing credit to UVR and its developers.

+

+## Credits

+

+- [DilanBoskan](https://github.com/DilanBoskan) - Your contributions at the start of this project were essential to the success of UVR. Thank you!

+- [Bas Curtiz](https://www.youtube.com/user/bascurtiz) - Designed the official UVR logo, icon, banner, splash screen, and interface.

+- [tsurumeso](https://github.com/tsurumeso) - Developed the original VR Architecture code.

+- [Kuielab & Woosung Choi](https://github.com/kuielab) - Developed the original MDX-Net AI code.

+- [Adefossez & Demucs](https://github.com/facebookresearch/demucs) - Developed the original MDX-Net AI code.

+- [Hv](https://github.com/NaJeongMo/Colab-for-MDX_B) - Helped implement chunks into the MDX-Net AI code. Thank you!

## Contributing

-- For anyone interested in the ongoing development of **Ultimate Vocal Remover GUI**, please send us a pull request, and we will review it. This project is 100% open-source and free for anyone to use and/or modify as they wish.

-- Please note that we do not maintain or directly support any of tsurumesos AI application code. We only maintain the development and support for the **Ultimate Vocal Remover GUI** and the models provided.

+- For anyone interested in the ongoing development of **Ultimate Vocal Remover GUI**, please send us a pull request, and we will review it. This project is 100% open-source and free for anyone to use and modify as they wish.

+- We only maintain the development and support for the **Ultimate Vocal Remover GUI** and the models provided.

## References

- [1] Takahashi et al., "Multi-scale Multi-band DenseNets for Audio Source Separation", https://arxiv.org/pdf/1706.09588.pdf

diff --git a/UVR.py b/UVR.py

new file mode 100644

index 0000000..6227261

--- /dev/null

+++ b/UVR.py

@@ -0,0 +1,1729 @@

+# GUI modules

+import os

+try:

+ with open(os.path.join(os.getcwd(), 'tmp', 'splash.txt'), 'w') as f:

+ f.write('1')

+except:

+ pass

+import pyperclip

+from gc import freeze

+import tkinter as tk

+from tkinter import *

+from tkinter.tix import *

+import webbrowser

+from tracemalloc import stop

+import lib_v5.sv_ttk

+import tkinter.ttk as ttk

+import tkinter.messagebox

+import tkinter.filedialog

+import tkinter.font

+from tkinterdnd2 import TkinterDnD, DND_FILES # Enable Drag & Drop

+import pyglet,tkinter

+from datetime import datetime

+# Images

+from PIL import Image

+from PIL import ImageTk

+import pickle # Save Data

+# Other Modules

+

+# Pathfinding

+import pathlib

+import sys

+import subprocess

+from collections import defaultdict

+# Used for live text displaying

+import queue

+import threading # Run the algorithm inside a thread

+from subprocess import call

+from pathlib import Path

+import ctypes as ct

+import subprocess # Run python file

+import inference_MDX

+import inference_v5

+import inference_v5_ensemble

+

+try:

+ with open(os.path.join(os.getcwd(), 'tmp', 'splash.txt'), 'w') as f:

+ f.write('1')

+except:

+ pass

+

+# Change the current working directory to the directory

+# this file sits in

+if getattr(sys, 'frozen', False):

+ # If the application is run as a bundle, the PyInstaller bootloader

+ # extends the sys module by a flag frozen=True and sets the app

+ # path into variable _MEIPASS'.

+ base_path = sys._MEIPASS

+else:

+ base_path = os.path.dirname(os.path.abspath(__file__))

+

+os.chdir(base_path) # Change the current working directory to the base path

+

+#Images

+instrumentalModels_dir = os.path.join(base_path, 'models')

+banner_path = os.path.join(base_path, 'img', 'UVR-banner.png')

+efile_path = os.path.join(base_path, 'img', 'file.png')

+stop_path = os.path.join(base_path, 'img', 'stop.png')

+help_path = os.path.join(base_path, 'img', 'help.png')

+gen_opt_path = os.path.join(base_path, 'img', 'gen_opt.png')

+mdx_opt_path = os.path.join(base_path, 'img', 'mdx_opt.png')

+vr_opt_path = os.path.join(base_path, 'img', 'vr_opt.png')

+ense_opt_path = os.path.join(base_path, 'img', 'ense_opt.png')

+user_ens_opt_path = os.path.join(base_path, 'img', 'user_ens_opt.png')

+credits_path = os.path.join(base_path, 'img', 'credits.png')

+

+DEFAULT_DATA = {

+ 'exportPath': '',

+ 'inputPaths': [],

+ 'saveFormat': 'Wav',

+ 'gpu': False,

+ 'postprocess': False,

+ 'tta': False,

+ 'save': True,

+ 'output_image': False,

+ 'window_size': '512',

+ 'agg': 10,

+ 'modelFolder': False,

+ 'modelInstrumentalLabel': '',

+ 'aiModel': 'MDX-Net',

+ 'algo': 'Instrumentals (Min Spec)',

+ 'ensChoose': 'MDX-Net/VR Ensemble',

+ 'useModel': 'instrumental',

+ 'lastDir': None,

+ 'break': False,

+ #MDX-Net

+ 'demucsmodel': True,

+ 'non_red': False,

+ 'noise_reduc': True,

+ 'voc_only': False,

+ 'inst_only': False,

+ 'chunks': 'Auto',

+ 'noisereduc_s': '3',

+ 'mixing': 'default',

+ 'mdxnetModel': 'UVR-MDX-NET 1',

+}

+

+def open_image(path: str, size: tuple = None, keep_aspect: bool = True, rotate: int = 0) -> ImageTk.PhotoImage:

+ """

+ Open the image on the path and apply given settings\n

+ Paramaters:

+ path(str):

+ Absolute path of the image

+ size(tuple):

+ first value - width

+ second value - height

+ keep_aspect(bool):

+ keep aspect ratio of image and resize

+ to maximum possible width and height

+ (maxima are given by size)

+ rotate(int):

+ clockwise rotation of image

+ Returns(ImageTk.PhotoImage):

+ Image of path

+ """

+ img = Image.open(path).convert(mode='RGBA')

+ ratio = img.height/img.width

+ img = img.rotate(angle=-rotate)

+ if size is not None:

+ size = (int(size[0]), int(size[1]))

+ if keep_aspect:

+ img = img.resize((size[0], int(size[0] * ratio)), Image.ANTIALIAS)

+ else:

+ img = img.resize(size, Image.ANTIALIAS)

+ return ImageTk.PhotoImage(img)

+

+def save_data(data):

+ """

+ Saves given data as a .pkl (pickle) file

+

+ Paramters:

+ data(dict):

+ Dictionary containing all the necessary data to save

+ """

+ # Open data file, create it if it does not exist

+ with open('data.pkl', 'wb') as data_file:

+ pickle.dump(data, data_file)

+

+def load_data() -> dict:

+ """

+ Loads saved pkl file and returns the stored data

+

+ Returns(dict):

+ Dictionary containing all the saved data

+ """

+ try:

+ with open('data.pkl', 'rb') as data_file: # Open data file

+ data = pickle.load(data_file)

+

+ return data

+ except (ValueError, FileNotFoundError):

+ # Data File is corrupted or not found so recreate it

+ save_data(data=DEFAULT_DATA)

+

+ return load_data()

+

+def drop(event, accept_mode: str = 'files'):

+ """

+ Drag & Drop verification process

+ """

+ global dnd

+ global dnddir

+

+ path = event.data

+

+ if accept_mode == 'folder':

+ path = path.replace('{', '').replace('}', '')

+ if not os.path.isdir(path):

+ tk.messagebox.showerror(title='Invalid Folder',

+ message='Your given export path is not a valid folder!')

+ return

+ # Set Variables

+ root.exportPath_var.set(path)

+ elif accept_mode == 'files':

+ # Clean path text and set path to the list of paths

+ path = path.replace('{', '')

+ path = path.split('} ')

+ path[-1] = path[-1].replace('}', '')

+ # Set Variables

+ dnd = 'yes'

+ root.inputPaths = path

+ root.update_inputPaths()

+ dnddir = os.path.dirname(path[0])

+ print('dnddir ', str(dnddir))

+ else:

+ # Invalid accept mode

+ return

+

+class ThreadSafeConsole(tk.Text):

+ """

+ Text Widget which is thread safe for tkinter

+ """

+ def __init__(self, master, **options):

+ tk.Text.__init__(self, master, **options)

+ self.queue = queue.Queue()

+ self.update_me()

+

+ def write(self, line):

+ self.queue.put(line)

+

+ def clear(self):

+ self.queue.put(None)

+

+ def update_me(self):

+ self.configure(state=tk.NORMAL)

+ try:

+ while 1:

+ line = self.queue.get_nowait()

+ if line is None:

+ self.delete(1.0, tk.END)

+ else:

+ self.insert(tk.END, str(line))

+ self.see(tk.END)

+ self.update_idletasks()

+ except queue.Empty:

+ pass

+ self.configure(state=tk.DISABLED)

+ self.after(100, self.update_me)

+

+class MainWindow(TkinterDnD.Tk):

+ # --Constants--

+ # Layout

+ IMAGE_HEIGHT = 140

+ FILEPATHS_HEIGHT = 85

+ OPTIONS_HEIGHT = 275

+ CONVERSIONBUTTON_HEIGHT = 35

+ COMMAND_HEIGHT = 200

+ PROGRESS_HEIGHT = 30

+ PADDING = 10

+

+ COL1_ROWS = 11

+ COL2_ROWS = 11

+

+ def __init__(self):

+ # Run the __init__ method on the tk.Tk class

+ super().__init__()

+

+ # Calculate window height

+ height = self.IMAGE_HEIGHT + self.FILEPATHS_HEIGHT + self.OPTIONS_HEIGHT

+ height += self.CONVERSIONBUTTON_HEIGHT + self.COMMAND_HEIGHT + self.PROGRESS_HEIGHT

+ height += self.PADDING * 5 # Padding

+

+ # --Window Settings--

+ self.title('Ultimate Vocal Remover')

+ # Set Geometry and Center Window

+ self.geometry('{width}x{height}+{xpad}+{ypad}'.format(

+ width=620,

+ height=height,

+ xpad=int(self.winfo_screenwidth()/2 - 635/2),

+ ypad=int(self.winfo_screenheight()/2 - height/2 - 30)))

+ self.configure(bg='#0e0e0f') # Set background color to #0c0c0d

+ self.protocol("WM_DELETE_WINDOW", self.save_values)

+ self.resizable(False, False)

+ self.update()

+

+ # --Variables--

+ self.logo_img = open_image(path=banner_path,

+ size=(self.winfo_width(), 9999))

+ self.efile_img = open_image(path=efile_path,

+ size=(20, 20))

+ self.stop_img = open_image(path=stop_path,

+ size=(20, 20))

+ self.help_img = open_image(path=help_path,

+ size=(20, 20))

+ self.gen_opt_img = open_image(path=gen_opt_path,

+ size=(1016, 826))

+ self.mdx_opt_img = open_image(path=mdx_opt_path,

+ size=(1016, 826))

+ self.vr_opt_img = open_image(path=vr_opt_path,

+ size=(1016, 826))

+ self.ense_opt_img = open_image(path=ense_opt_path,

+ size=(1016, 826))

+ self.user_ens_opt_img = open_image(path=user_ens_opt_path,

+ size=(1016, 826))

+ self.credits_img = open_image(path=credits_path,

+ size=(100, 100))

+

+ self.instrumentalLabel_to_path = defaultdict(lambda: '')

+ self.lastInstrumentalModels = []

+

+ # -Tkinter Value Holders-

+ data = load_data()

+ # Paths

+ self.inputPaths = data['inputPaths']

+ self.inputPathop_var = tk.StringVar(value=data['inputPaths'])

+ self.exportPath_var = tk.StringVar(value=data['exportPath'])

+ self.saveFormat_var = tk.StringVar(value=data['saveFormat'])

+

+ # Processing Options

+ self.gpuConversion_var = tk.BooleanVar(value=data['gpu'])

+ self.postprocessing_var = tk.BooleanVar(value=data['postprocess'])

+ self.tta_var = tk.BooleanVar(value=data['tta'])

+ self.save_var = tk.BooleanVar(value=data['save'])

+ self.outputImage_var = tk.BooleanVar(value=data['output_image'])

+ # MDX-NET Specific Processing Options

+ self.demucsmodel_var = tk.BooleanVar(value=data['demucsmodel'])

+ self.non_red_var = tk.BooleanVar(value=data['non_red'])

+ self.noisereduc_var = tk.BooleanVar(value=data['noise_reduc'])

+ self.chunks_var = tk.StringVar(value=data['chunks'])

+ self.noisereduc_s_var = tk.StringVar(value=data['noisereduc_s'])

+ self.mixing_var = tk.StringVar(value=data['mixing']) #dropdown

+ # Models

+ self.instrumentalModel_var = tk.StringVar(value=data['modelInstrumentalLabel'])

+ # Model Test Mode

+ self.modelFolder_var = tk.BooleanVar(value=data['modelFolder'])

+ # Constants

+ self.winSize_var = tk.StringVar(value=data['window_size'])

+ self.agg_var = tk.StringVar(value=data['agg'])

+ # Instrumental or Vocal Only

+ self.voc_only_var = tk.BooleanVar(value=data['voc_only'])

+ self.inst_only_var = tk.BooleanVar(value=data['inst_only'])

+ # Choose Conversion Method

+ self.aiModel_var = tk.StringVar(value=data['aiModel'])

+ self.last_aiModel = self.aiModel_var.get()

+ # Choose Conversion Method

+ self.algo_var = tk.StringVar(value=data['algo'])

+ self.last_algo = self.aiModel_var.get()

+ # Choose Ensemble

+ self.ensChoose_var = tk.StringVar(value=data['ensChoose'])

+ self.last_ensChoose = self.ensChoose_var.get()

+ # Choose MDX-NET Model

+ self.mdxnetModel_var = tk.StringVar(value=data['mdxnetModel'])

+ self.last_mdxnetModel = self.mdxnetModel_var.get()

+ # Other

+ self.inputPathsEntry_var = tk.StringVar(value='')

+ self.lastDir = data['lastDir'] # nopep8

+ self.progress_var = tk.IntVar(value=0)

+ # Font

+ pyglet.font.add_file('lib_v5/fonts/centurygothic/GOTHIC.TTF')

+ self.font = tk.font.Font(family='Century Gothic', size=10)

+ self.fontRadio = tk.font.Font(family='Century Gothic', size=8)

+ # --Widgets--

+ self.create_widgets()

+ self.configure_widgets()

+ self.bind_widgets()

+ self.place_widgets()

+

+ self.update_available_models()

+ self.update_states()

+ self.update_loop()

+

+

+

+

+ # -Widget Methods-

+ def create_widgets(self):

+ """Create window widgets"""

+ self.title_Label = tk.Label(master=self, bg='#0e0e0f',

+ image=self.logo_img, compound=tk.TOP)

+ self.filePaths_Frame = ttk.Frame(master=self)

+ self.fill_filePaths_Frame()

+

+ self.options_Frame = ttk.Frame(master=self)

+ self.fill_options_Frame()

+

+ self.conversion_Button = ttk.Button(master=self,

+ text='Start Processing',

+ command=self.start_conversion)

+ self.stop_Button = ttk.Button(master=self,

+ image=self.stop_img,

+ command=self.restart)

+ self.help_Button = ttk.Button(master=self,

+ image=self.help_img,

+ command=self.help)

+

+ #ttk.Button(win, text= "Open", command= open_popup).pack()

+

+ self.efile_e_Button = ttk.Button(master=self,

+ image=self.efile_img,

+ command=self.open_exportPath_filedialog)

+

+ self.efile_i_Button = ttk.Button(master=self,

+ image=self.efile_img,

+ command=self.open_inputPath_filedialog)

+

+ self.progressbar = ttk.Progressbar(master=self, variable=self.progress_var)

+

+ self.command_Text = ThreadSafeConsole(master=self,

+ background='#0e0e0f',fg='#898b8e', font=('Century Gothic', 11),

+ borderwidth=0,)

+

+ self.command_Text.write(f'Ultimate Vocal Remover [{datetime.now().strftime("%Y-%m-%d %H:%M:%S")}]\n')

+

+

+ def configure_widgets(self):

+ """Change widget styling and appearance"""

+

+ #ttk.Style().configure('TCheckbutton', background='#0e0e0f',

+ # font=self.font, foreground='#d4d4d4')

+ #ttk.Style().configure('TRadiobutton', background='#0e0e0f',

+ # font=("Century Gothic", "8", "bold"), foreground='#d4d4d4')

+ #ttk.Style().configure('T', font=self.font, foreground='#d4d4d4')

+

+ #s = ttk.Style()

+ #s.configure('TButton', background='blue', foreground='black', font=('Century Gothic', '9', 'bold'), relief="groove")

+

+

+ def bind_widgets(self):

+ """Bind widgets to the drag & drop mechanic"""

+ self.filePaths_musicFile_Button.drop_target_register(DND_FILES)

+ self.filePaths_musicFile_Entry.drop_target_register(DND_FILES)

+ self.filePaths_saveTo_Button.drop_target_register(DND_FILES)

+ self.filePaths_saveTo_Entry.drop_target_register(DND_FILES)

+ self.filePaths_musicFile_Button.dnd_bind('<>',

+ lambda e: drop(e, accept_mode='files'))

+ self.filePaths_musicFile_Entry.dnd_bind('<>',

+ lambda e: drop(e, accept_mode='files'))

+ self.filePaths_saveTo_Button.dnd_bind('<>',

+ lambda e: drop(e, accept_mode='folder'))

+ self.filePaths_saveTo_Entry.dnd_bind('<>',

+ lambda e: drop(e, accept_mode='folder'))

+

+ def place_widgets(self):

+ """Place main widgets"""

+ self.title_Label.place(x=-2, y=-2)

+ self.filePaths_Frame.place(x=10, y=155, width=-20, height=self.FILEPATHS_HEIGHT,

+ relx=0, rely=0, relwidth=1, relheight=0)

+ self.options_Frame.place(x=10, y=250, width=-50, height=self.OPTIONS_HEIGHT,

+ relx=0, rely=0, relwidth=1, relheight=0)

+ self.conversion_Button.place(x=50, y=self.IMAGE_HEIGHT + self.FILEPATHS_HEIGHT + self.OPTIONS_HEIGHT + self.PADDING*2, width=-60 - 40, height=self.CONVERSIONBUTTON_HEIGHT,

+ relx=0, rely=0, relwidth=1, relheight=0)

+ self.efile_e_Button.place(x=-45, y=200, width=35, height=30,

+ relx=1, rely=0, relwidth=0, relheight=0)

+ self.efile_i_Button.place(x=-45, y=160, width=35, height=30,

+ relx=1, rely=0, relwidth=0, relheight=0)

+

+ self.stop_Button.place(x=-10 - 35, y=self.IMAGE_HEIGHT + self.FILEPATHS_HEIGHT + self.OPTIONS_HEIGHT + self.PADDING*2, width=35, height=self.CONVERSIONBUTTON_HEIGHT,

+ relx=1, rely=0, relwidth=0, relheight=0)

+ self.help_Button.place(x=-10 - 600, y=self.IMAGE_HEIGHT + self.FILEPATHS_HEIGHT + self.OPTIONS_HEIGHT + self.PADDING*2, width=35, height=self.CONVERSIONBUTTON_HEIGHT,

+ relx=1, rely=0, relwidth=0, relheight=0)

+ self.command_Text.place(x=25, y=self.IMAGE_HEIGHT + self.FILEPATHS_HEIGHT + self.OPTIONS_HEIGHT + self.CONVERSIONBUTTON_HEIGHT + self.PADDING*3, width=-30, height=self.COMMAND_HEIGHT,

+ relx=0, rely=0, relwidth=1, relheight=0)

+ self.progressbar.place(x=25, y=self.IMAGE_HEIGHT + self.FILEPATHS_HEIGHT + self.OPTIONS_HEIGHT + self.CONVERSIONBUTTON_HEIGHT + self.COMMAND_HEIGHT + self.PADDING*4, width=-50, height=self.PROGRESS_HEIGHT,

+ relx=0, rely=0, relwidth=1, relheight=0)

+

+ def fill_filePaths_Frame(self):

+ """Fill Frame with neccessary widgets"""

+ # -Create Widgets-

+ # Save To Option

+ # Select Music Files Option

+

+ # Save To Option

+ self.filePaths_saveTo_Button = ttk.Button(master=self.filePaths_Frame,

+ text='Select output',

+ command=self.open_export_filedialog)

+ self.filePaths_saveTo_Entry = ttk.Entry(master=self.filePaths_Frame,

+

+ textvariable=self.exportPath_var,

+ state=tk.DISABLED

+ )

+ # Select Music Files Option

+ self.filePaths_musicFile_Button = ttk.Button(master=self.filePaths_Frame,

+ text='Select input',

+ command=self.open_file_filedialog)

+ self.filePaths_musicFile_Entry = ttk.Entry(master=self.filePaths_Frame,

+ textvariable=self.inputPathsEntry_var,

+ state=tk.DISABLED

+ )

+

+

+ # -Place Widgets-

+

+ # Select Music Files Option

+ self.filePaths_musicFile_Button.place(x=0, y=5, width=0, height=-10,

+ relx=0, rely=0, relwidth=0.3, relheight=0.5)

+ self.filePaths_musicFile_Entry.place(x=10, y=2.5, width=-50, height=-5,

+ relx=0.3, rely=0, relwidth=0.7, relheight=0.5)

+

+ # Save To Option

+ self.filePaths_saveTo_Button.place(x=0, y=5, width=0, height=-10,

+ relx=0, rely=0.5, relwidth=0.3, relheight=0.5)

+ self.filePaths_saveTo_Entry.place(x=10, y=2.5, width=-50, height=-5,

+ relx=0.3, rely=0.5, relwidth=0.7, relheight=0.5)

+

+

+ def fill_options_Frame(self):

+ """Fill Frame with neccessary widgets"""

+ # -Create Widgets-

+

+

+ # Save as wav

+ self.options_wav_Radiobutton = ttk.Radiobutton(master=self.options_Frame,

+ text='WAV',

+ variable=self.saveFormat_var,

+ value='Wav'

+ )

+

+ # Save as flac

+ self.options_flac_Radiobutton = ttk.Radiobutton(master=self.options_Frame,

+ text='FLAC',

+ variable=self.saveFormat_var,

+ value='Flac'

+ )

+

+ # Save as mp3

+ self.options_mpThree_Radiobutton = ttk.Radiobutton(master=self.options_Frame,

+ text='MP3',

+ variable=self.saveFormat_var,

+ value='Mp3',

+ )

+

+ # -Column 1-

+

+ # Choose Conversion Method

+ self.options_aiModel_Label = tk.Label(master=self.options_Frame,

+ text='Choose Process Method', anchor=tk.CENTER,

+ background='#0e0e0f', font=self.font, foreground='#13a4c9')

+ self.options_aiModel_Optionmenu = ttk.OptionMenu(self.options_Frame,

+ self.aiModel_var,

+ None, 'VR Architecture', 'MDX-Net', 'Ensemble Mode')

+ # Choose Instrumental Model

+ self.options_instrumentalModel_Label = tk.Label(master=self.options_Frame,

+ text='Choose Main Model',

+ background='#0e0e0f', font=self.font, foreground='#13a4c9')

+ self.options_instrumentalModel_Optionmenu = ttk.OptionMenu(self.options_Frame,

+ self.instrumentalModel_var)

+ # Choose MDX-Net Model

+ self.options_mdxnetModel_Label = tk.Label(master=self.options_Frame,

+ text='Choose MDX-Net Model', anchor=tk.CENTER,

+ background='#0e0e0f', font=self.font, foreground='#13a4c9')

+

+ self.options_mdxnetModel_Optionmenu = ttk.OptionMenu(self.options_Frame,

+ self.mdxnetModel_var,

+ None, 'UVR-MDX-NET 1', 'UVR-MDX-NET 2', 'UVR-MDX-NET 3', 'UVR-MDX-NET Karaoke')

+ # Ensemble Mode

+ self.options_ensChoose_Label = tk.Label(master=self.options_Frame,

+ text='Choose Ensemble', anchor=tk.CENTER,

+ background='#0e0e0f', font=self.font, foreground='#13a4c9')

+ self.options_ensChoose_Optionmenu = ttk.OptionMenu(self.options_Frame,

+ self.ensChoose_var,

+ None, 'MDX-Net/VR Ensemble', 'HP Models', 'Vocal Models', 'HP2 Models', 'All HP/HP2 Models', 'User Ensemble')

+

+ # Choose Agorithim

+ self.options_algo_Label = tk.Label(master=self.options_Frame,

+ text='Choose Algorithm', anchor=tk.CENTER,

+ background='#0e0e0f', font=self.font, foreground='#13a4c9')

+ self.options_algo_Optionmenu = ttk.OptionMenu(self.options_Frame,

+ self.algo_var,

+ None, 'Vocals (Max Spec)', 'Instrumentals (Min Spec)')#, 'Invert (Normal)', 'Invert (Spectral)')

+

+

+ # -Column 2-

+

+ # WINDOW SIZE

+ self.options_winSize_Label = tk.Label(master=self.options_Frame,

+ text='Window Size', anchor=tk.CENTER,

+ background='#0e0e0f', font=self.font, foreground='#13a4c9')

+ self.options_winSize_Optionmenu = ttk.OptionMenu(self.options_Frame,

+ self.winSize_var,

+ None, '320', '512','1024')

+ # MDX-chunks

+ self.options_chunks_Label = tk.Label(master=self.options_Frame,

+ text='Chunks',

+ background='#0e0e0f', font=self.font, foreground='#13a4c9')

+ self.options_chunks_Optionmenu = ttk.OptionMenu(self.options_Frame,

+ self.chunks_var,

+ None, 'Auto', '1', '5', '10', '15', '20',

+ '25', '30', '35', '40', '45', '50',

+ '55', '60', '65', '70', '75', '80',

+ '85', '90', '95', 'Full')

+

+ #Checkboxes

+ # GPU Selection

+ self.options_gpu_Checkbutton = ttk.Checkbutton(master=self.options_Frame,

+ text='GPU Conversion',

+ variable=self.gpuConversion_var,

+ )

+

+ # Vocal Only

+ self.options_voc_only_Checkbutton = ttk.Checkbutton(master=self.options_Frame,

+ text='Save Vocals Only',

+ variable=self.voc_only_var,

+ )

+ # Instrumental Only

+ self.options_inst_only_Checkbutton = ttk.Checkbutton(master=self.options_Frame,

+ text='Save Instrumental Only',

+ variable=self.inst_only_var,

+ )

+ # TTA

+ self.options_tta_Checkbutton = ttk.Checkbutton(master=self.options_Frame,

+ text='TTA',

+ variable=self.tta_var,

+ )

+

+ # MDX-Auto-Chunk

+ self.options_non_red_Checkbutton = ttk.Checkbutton(master=self.options_Frame,

+ text='Save Noisey Vocal',

+ variable=self.non_red_var,

+ )

+

+ # Postprocessing

+ self.options_post_Checkbutton = ttk.Checkbutton(master=self.options_Frame,

+ text='Post-Process',

+ variable=self.postprocessing_var,

+ )

+

+ # -Column 3-

+

+ # AGG

+ self.options_agg_Label = tk.Label(master=self.options_Frame,

+ text='Aggression Setting',

+ background='#0e0e0f', font=self.font, foreground='#13a4c9')

+ self.options_agg_Optionmenu = ttk.OptionMenu(self.options_Frame,

+ self.agg_var,

+ None, '1', '2', '3', '4', '5',

+ '6', '7', '8', '9', '10', '11',

+ '12', '13', '14', '15', '16', '17',

+ '18', '19', '20')

+

+ # MDX-noisereduc_s

+ self.options_noisereduc_s_Label = tk.Label(master=self.options_Frame,

+ text='Noise Reduction',

+ background='#0e0e0f', font=self.font, foreground='#13a4c9')

+ self.options_noisereduc_s_Optionmenu = ttk.OptionMenu(self.options_Frame,

+ self.noisereduc_s_var,

+ None, 'None', '0', '1', '2', '3', '4', '5',

+ '6', '7', '8', '9', '10', '11',

+ '12', '13', '14', '15', '16', '17',

+ '18', '19', '20')

+

+

+ # Save Image

+ self.options_image_Checkbutton = ttk.Checkbutton(master=self.options_Frame,

+ text='Output Image',

+ variable=self.outputImage_var,

+ )

+

+ # MDX-Enable Demucs Model

+ self.options_demucsmodel_Checkbutton = ttk.Checkbutton(master=self.options_Frame,

+ text='Demucs Model',

+ variable=self.demucsmodel_var,

+ )

+

+ # MDX-Noise Reduction

+ self.options_noisereduc_Checkbutton = ttk.Checkbutton(master=self.options_Frame,

+ text='Noise Reduction',

+ variable=self.noisereduc_var,

+ )

+

+ # Ensemble Save Ensemble Outputs

+ self.options_save_Checkbutton = ttk.Checkbutton(master=self.options_Frame,

+ text='Save All Outputs',

+ variable=self.save_var,

+ )

+

+ # Model Test Mode

+ self.options_modelFolder_Checkbutton = ttk.Checkbutton(master=self.options_Frame,

+ text='Model Test Mode',

+ variable=self.modelFolder_var,

+ )

+

+ # -Place Widgets-

+

+ # -Column 0-

+

+ # Save as

+ self.options_wav_Radiobutton.place(x=400, y=-5, width=0, height=6,

+ relx=0, rely=0/self.COL2_ROWS, relwidth=1/3, relheight=1/self.COL2_ROWS)

+ self.options_flac_Radiobutton.place(x=271, y=-5, width=0, height=6,

+ relx=1/3, rely=0/self.COL2_ROWS, relwidth=1/3, relheight=1/self.COL2_ROWS)

+ self.options_mpThree_Radiobutton.place(x=143, y=-5, width=0, height=6,

+ relx=2/3, rely=0/self.COL2_ROWS, relwidth=1/3, relheight=1/self.COL2_ROWS)

+

+ # -Column 1-

+

+ # Choose Conversion Method

+ self.options_aiModel_Label.place(x=0, y=0, width=0, height=-10,

+ relx=0, rely=2/self.COL1_ROWS, relwidth=1/3, relheight=1/self.COL1_ROWS)

+ self.options_aiModel_Optionmenu.place(x=0, y=-2, width=0, height=7,

+ relx=0, rely=3/self.COL1_ROWS, relwidth=1/3, relheight=1/self.COL1_ROWS)

+ # Choose Main Model

+ self.options_instrumentalModel_Label.place(x=0, y=19, width=0, height=-10,

+ relx=0, rely=6/self.COL1_ROWS, relwidth=1/3, relheight=1/self.COL1_ROWS)

+ self.options_instrumentalModel_Optionmenu.place(x=0, y=19, width=0, height=7,

+ relx=0, rely=7/self.COL1_ROWS, relwidth=1/3, relheight=1/self.COL1_ROWS)

+ # Choose MDX-Net Model

+ self.options_mdxnetModel_Label.place(x=0, y=19, width=0, height=-10,

+ relx=0, rely=6/self.COL1_ROWS, relwidth=1/3, relheight=1/self.COL1_ROWS)

+ self.options_mdxnetModel_Optionmenu.place(x=0, y=19, width=0, height=7,

+ relx=0, rely=7/self.COL1_ROWS, relwidth=1/3, relheight=1/self.COL1_ROWS)

+ # Choose Ensemble

+ self.options_ensChoose_Label.place(x=0, y=19, width=0, height=-10,

+ relx=0, rely=6/self.COL1_ROWS, relwidth=1/3, relheight=1/self.COL1_ROWS)

+ self.options_ensChoose_Optionmenu.place(x=0, y=19, width=0, height=7,

+ relx=0, rely=7/self.COL1_ROWS, relwidth=1/3, relheight=1/self.COL1_ROWS)

+

+ # Choose Algorithm

+ self.options_algo_Label.place(x=20, y=0, width=0, height=-10,

+ relx=1/3, rely=2/self.COL1_ROWS, relwidth=1/3, relheight=1/self.COL1_ROWS)

+ self.options_algo_Optionmenu.place(x=12, y=-2, width=0, height=7,

+ relx=1/3, rely=3/self.COL1_ROWS, relwidth=1/3, relheight=1/self.COL1_ROWS)

+

+ # -Column 2-

+

+ # WINDOW

+ self.options_winSize_Label.place(x=13, y=0, width=0, height=-10,

+ relx=1/3, rely=2/self.COL2_ROWS, relwidth=1/3, relheight=1/self.COL2_ROWS)

+ self.options_winSize_Optionmenu.place(x=71, y=-2, width=-118, height=7,

+ relx=1/3, rely=3/self.COL2_ROWS, relwidth=1/3, relheight=1/self.COL2_ROWS)

+ #---MDX-Net Specific---

+ # MDX-chunks

+ self.options_chunks_Label.place(x=12, y=0, width=0, height=-10,

+ relx=1/3, rely=2/self.COL2_ROWS, relwidth=1/3, relheight=1/self.COL2_ROWS)

+ self.options_chunks_Optionmenu.place(x=71, y=-2, width=-118, height=7,

+ relx=1/3, rely=3/self.COL2_ROWS, relwidth=1/3, relheight=1/self.COL2_ROWS)

+ #Checkboxes

+

+ #GPU Conversion

+ self.options_gpu_Checkbutton.place(x=35, y=21, width=0, height=5,

+ relx=1/3, rely=5/self.COL2_ROWS, relwidth=1/3, relheight=1/self.COL2_ROWS)

+ #Vocals Only

+ self.options_voc_only_Checkbutton.place(x=35, y=21, width=0, height=5,

+ relx=1/3, rely=6/self.COL2_ROWS, relwidth=1/3, relheight=1/self.COL2_ROWS)

+ #Instrumental Only

+ self.options_inst_only_Checkbutton.place(x=35, y=21, width=0, height=5,

+ relx=1/3, rely=7/self.COL2_ROWS, relwidth=1/3, relheight=1/self.COL2_ROWS)

+

+ # TTA

+ self.options_tta_Checkbutton.place(x=35, y=21, width=0, height=5,

+ relx=2/3, rely=5/self.COL2_ROWS, relwidth=1/3, relheight=1/self.COL2_ROWS)

+ # MDX-Keep Non_Reduced Vocal

+ self.options_non_red_Checkbutton.place(x=35, y=21, width=0, height=5,

+ relx=2/3, rely=6/self.COL2_ROWS, relwidth=1/3, relheight=1/self.COL2_ROWS)

+

+ # -Column 3-

+

+ # AGG

+ self.options_agg_Label.place(x=15, y=0, width=0, height=-10,

+ relx=2/3, rely=2/self.COL2_ROWS, relwidth=1/3, relheight=1/self.COL2_ROWS)

+ self.options_agg_Optionmenu.place(x=71, y=-2, width=-118, height=7,

+ relx=2/3, rely=3/self.COL2_ROWS, relwidth=1/3, relheight=1/self.COL2_ROWS)

+ # MDX-noisereduc_s

+ self.options_noisereduc_s_Label.place(x=15, y=0, width=0, height=-10,

+ relx=2/3, rely=2/self.COL2_ROWS, relwidth=1/3, relheight=1/self.COL2_ROWS)

+ self.options_noisereduc_s_Optionmenu.place(x=71, y=-2, width=-118, height=7,

+ relx=2/3, rely=3/self.COL2_ROWS, relwidth=1/3, relheight=1/self.COL2_ROWS)

+ #Checkboxes

+ #---MDX-Net Specific---

+ # MDX-demucs Model

+ self.options_demucsmodel_Checkbutton.place(x=35, y=21, width=0, height=5,

+ relx=2/3, rely=5/self.COL2_ROWS, relwidth=1/3, relheight=1/self.COL2_ROWS)

+

+ #---VR Architecture Specific---

+ #Post-Process

+ self.options_post_Checkbutton.place(x=35, y=21, width=0, height=5,

+ relx=2/3, rely=6/self.COL2_ROWS, relwidth=1/3, relheight=1/self.COL2_ROWS)

+ #Save Image

+ # self.options_image_Checkbutton.place(x=35, y=21, width=0, height=5,

+ # relx=2/3, rely=5/self.COL2_ROWS, relwidth=1/3, relheight=1/self.COL2_ROWS)

+ #---Ensemble Specific---

+ #Ensemble Save Outputs

+ self.options_save_Checkbutton.place(x=35, y=21, width=0, height=5,

+ relx=2/3, rely=7/self.COL2_ROWS, relwidth=1/3, relheight=1/self.COL2_ROWS)

+ #---MDX-Net & VR Architecture Specific---

+ #Model Test Mode

+ self.options_modelFolder_Checkbutton.place(x=35, y=21, width=0, height=5,

+ relx=2/3, rely=7/self.COL2_ROWS, relwidth=1/3, relheight=1/self.COL2_ROWS)

+

+ # Change States

+ self.aiModel_var.trace_add('write',

+ lambda *args: self.deselect_models())

+ self.ensChoose_var.trace_add('write',

+ lambda *args: self.update_states())

+

+ self.inst_only_var.trace_add('write',

+ lambda *args: self.update_states())

+

+ self.voc_only_var.trace_add('write',

+ lambda *args: self.update_states())

+ self.noisereduc_s_var.trace_add('write',

+ lambda *args: self.update_states())

+ self.non_red_var.trace_add('write',

+ lambda *args: self.update_states())

+

+ # Opening filedialogs

+ def open_file_filedialog(self):

+ """Make user select music files"""

+ global dnd

+ global nondnd

+

+ if self.lastDir is not None:

+ if not os.path.isdir(self.lastDir):

+ self.lastDir = None

+

+ paths = tk.filedialog.askopenfilenames(

+ parent=self,

+ title=f'Select Music Files',

+ initialfile='',

+ initialdir=self.lastDir,

+ )

+ if paths: # Path selected

+ self.inputPaths = paths

+ dnd = 'no'

+ self.update_inputPaths()

+ nondnd = os.path.dirname(paths[0])

+ print('last dir', self.lastDir)

+

+ def open_export_filedialog(self):

+ """Make user select a folder to export the converted files in"""

+ path = tk.filedialog.askdirectory(

+ parent=self,

+ title=f'Select Folder',)

+ if path: # Path selected

+ self.exportPath_var.set(path)

+

+ def open_exportPath_filedialog(self):

+ filename = self.exportPath_var.get()

+

+ if sys.platform == "win32":

+ os.startfile(filename)

+ else:

+ opener = "open" if sys.platform == "darwin" else "xdg-open"

+ subprocess.call([opener, filename])

+

+ def open_inputPath_filedialog(self):

+ """Open Input Directory"""

+

+ try:

+ if dnd == 'yes':

+ self.lastDir = str(dnddir)

+ filename = str(self.lastDir)

+ if sys.platform == "win32":

+ os.startfile(filename)

+ if dnd == 'no':

+ self.lastDir = str(nondnd)

+ filename = str(self.lastDir)

+

+ if sys.platform == "win32":

+ os.startfile(filename)

+ except:

+ filename = str(self.lastDir)

+

+ if sys.platform == "win32":

+ os.startfile(filename)

+

+ def start_conversion(self):

+ """

+ Start the conversion for all the given mp3 and wav files

+ """

+

+ # -Get all variables-

+ export_path = self.exportPath_var.get()

+ input_paths = self.inputPaths

+ instrumentalModel_path = self.instrumentalLabel_to_path[self.instrumentalModel_var.get()] # nopep8

+ # mdxnetModel_path = self.mdxnetLabel_to_path[self.mdxnetModel_var.get()]

+ # Get constants

+ instrumental = self.instrumentalModel_var.get()

+ try:

+ if [bool(instrumental)].count(True) == 2: #CHECKTHIS

+ window_size = DEFAULT_DATA['window_size']

+ agg = DEFAULT_DATA['agg']

+ chunks = DEFAULT_DATA['chunks']

+ noisereduc_s = DEFAULT_DATA['noisereduc_s']

+ mixing = DEFAULT_DATA['mixing']

+ else:

+ window_size = int(self.winSize_var.get())

+ agg = int(self.agg_var.get())

+ chunks = str(self.chunks_var.get())

+ noisereduc_s = str(self.noisereduc_s_var.get())

+ mixing = str(self.mixing_var.get())

+ ensChoose = str(self.ensChoose_var.get())

+ mdxnetModel = str(self.mdxnetModel_var.get())

+

+ except SyntaxError: # Non integer was put in entry box

+ tk.messagebox.showwarning(master=self,

+ title='Invalid Music File',

+ message='You have selected an invalid music file!\nPlease make sure that your files still exist and ends with either ".mp3", ".mp4", ".m4a", ".flac", ".wav"')

+ return

+

+ # -Check for invalid inputs-

+

+ for path in input_paths:

+ if not os.path.isfile(path):

+ tk.messagebox.showwarning(master=self,

+ title='Drag and Drop Feature Failed or Invalid Input',

+ message='The input is invalid, or the drag and drop feature failed to select your files properly.\n\nPlease try the following:\n\n1. Select your inputs using the \"Select Input\" button\n2. Verify the input is valid.\n3. Then try again.')

+ return

+

+

+ if self.aiModel_var.get() == 'VR Architecture':

+ if not os.path.isfile(instrumentalModel_path):

+ tk.messagebox.showwarning(master=self,

+ title='Invalid Main Model File',

+ message='You have selected an invalid main model file!\nPlease make sure that your model file still exists!')

+ return

+

+ if not os.path.isdir(export_path):

+ tk.messagebox.showwarning(master=self,

+ title='Invalid Export Directory',

+ message='You have selected an invalid export directory!\nPlease make sure that your directory still exists!')

+ return

+

+ if self.aiModel_var.get() == 'VR Architecture':

+ inference = inference_v5

+ elif self.aiModel_var.get() == 'Ensemble Mode':

+ inference = inference_v5_ensemble

+ elif self.aiModel_var.get() == 'MDX-Net':

+ inference = inference_MDX

+ else:

+ raise TypeError('This error should not occur.')

+

+ # -Run the algorithm-

+ threading.Thread(target=inference.main,

+ kwargs={

+ # Paths

+ 'input_paths': input_paths,

+ 'export_path': export_path,

+ 'saveFormat': self.saveFormat_var.get(),

+ # Processing Options

+ 'gpu': 0 if self.gpuConversion_var.get() else -1,

+ 'postprocess': self.postprocessing_var.get(),

+ 'tta': self.tta_var.get(),

+ 'save': self.save_var.get(),

+ 'output_image': self.outputImage_var.get(),

+ 'algo': self.algo_var.get(),

+ # Models

+ 'instrumentalModel': instrumentalModel_path,

+ 'vocalModel': '', # Always not needed

+ 'useModel': 'instrumental', # Always instrumental

+ # Model Folder

+ 'modelFolder': self.modelFolder_var.get(),

+ # Constants

+ 'window_size': window_size,

+ 'agg': agg,

+ 'break': False,

+ 'ensChoose': ensChoose,

+ 'mdxnetModel': mdxnetModel,

+ # Other Variables (Tkinter)

+ 'window': self,

+ 'text_widget': self.command_Text,

+ 'button_widget': self.conversion_Button,

+ 'inst_menu': self.options_instrumentalModel_Optionmenu,

+ 'progress_var': self.progress_var,

+ # MDX-Net Specific

+ 'demucsmodel': self.demucsmodel_var.get(),

+ 'non_red': self.non_red_var.get(),

+ 'noise_reduc': self.noisereduc_var.get(),

+ 'voc_only': self.voc_only_var.get(),

+ 'inst_only': self.inst_only_var.get(),

+ 'chunks': chunks,

+ 'noisereduc_s': noisereduc_s,

+ 'mixing': mixing,

+ },

+ daemon=True

+ ).start()

+

+ # Models

+ def update_inputPaths(self):

+ """Update the music file entry"""

+ if self.inputPaths:

+ # Non-empty Selection

+ text = '; '.join(self.inputPaths)

+ else:

+ # Empty Selection

+ text = ''

+ self.inputPathsEntry_var.set(text)

+

+

+

+ def update_loop(self):

+ """Update the dropdown menu"""

+ self.update_available_models()

+

+ self.after(3000, self.update_loop)

+

+ def update_available_models(self):

+ """

+ Loop through every model (.pth) in the models directory

+ and add to the select your model list

+ """

+ temp_instrumentalModels_dir = os.path.join(instrumentalModels_dir, 'Main_Models') # nopep8

+

+ # Main models

+ new_InstrumentalModels = os.listdir(temp_instrumentalModels_dir)

+ if new_InstrumentalModels != self.lastInstrumentalModels:

+ self.instrumentalLabel_to_path.clear()

+ self.options_instrumentalModel_Optionmenu['menu'].delete(0, 'end')

+ for file_name in new_InstrumentalModels:

+ if file_name.endswith('.pth'):

+ # Add Radiobutton to the Options Menu

+ self.options_instrumentalModel_Optionmenu['menu'].add_radiobutton(label=file_name,

+ command=tk._setit(self.instrumentalModel_var, file_name))

+ # Link the files name to its absolute path

+ self.instrumentalLabel_to_path[file_name] = os.path.join(temp_instrumentalModels_dir, file_name) # nopep8

+ self.lastInstrumentalModels = new_InstrumentalModels

+

+ def update_states(self):

+ """

+ Vary the states for all widgets based

+ on certain selections

+ """

+

+ if self.aiModel_var.get() == 'MDX-Net':

+ # Place Widgets

+

+ # Choose MDX-Net Model

+ self.options_mdxnetModel_Label.place(x=0, y=19, width=0, height=-10,

+ relx=0, rely=6/self.COL1_ROWS, relwidth=1/3, relheight=1/self.COL1_ROWS)

+ self.options_mdxnetModel_Optionmenu.place(x=0, y=19, width=0, height=7,

+ relx=0, rely=7/self.COL1_ROWS, relwidth=1/3, relheight=1/self.COL1_ROWS)

+ # MDX-chunks

+ self.options_chunks_Label.place(x=12, y=0, width=0, height=-10,

+ relx=1/3, rely=2/self.COL2_ROWS, relwidth=1/3, relheight=1/self.COL2_ROWS)

+ self.options_chunks_Optionmenu.place(x=71, y=-2, width=-118, height=7,

+ relx=1/3, rely=3/self.COL2_ROWS, relwidth=1/3, relheight=1/self.COL2_ROWS)

+ # MDX-noisereduc_s

+ self.options_noisereduc_s_Label.place(x=15, y=0, width=0, height=-10,

+ relx=2/3, rely=2/self.COL2_ROWS, relwidth=1/3, relheight=1/self.COL2_ROWS)

+ self.options_noisereduc_s_Optionmenu.place(x=71, y=-2, width=-118, height=7,

+ relx=2/3, rely=3/self.COL2_ROWS, relwidth=1/3, relheight=1/self.COL2_ROWS)

+ #GPU Conversion

+ self.options_gpu_Checkbutton.configure(state=tk.NORMAL)

+ self.options_gpu_Checkbutton.place(x=35, y=21, width=0, height=5,

+ relx=1/3, rely=5/self.COL2_ROWS, relwidth=1/3, relheight=1/self.COL2_ROWS)

+ #Vocals Only

+ self.options_voc_only_Checkbutton.configure(state=tk.NORMAL)

+ self.options_voc_only_Checkbutton.place(x=35, y=21, width=0, height=5,

+ relx=1/3, rely=6/self.COL2_ROWS, relwidth=1/3, relheight=1/self.COL2_ROWS)

+ #Instrumental Only

+ self.options_inst_only_Checkbutton.configure(state=tk.NORMAL)

+ self.options_inst_only_Checkbutton.place(x=35, y=21, width=0, height=5,

+ relx=1/3, rely=7/self.COL2_ROWS, relwidth=1/3, relheight=1/self.COL2_ROWS)

+ # MDX-demucs Model

+ self.options_demucsmodel_Checkbutton.configure(state=tk.NORMAL)

+ self.options_demucsmodel_Checkbutton.place(x=35, y=21, width=0, height=5,

+ relx=2/3, rely=5/self.COL2_ROWS, relwidth=1/3, relheight=1/self.COL2_ROWS)

+

+ # MDX-Keep Non_Reduced Vocal

+ self.options_non_red_Checkbutton.configure(state=tk.NORMAL)

+ self.options_non_red_Checkbutton.place(x=35, y=21, width=0, height=5,

+ relx=2/3, rely=6/self.COL2_ROWS, relwidth=1/3, relheight=1/self.COL2_ROWS)

+ #Model Test Mode

+ self.options_modelFolder_Checkbutton.configure(state=tk.NORMAL)

+ self.options_modelFolder_Checkbutton.place(x=35, y=21, width=0, height=5,

+ relx=2/3, rely=7/self.COL2_ROWS, relwidth=1/3, relheight=1/self.COL2_ROWS)

+

+ # Forget widgets

+ self.options_ensChoose_Label.place_forget()

+ self.options_ensChoose_Optionmenu.place_forget()

+ self.options_instrumentalModel_Label.place_forget()

+ self.options_instrumentalModel_Optionmenu.place_forget()

+ self.options_save_Checkbutton.configure(state=tk.DISABLED)

+ self.options_save_Checkbutton.place_forget()

+ self.options_post_Checkbutton.configure(state=tk.DISABLED)

+ self.options_post_Checkbutton.place_forget()

+ self.options_tta_Checkbutton.configure(state=tk.DISABLED)

+ self.options_tta_Checkbutton.place_forget()

+ # self.options_image_Checkbutton.configure(state=tk.DISABLED)

+ # self.options_image_Checkbutton.place_forget()

+ self.options_winSize_Label.place_forget()

+ self.options_winSize_Optionmenu.place_forget()

+ self.options_agg_Label.place_forget()

+ self.options_agg_Optionmenu.place_forget()

+ self.options_algo_Label.place_forget()

+ self.options_algo_Optionmenu.place_forget()

+

+

+ elif self.aiModel_var.get() == 'VR Architecture':

+ #Keep for Ensemble & VR Architecture Mode

+ # Choose Main Model

+ self.options_instrumentalModel_Label.place(x=0, y=19, width=0, height=-10,

+ relx=0, rely=6/self.COL1_ROWS, relwidth=1/3, relheight=1/self.COL1_ROWS)

+ self.options_instrumentalModel_Optionmenu.place(x=0, y=19, width=0, height=7,

+ relx=0, rely=7/self.COL1_ROWS, relwidth=1/3, relheight=1/self.COL1_ROWS)

+ # WINDOW

+ self.options_winSize_Label.place(x=13, y=0, width=0, height=-10,

+ relx=1/3, rely=2/self.COL2_ROWS, relwidth=1/3, relheight=1/self.COL2_ROWS)

+ self.options_winSize_Optionmenu.place(x=71, y=-2, width=-118, height=7,

+ relx=1/3, rely=3/self.COL2_ROWS, relwidth=1/3, relheight=1/self.COL2_ROWS)

+ # AGG

+ self.options_agg_Label.place(x=15, y=0, width=0, height=-10,

+ relx=2/3, rely=2/self.COL2_ROWS, relwidth=1/3, relheight=1/self.COL2_ROWS)

+ self.options_agg_Optionmenu.place(x=71, y=-2, width=-118, height=7,

+ relx=2/3, rely=3/self.COL2_ROWS, relwidth=1/3, relheight=1/self.COL2_ROWS)

+ #GPU Conversion

+ self.options_gpu_Checkbutton.configure(state=tk.NORMAL)

+ self.options_gpu_Checkbutton.place(x=35, y=21, width=0, height=5,

+ relx=1/3, rely=5/self.COL2_ROWS, relwidth=1/3, relheight=1/self.COL2_ROWS)

+ #Vocals Only

+ self.options_voc_only_Checkbutton.configure(state=tk.NORMAL)

+ self.options_voc_only_Checkbutton.place(x=35, y=21, width=0, height=5,

+ relx=1/3, rely=6/self.COL2_ROWS, relwidth=1/3, relheight=1/self.COL2_ROWS)

+ #Instrumental Only

+ self.options_inst_only_Checkbutton.configure(state=tk.NORMAL)

+ self.options_inst_only_Checkbutton.place(x=35, y=21, width=0, height=5,

+ relx=1/3, rely=7/self.COL2_ROWS, relwidth=1/3, relheight=1/self.COL2_ROWS)

+ # TTA

+ self.options_tta_Checkbutton.configure(state=tk.NORMAL)

+ self.options_tta_Checkbutton.place(x=35, y=21, width=0, height=5,

+ relx=2/3, rely=5/self.COL2_ROWS, relwidth=1/3, relheight=1/self.COL2_ROWS)

+ #Post-Process

+ self.options_post_Checkbutton.configure(state=tk.NORMAL)

+ self.options_post_Checkbutton.place(x=35, y=21, width=0, height=5,

+ relx=2/3, rely=6/self.COL2_ROWS, relwidth=1/3, relheight=1/self.COL2_ROWS)

+ #Save Image

+ # self.options_image_Checkbutton.configure(state=tk.NORMAL)

+ # self.options_image_Checkbutton.place(x=35, y=21, width=0, height=5,

+ # relx=2/3, rely=5/self.COL2_ROWS, relwidth=1/3, relheight=1/self.COL2_ROWS)

+ #Model Test Mode

+ self.options_modelFolder_Checkbutton.configure(state=tk.NORMAL)

+ self.options_modelFolder_Checkbutton.place(x=35, y=21, width=0, height=5,

+ relx=2/3, rely=7/self.COL2_ROWS, relwidth=1/3, relheight=1/self.COL2_ROWS)

+ #Forget Widgets

+ self.options_ensChoose_Label.place_forget()

+ self.options_ensChoose_Optionmenu.place_forget()

+ self.options_chunks_Label.place_forget()

+ self.options_chunks_Optionmenu.place_forget()

+ self.options_noisereduc_s_Label.place_forget()

+ self.options_noisereduc_s_Optionmenu.place_forget()

+ self.options_mdxnetModel_Label.place_forget()

+ self.options_mdxnetModel_Optionmenu.place_forget()

+ self.options_algo_Label.place_forget()

+ self.options_algo_Optionmenu.place_forget()

+ self.options_save_Checkbutton.configure(state=tk.DISABLED)

+ self.options_save_Checkbutton.place_forget()

+ self.options_non_red_Checkbutton.configure(state=tk.DISABLED)

+ self.options_non_red_Checkbutton.place_forget()

+ self.options_noisereduc_Checkbutton.configure(state=tk.DISABLED)

+ self.options_noisereduc_Checkbutton.place_forget()

+ self.options_demucsmodel_Checkbutton.configure(state=tk.DISABLED)

+ self.options_demucsmodel_Checkbutton.place_forget()

+ self.options_non_red_Checkbutton.configure(state=tk.DISABLED)

+ self.options_non_red_Checkbutton.place_forget()

+

+ elif self.aiModel_var.get() == 'Ensemble Mode':

+ if self.ensChoose_var.get() == 'User Ensemble':

+ # Choose Algorithm

+ self.options_algo_Label.place(x=20, y=0, width=0, height=-10,

+ relx=1/3, rely=2/self.COL1_ROWS, relwidth=1/3, relheight=1/self.COL1_ROWS)

+ self.options_algo_Optionmenu.place(x=12, y=-2, width=0, height=7,

+ relx=1/3, rely=3/self.COL1_ROWS, relwidth=1/3, relheight=1/self.COL1_ROWS)

+ # Choose Ensemble

+ self.options_ensChoose_Label.place(x=0, y=19, width=0, height=-10,

+ relx=0, rely=6/self.COL1_ROWS, relwidth=1/3, relheight=1/self.COL1_ROWS)

+ self.options_ensChoose_Optionmenu.place(x=0, y=19, width=0, height=7,

+ relx=0, rely=7/self.COL1_ROWS, relwidth=1/3, relheight=1/self.COL1_ROWS)

+ # Forget Widgets

+ self.options_save_Checkbutton.configure(state=tk.DISABLED)

+ self.options_save_Checkbutton.place_forget()

+ self.options_post_Checkbutton.configure(state=tk.DISABLED)

+ self.options_post_Checkbutton.place_forget()

+ self.options_tta_Checkbutton.configure(state=tk.DISABLED)

+ self.options_tta_Checkbutton.place_forget()

+ self.options_modelFolder_Checkbutton.configure(state=tk.DISABLED)

+ self.options_modelFolder_Checkbutton.place_forget()

+ # self.options_image_Checkbutton.configure(state=tk.DISABLED)

+ # self.options_image_Checkbutton.place_forget()

+ self.options_gpu_Checkbutton.configure(state=tk.DISABLED)

+ self.options_gpu_Checkbutton.place_forget()

+ self.options_voc_only_Checkbutton.configure(state=tk.DISABLED)

+ self.options_voc_only_Checkbutton.place_forget()

+ self.options_inst_only_Checkbutton.configure(state=tk.DISABLED)

+ self.options_inst_only_Checkbutton.place_forget()

+ self.options_demucsmodel_Checkbutton.configure(state=tk.DISABLED)

+ self.options_demucsmodel_Checkbutton.place_forget()

+ self.options_noisereduc_Checkbutton.configure(state=tk.DISABLED)

+ self.options_noisereduc_Checkbutton.place_forget()

+ self.options_non_red_Checkbutton.configure(state=tk.DISABLED)

+ self.options_non_red_Checkbutton.place_forget()

+ self.options_chunks_Label.place_forget()

+ self.options_chunks_Optionmenu.place_forget()

+ self.options_noisereduc_s_Label.place_forget()

+ self.options_noisereduc_s_Optionmenu.place_forget()

+ self.options_mdxnetModel_Label.place_forget()

+ self.options_mdxnetModel_Optionmenu.place_forget()

+ self.options_winSize_Label.place_forget()

+ self.options_winSize_Optionmenu.place_forget()

+ self.options_agg_Label.place_forget()

+ self.options_agg_Optionmenu.place_forget()

+

+ elif self.ensChoose_var.get() == 'MDX-Net/VR Ensemble':

+ # Choose Ensemble

+ self.options_ensChoose_Label.place(x=0, y=19, width=0, height=-10,

+ relx=0, rely=6/self.COL1_ROWS, relwidth=1/3, relheight=1/self.COL1_ROWS)

+ self.options_ensChoose_Optionmenu.place(x=0, y=19, width=0, height=7,

+ relx=0, rely=7/self.COL1_ROWS, relwidth=1/3, relheight=1/self.COL1_ROWS)

+ # MDX-chunks

+ self.options_chunks_Label.place(x=12, y=0, width=0, height=-10,

+ relx=1/3, rely=2/self.COL2_ROWS, relwidth=1/3, relheight=1/self.COL2_ROWS)

+ self.options_chunks_Optionmenu.place(x=71, y=-2, width=-118, height=7,

+ relx=1/3, rely=3/self.COL2_ROWS, relwidth=1/3, relheight=1/self.COL2_ROWS)

+ # MDX-noisereduc_s

+ self.options_noisereduc_s_Label.place(x=15, y=0, width=0, height=-10,

+ relx=2/3, rely=2/self.COL2_ROWS, relwidth=1/3, relheight=1/self.COL2_ROWS)

+ self.options_noisereduc_s_Optionmenu.place(x=71, y=-2, width=-118, height=7,

+ relx=2/3, rely=3/self.COL2_ROWS, relwidth=1/3, relheight=1/self.COL2_ROWS)

+ # WINDOW

+ self.options_winSize_Label.place(x=13, y=-7, width=0, height=-10,

+ relx=1/3, rely=5/self.COL2_ROWS, relwidth=1/3, relheight=1/self.COL2_ROWS)

+ self.options_winSize_Optionmenu.place(x=71, y=-5, width=-118, height=7,

+ relx=1/3, rely=6/self.COL2_ROWS, relwidth=1/3, relheight=1/self.COL2_ROWS)

+ # AGG

+ self.options_agg_Label.place(x=15, y=-7, width=0, height=-10,

+ relx=2/3, rely=5/self.COL2_ROWS, relwidth=1/3, relheight=1/self.COL2_ROWS)

+ self.options_agg_Optionmenu.place(x=71, y=-5, width=-118, height=7,

+ relx=2/3, rely=6/self.COL2_ROWS, relwidth=1/3, relheight=1/self.COL2_ROWS)

+ #GPU Conversion

+ self.options_gpu_Checkbutton.configure(state=tk.NORMAL)

+ self.options_gpu_Checkbutton.place(x=35, y=3, width=0, height=5,

+ relx=1/3, rely=7/self.COL2_ROWS, relwidth=1/3, relheight=1/self.COL2_ROWS)

+ #Vocals Only

+ self.options_voc_only_Checkbutton.configure(state=tk.NORMAL)