<div align="center">

<h1>Retrieval-based-Voice-Conversion-WebUI</h1>
An easy-to-use Voice Conversion framework based on VITS.<br><br>

[GitHub repository](https://github.com/RVC-Project/Retrieval-based-Voice-Conversion-WebUI)

<img src="https://counter.seku.su/cmoe?name=rvc&theme=r34" /><br>

[Open in Colab](https://colab.research.google.com/github/RVC-Project/Retrieval-based-Voice-Conversion-WebUI/blob/main/Retrieval_based_Voice_Conversion_WebUI.ipynb)
[License](https://github.com/RVC-Project/Retrieval-based-Voice-Conversion-WebUI/blob/main/LICENSE)
[Hugging Face](https://huggingface.co/lj1995/VoiceConversionWebUI/tree/main/)

[Discord](https://discord.gg/HcsmBBGyVk)

</div>

------

[**Changelog**](https://github.com/RVC-Project/Retrieval-based-Voice-Conversion-WebUI/blob/main/docs/Changelog_EN.md) | [**FAQ (Frequently Asked Questions)**](https://github.com/RVC-Project/Retrieval-based-Voice-Conversion-WebUI/wiki/FAQ-(Frequently-Asked-Questions))

[**English**](../en/README.en.md) | [**中文简体**](../../README.md) | [**日本語**](../jp/README.ja.md) | [**한국어**](../kr/README.ko.md) ([**韓國語**](../kr/README.ko.han.md)) | [**Türkçe**](../tr/README.tr.md)

Check our [Demo Video](https://www.bilibili.com/video/BV1pm4y1z7Gm/) here!

|

||

|

||

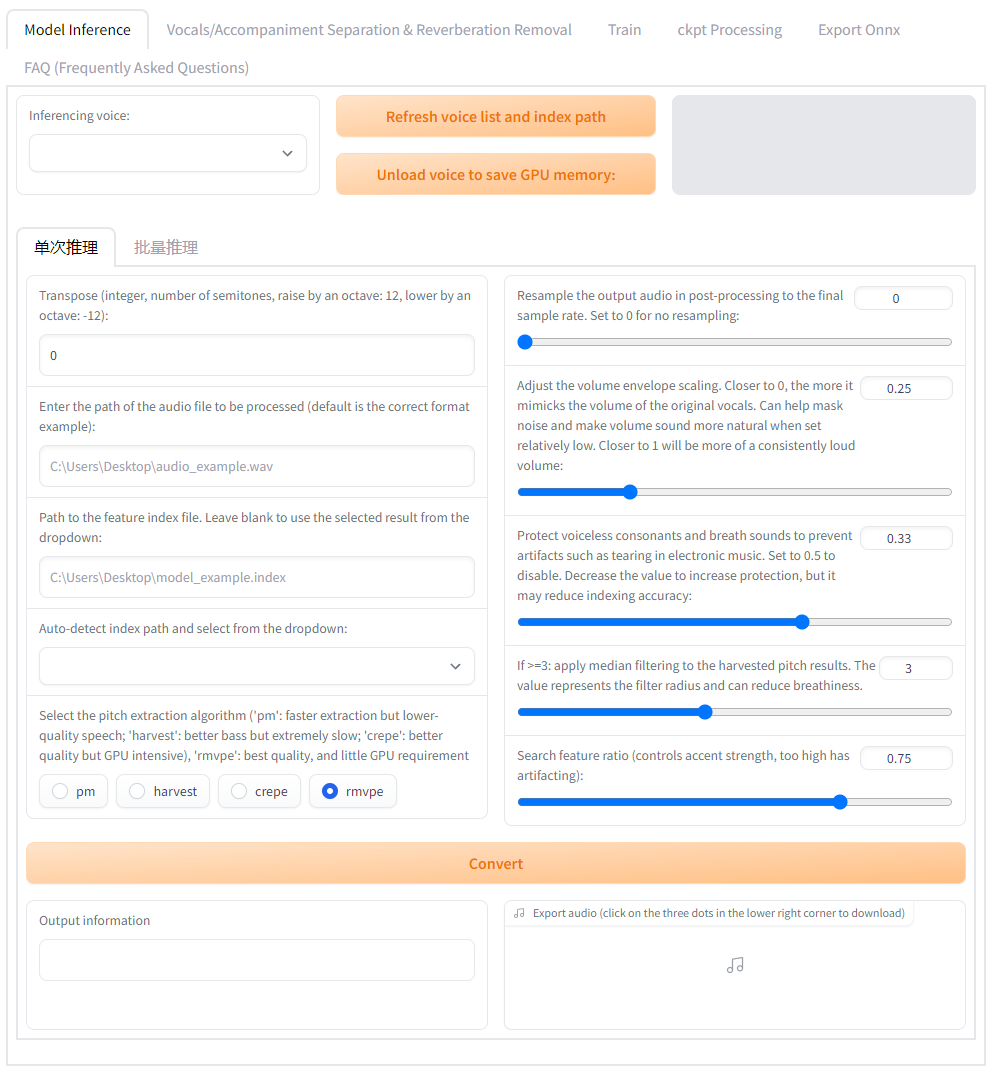

Training/Inference WebUI:go-web.bat

|

||

|

||

|

||

|

||

Realtime Voice Conversion GUI:go-realtime-gui.bat

|

||

|

||

|

||

|

||

> The pretrained base model was trained on nearly 50 hours of the high-quality, open-source VCTK dataset.

> High-quality licensed song datasets will be added to the training set over time, so you can use the models without worrying about copyright infringement.

> Stay tuned for the RVCv3 pretrained base model: it will have more parameters and more training data, deliver better results at the same inference speed, and need less data to train your own models on top of it.

## Summary

This repository has the following features:

+ Reduces tone leakage by replacing source features with training-set features using top-1 retrieval;
+ Easy and fast training, even on relatively weak graphics cards;
+ Good results even with a small amount of training data (at least 10 minutes of low-noise speech is recommended);
+ Model fusion to change timbres (via the ckpt processing tab -> ckpt merge);
+ Easy-to-use WebUI;
+ Quick separation of vocals and instruments with the UVR5 model;
+ The state-of-the-art vocal pitch extraction algorithm [InterSpeech2023-RMVPE](#credits), which prevents the muted-sound problem. It delivers significantly better results, and is faster with even lower resource consumption than Crepe_full;
+ Acceleration on AMD/Intel graphics cards;
+ Acceleration on Intel ARC graphics cards via IPEX.
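
The top-1 retrieval idea in the first feature can be pictured with a toy example: every frame-level content feature of the source audio is swapped for (or mixed with) the closest feature stored from the training set, so the output leans on the target speaker's features instead of the source speaker's. The sketch below is illustrative only and is not RVC's implementation: it assumes features are plain lists of floats, uses brute-force Euclidean search, and the names `top1_retrieve` and `blend` are hypothetical. A real feature bank is far larger, so an approximate nearest-neighbour index would normally replace the linear scan.

```python
from typing import List, Sequence

Vector = Sequence[float]


def _sq_dist(a: Vector, b: Vector) -> float:
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def top1_retrieve(
    source: Sequence[Vector],
    train_bank: Sequence[Vector],
    blend: float = 1.0,
) -> List[List[float]]:
    """Replace each source frame feature with its nearest training-set feature.

    Hypothetical helper: blend=1.0 keeps only the retrieved feature,
    blend=0.0 keeps the source feature, values in between mix them linearly.
    """
    if not train_bank:
        raise ValueError("train_bank must contain at least one feature vector")
    if not 0.0 <= blend <= 1.0:
        raise ValueError("blend must be in [0, 1]")
    out: List[List[float]] = []
    for frame in source:
        # Brute-force top-1 search over every training-set feature.
        nearest = min(train_bank, key=lambda cand: _sq_dist(frame, cand))
        out.append([blend * n + (1.0 - blend) * s for n, s in zip(nearest, frame)])
    return out


if __name__ == "__main__":
    bank = [[0.0, 0.0], [10.0, 10.0]]  # toy features from the target speaker's training set
    src = [[1.0, 2.0], [9.0, 8.0]]     # toy features from the source audio
    print(top1_retrieve(src, bank))             # [[0.0, 0.0], [10.0, 10.0]]
    print(top1_retrieve(src, bank, blend=0.5))  # [[0.5, 1.0], [9.5, 9.0]]
```

With `blend` below 1.0 the output keeps part of the source feature, which trades some leakage reduction for closer adherence to the source content.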

## Preparing the environment

The following commands must be run in a Python 3.8 (or higher) environment.
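
To confirm that the interpreter you are about to use meets this requirement, a small check like the one below can help. It is a minimal sketch that only compares version numbers against the 3.8 minimum from this section; `MIN_PYTHON` and `python_version_ok` are illustrative names, not part of RVC.

```python
import sys
from typing import Optional, Sequence

MIN_PYTHON = (3, 8)  # minimum version stated in this README


def python_version_ok(version: Optional[Sequence[int]] = None) -> bool:
    """Return True if (major, minor) of `version` (default: running interpreter) is >= MIN_PYTHON."""
    if version is None:
        version = sys.version_info
    return tuple(version[:2]) >= MIN_PYTHON


if __name__ == "__main__":
    found = ".".join(str(part) for part in sys.version_info[:3])
    if python_version_ok():
        print(f"Python {found}: OK")
    else:
        sys.exit(f"Python {found} is too old; 3.8 or higher is required")
```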

(Windows/Linux)

First, install the main dependencies with pip:

```bash
# Install the core PyTorch dependencies; skip if already installed
# Reference: https://pytorch.org/get-started/locally/
pip install torch torchvision torchaudio

# For Windows + Nvidia Ampere architecture (RTX30xx), you need to specify the CUDA version of PyTorch, based on the experience in https://github.com/RVC-Project/Retrieval-based-Voice-Conversion-WebUI/issues/21
#pip install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu117

# For Linux + AMD cards, you need to use the following PyTorch versions:
#pip install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/rocm5.4.2
```
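
After installing PyTorch, it can be worth checking which accelerator your build actually sees before installing the rest. The sketch below uses only standard PyTorch attributes: ROCm builds expose the GPU through the `torch.cuda` API and set `torch.version.hip`, and Apple Silicon is reported by `torch.backends.mps.is_available()`. The `getattr` guards cover older builds that lack one of these. `detect_backend` is a hypothetical helper, and DirectML or IPEX setups are not detected by this check.

```python
from typing import Optional


def detect_backend() -> str:
    """Best-effort guess of the PyTorch accelerator backend (hypothetical helper)."""
    try:
        import torch
    except ImportError:
        return "torch-not-installed"

    if torch.cuda.is_available():
        # ROCm builds also report through torch.cuda; torch.version.hip is only set on them.
        hip_version: Optional[str] = getattr(torch.version, "hip", None)
        return "rocm" if hip_version else "cuda"

    mps = getattr(torch.backends, "mps", None)
    if mps is not None and mps.is_available():
        return "mps"  # Apple Silicon GPU
    return "cpu"


if __name__ == "__main__":
    print(detect_backend())
```

If this prints `cpu` on a machine with an Nvidia or AMD card, the installed wheel most likely does not match your GPU, and one of the `--index-url` variants above may be needed.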

Then you can use Poetry to install the other dependencies:

```bash
# Install the Poetry dependency management tool; skip if already installed
# Reference: https://python-poetry.org/docs/#installation
curl -sSL https://install.python-poetry.org | python3 -

# Install the project dependencies
poetry install
```

You can also use pip to install them:

```bash
# For Nvidia graphics cards
pip install -r requirements.txt

# For AMD/Intel graphics cards on Windows (DirectML)
pip install -r requirements-dml.txt

# For Intel ARC graphics cards on Linux / WSL using Python 3.10
pip install -r requirements-ipex.txt

# For AMD graphics cards on Linux (ROCm)
pip install -r requirements-amd.txt
```
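
Choosing among these files comes down to your GPU vendor and operating system. The lookup below just restates the four cases from the pip block above as data, which can be handy in a setup script. `requirements_for` and its vendor/OS keys are illustrative assumptions (WSL is treated as `"linux"`, and `"intel"` on Linux stands for the Intel ARC case listed above); combinations this README does not cover raise an error instead of guessing.

```python
from typing import Dict, Tuple

# (gpu vendor, operating system) -> requirements file, as listed in this README.
_REQUIREMENTS: Dict[Tuple[str, str], str] = {
    ("nvidia", "windows"): "requirements.txt",
    ("nvidia", "linux"): "requirements.txt",
    ("amd", "windows"): "requirements-dml.txt",    # DirectML
    ("intel", "windows"): "requirements-dml.txt",  # DirectML
    ("intel", "linux"): "requirements-ipex.txt",   # Intel ARC via IPEX, Python 3.10
    ("amd", "linux"): "requirements-amd.txt",      # ROCm
}


def requirements_for(vendor: str, os_name: str) -> str:
    """Return the requirements file for a vendor/OS pair (hypothetical helper)."""
    key = (vendor.lower(), os_name.lower())
    try:
        return _REQUIREMENTS[key]
    except KeyError:
        raise ValueError(f"no requirements file listed for {vendor} on {os_name}") from None


if __name__ == "__main__":
    print("pip install -r " + requirements_for("amd", "linux"))
```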

------

Mac users can install the dependencies via `run.sh`:

```bash
sh ./run.sh
```

## Preparing other pre-trained models

RVC requires several other pre-trained models for inference and training.

```bash
# Download all required models from https://huggingface.co/lj1995/VoiceConversionWebUI/tree/main/
python tools/download_models.py
```

Alternatively, download them yourself from our [Hugging Face repository](https://huggingface.co/lj1995/VoiceConversionWebUI/tree/main/).
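
If you download individual files yourself, note that Hugging Face `.../blob/main/...` links (like the ones in the list below) open a web page, while the same path with `resolve` in place of `blob` serves the raw file. The sketch below performs that rewrite and downloads with the standard library only. `to_direct_url` and `download` are hypothetical helper names, and the script does no checksum verification.

```python
import shutil
import urllib.request
from pathlib import Path
from urllib.parse import urlsplit, urlunsplit


def to_direct_url(url: str) -> str:
    """Turn a huggingface.co '/blob/' page URL into a '/resolve/' raw-file URL."""
    parts = urlsplit(url)
    if parts.netloc != "huggingface.co":
        raise ValueError(f"not a huggingface.co URL: {url}")
    # Replace only the first '/blob/' segment (repo/blob/revision/file).
    path = parts.path.replace("/blob/", "/resolve/", 1)
    return urlunsplit(parts._replace(path=path))


def download(url: str, dest: Path) -> Path:
    """Stream the file behind `url` to `dest`, creating parent directories as needed."""
    dest.parent.mkdir(parents=True, exist_ok=True)
    with urllib.request.urlopen(to_direct_url(url)) as resp, open(dest, "wb") as fh:
        shutil.copyfileobj(resp, fh)
    return dest


if __name__ == "__main__":
    page = "https://huggingface.co/lj1995/VoiceConversionWebUI/blob/main/rmvpe.pt"
    print(to_direct_url(page))
    # download(page, Path("rmvpe.pt"))  # uncomment to actually fetch the file
```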

Here is a list of the pre-trained models and other files that RVC needs:

```bash
./assets/hubert/hubert_base.pt

./assets/pretrained

./assets/uvr5_weights

# If you want to test the v2 version of the model (v2 changes the input from the 256-dimensional features of 9-layer HuBERT + final_proj to the 768-dimensional features of 12-layer HuBERT, and adds 3 period discriminators), you also need to download:
./assets/pretrained_v2

# If you are using Windows, you may also need these two files; skip if FFmpeg and FFprobe are already installed
ffmpeg.exe
https://huggingface.co/lj1995/VoiceConversionWebUI/blob/main/ffmpeg.exe

ffprobe.exe
https://huggingface.co/lj1995/VoiceConversionWebUI/blob/main/ffprobe.exe

# To use the latest SOTA RMVPE vocal pitch extraction algorithm, download the RMVPE weights and place them in the RVC root directory
https://huggingface.co/lj1995/VoiceConversionWebUI/blob/main/rmvpe.pt

# AMD/Intel graphics card users need to download:
https://huggingface.co/lj1995/VoiceConversionWebUI/blob/main/rmvpe.onnx
```
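
Before launching, you can check that the files listed above are in place. This is a minimal sketch, assuming you run it from the RVC root directory and that the paths are exactly those in the list (with `rmvpe.pt` in the root directory, as stated above); `missing_assets` and its `v2`/`rmvpe` flags are hypothetical. It also uses `shutil.which` to report whether `ffmpeg` and `ffprobe` are already on your `PATH`.

```python
import shutil
from pathlib import Path
from typing import List

# Paths from the list in this README, relative to the RVC root directory.
BASE_ASSETS = [
    "assets/hubert/hubert_base.pt",
    "assets/pretrained",
    "assets/uvr5_weights",
]
V2_ASSETS = ["assets/pretrained_v2"]  # only needed for v2 models
RMVPE_ASSETS = ["rmvpe.pt"]           # RMVPE weights in the RVC root directory


def missing_assets(root: Path, v2: bool = False, rmvpe: bool = False) -> List[str]:
    """Return the listed paths that do not exist under `root` (hypothetical helper)."""
    wanted = list(BASE_ASSETS)
    if v2:
        wanted += V2_ASSETS
    if rmvpe:
        wanted += RMVPE_ASSETS
    return [p for p in wanted if not (root / p).exists()]


def missing_tools() -> List[str]:
    """Return the FFmpeg executables that cannot be found on PATH."""
    return [tool for tool in ("ffmpeg", "ffprobe") if shutil.which(tool) is None]


if __name__ == "__main__":
    for path in missing_assets(Path("."), v2=True, rmvpe=True):
        print(f"missing: {path}")
    for tool in missing_tools():
        print(f"not found on PATH: {tool}")
```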

Intel ARC graphics card users need to run `source /opt/intel/oneapi/setvars.sh` before starting the WebUI.

Then start the WebUI with this command:

```bash
python infer-web.py
```
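
`source` only changes the environment of the shell that runs it, so on Intel ARC systems the oneAPI setup and the WebUI launch have to happen in the same shell session. If you start RVC from a script, one option is a single `bash -c` invocation, as in this sketch. `build_launch_command` is a hypothetical helper; it assumes `bash` is installed and that you run it from the RVC root directory. It only builds the command, which you can then pass to `subprocess.run`.

```python
import shlex
from typing import List

ONEAPI_SETVARS = "/opt/intel/oneapi/setvars.sh"  # path given in this README


def build_launch_command(intel_arc: bool = False, python: str = "python") -> List[str]:
    """Build an argv list that starts infer-web.py (hypothetical helper)."""
    if not intel_arc:
        return [python, "infer-web.py"]
    # Source the oneAPI environment and launch the WebUI in the *same* bash process.
    launch = f"{shlex.quote(python)} infer-web.py"
    return ["bash", "-c", f"source {shlex.quote(ONEAPI_SETVARS)} && {launch}"]


if __name__ == "__main__":
    # Print the command; pass the list to subprocess.run(...) to actually start the WebUI.
    print(shlex.join(build_launch_command(intel_arc=True)))
```

Running `source` in one subprocess and `python infer-web.py` in another would not work, because the second process never sees the variables set by the first.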

If you are using Windows or macOS, you can also download and extract `RVC-beta.7z` and use RVC directly: start the WebUI with `go-web.bat` on Windows or `sh ./run.sh` on macOS.

## ROCm Support for AMD graphics cards (Linux only)

To use ROCm on Linux, install all required drivers as described [here](https://rocm.docs.amd.com/en/latest/deploy/linux/os-native/install.html).

On Arch Linux, use pacman to install the driver:

````
pacman -S rocm-hip-sdk rocm-opencl-sdk
````

You might also need to set these environment variables (e.g. on an RX 6700 XT):

````
export ROCM_PATH=/opt/rocm
export HSA_OVERRIDE_GFX_VERSION=10.3.0
````
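
If you launch the WebUI from a Python script rather than an interactive shell, you can pass these variables through the child process's environment instead of exporting them globally. A minimal sketch, assuming the example values shown above (`HSA_OVERRIDE_GFX_VERSION` is card-specific, so adjust it for your GPU); `rocm_env` is a hypothetical helper.

```python
import os
from typing import Dict, Mapping, Optional


def rocm_env(
    gfx_version: str = "10.3.0",
    rocm_path: str = "/opt/rocm",
    base: Optional[Mapping[str, str]] = None,
) -> Dict[str, str]:
    """Return a copy of `base` (default: os.environ) with the ROCm variables set."""
    env = dict(os.environ if base is None else base)
    env["ROCM_PATH"] = rocm_path
    # Card-specific override; the README's example value is for an RX 6700 XT.
    env["HSA_OVERRIDE_GFX_VERSION"] = gfx_version
    return env


if __name__ == "__main__":
    env = rocm_env()
    print({k: env[k] for k in ("ROCM_PATH", "HSA_OVERRIDE_GFX_VERSION")})
    # To launch: subprocess.run(["python", "infer-web.py"], env=env, check=True)
```

Copying the environment keeps `PATH` and the rest of your settings intact while only the two ROCm variables change for the child process.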

Also make sure your user is a member of the `render` and `video` groups:

````
sudo usermod -aG render $USER
sudo usermod -aG video $USER
````
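
To confirm the group setup, you can compare your current groups with the two required ones. This sketch is Linux/Unix-only: it uses the standard `grp` module and `os.getgroups()`, which reports the groups of the *current process*, so it will not reflect a group you just added until you start a new login session. `current_group_names` and `missing_groups` are hypothetical helpers.

```python
import os
from typing import Iterable, List

REQUIRED_GROUPS = ("render", "video")


def current_group_names() -> List[str]:
    """Names of the groups the current process belongs to (Linux/Unix only)."""
    import grp  # not available on Windows

    names = []
    for gid in os.getgroups():
        try:
            names.append(grp.getgrgid(gid).gr_name)
        except KeyError:  # gid without a group database entry
            continue
    return names


def missing_groups(user_groups: Iterable[str], required: Iterable[str] = REQUIRED_GROUPS) -> List[str]:
    """Return the required groups that are not in `user_groups`, in order."""
    have = set(user_groups)
    return [g for g in required if g not in have]


if __name__ == "__main__":
    try:
        names = current_group_names()
    except ImportError:
        print("grp is unavailable on this platform; this check is for Linux only")
    else:
        missing = missing_groups(names)
        print("OK" if not missing else "not yet in: " + ", ".join(missing))
```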

Log out and back in so the new group membership takes effect, then run the WebUI:

```bash
python infer-web.py
```

## Credits

+ [ContentVec](https://github.com/auspicious3000/contentvec/)
+ [VITS](https://github.com/jaywalnut310/vits)
+ [HIFIGAN](https://github.com/jik876/hifi-gan)
+ [Gradio](https://github.com/gradio-app/gradio)
+ [FFmpeg](https://github.com/FFmpeg/FFmpeg)
+ [Ultimate Vocal Remover](https://github.com/Anjok07/ultimatevocalremovergui)
+ [audio-slicer](https://github.com/openvpi/audio-slicer)
+ [Vocal pitch extraction: RMVPE](https://github.com/Dream-High/RMVPE)
  + The pretrained model was trained and tested by [yxlllc](https://github.com/yxlllc/RMVPE) and [RVC-Boss](https://github.com/RVC-Boss).

## Thanks to all contributors for their efforts

<a href="https://github.com/RVC-Project/Retrieval-based-Voice-Conversion-WebUI/graphs/contributors" target="_blank">
<img src="https://contrib.rocks/image?repo=RVC-Project/Retrieval-based-Voice-Conversion-WebUI" />
</a>